Project highlights

Task

Prototype a multimodal loan journey (voice + text + Generative UI)

Team

1 UX Engineer, 2 UX/UI Designers, 1 AI Service Designer, 1 Coordinator

Duration

2.5 weeks (initial sprint) → 10-week refinement post-demo

Scope

Discovery, multimodal patterns, Generative UI, live prototype, agent/tool orchestration, real-time voice testing, observability mock

About the client

The client is a leading consumer finance platform that helps millions of people manage everyday money, from credit and investments to insurance and shopping, inside a single super app.

With 200M+ customers, 4,000+ service locations, and 50+ financial offerings, it serves both digital natives and first-time users. Backed by a strong innovation center and deep AI/cloud partnerships, the company is now moving from fintech success story to FinAI pioneer.

What the client needed

The company had launched 100+ AI use cases, with another 100+ in progress, but its existing digital products still felt like traditional fintech. It partnered with us to prototype an AI-native loan experience that would:

-

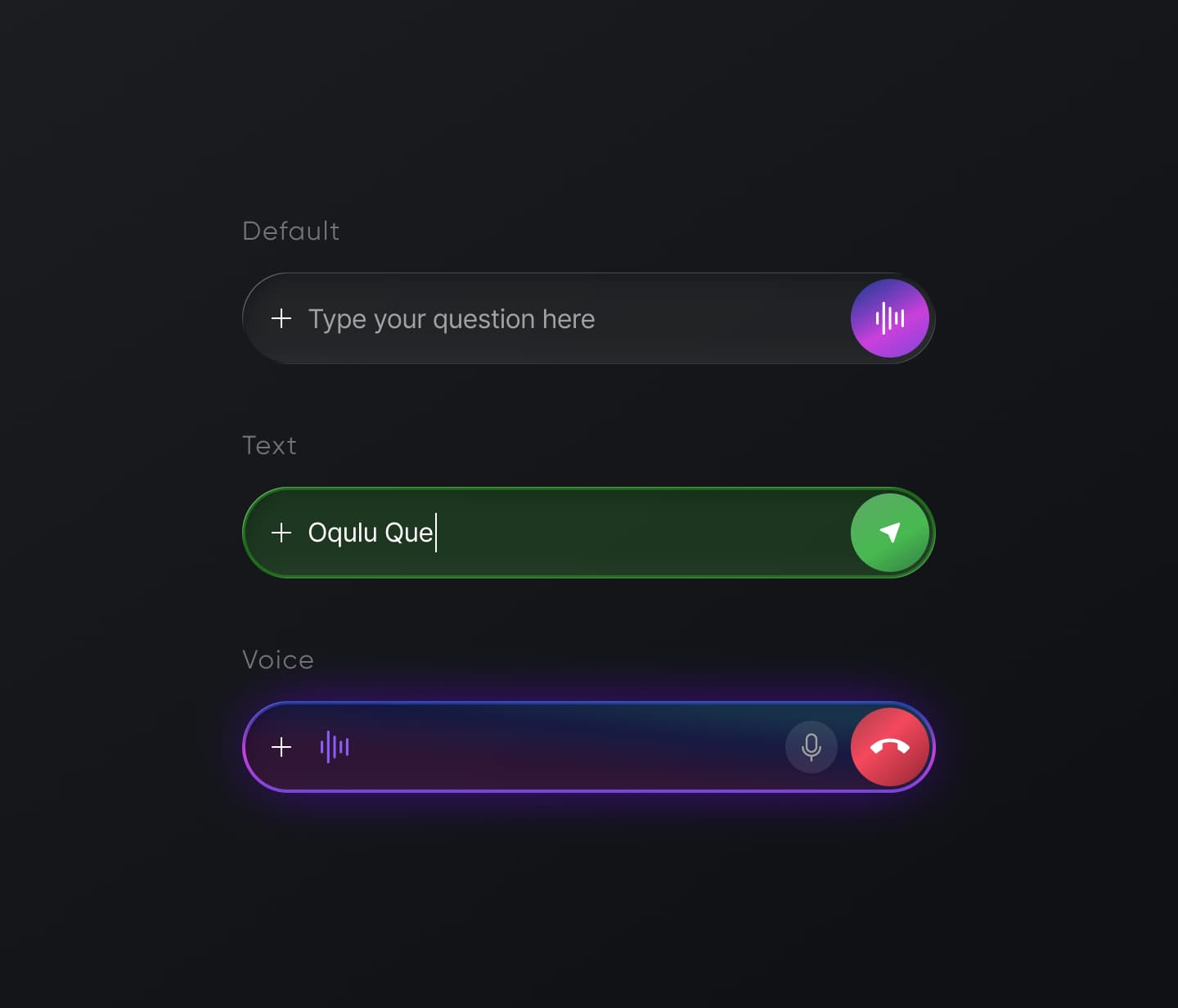

work fluidly across voice, text, and tap, with no dead ends;

-

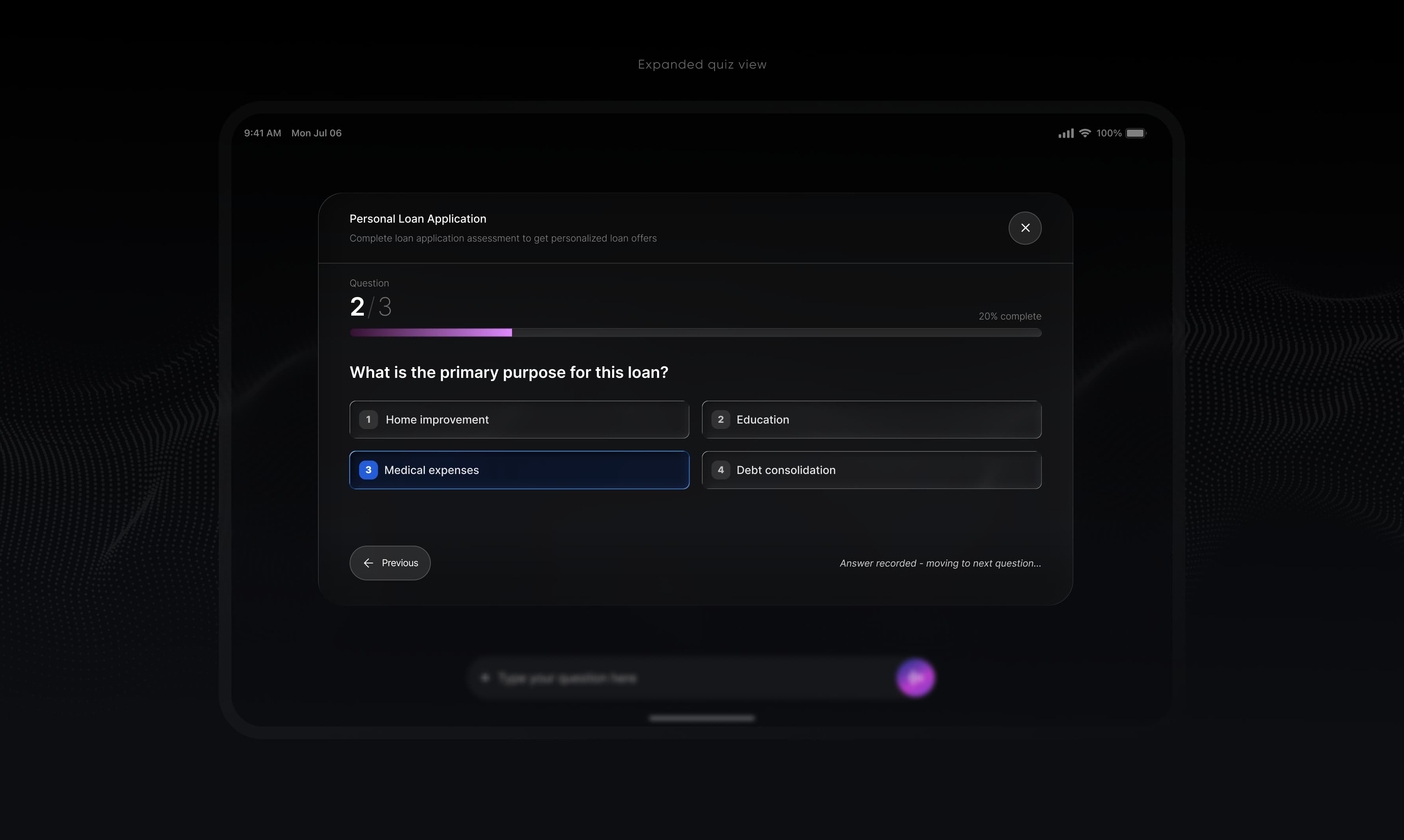

reduce friction in sensitive steps like credit checks and loan applications;

-

build trust with clear states and explicit confirmations;

-

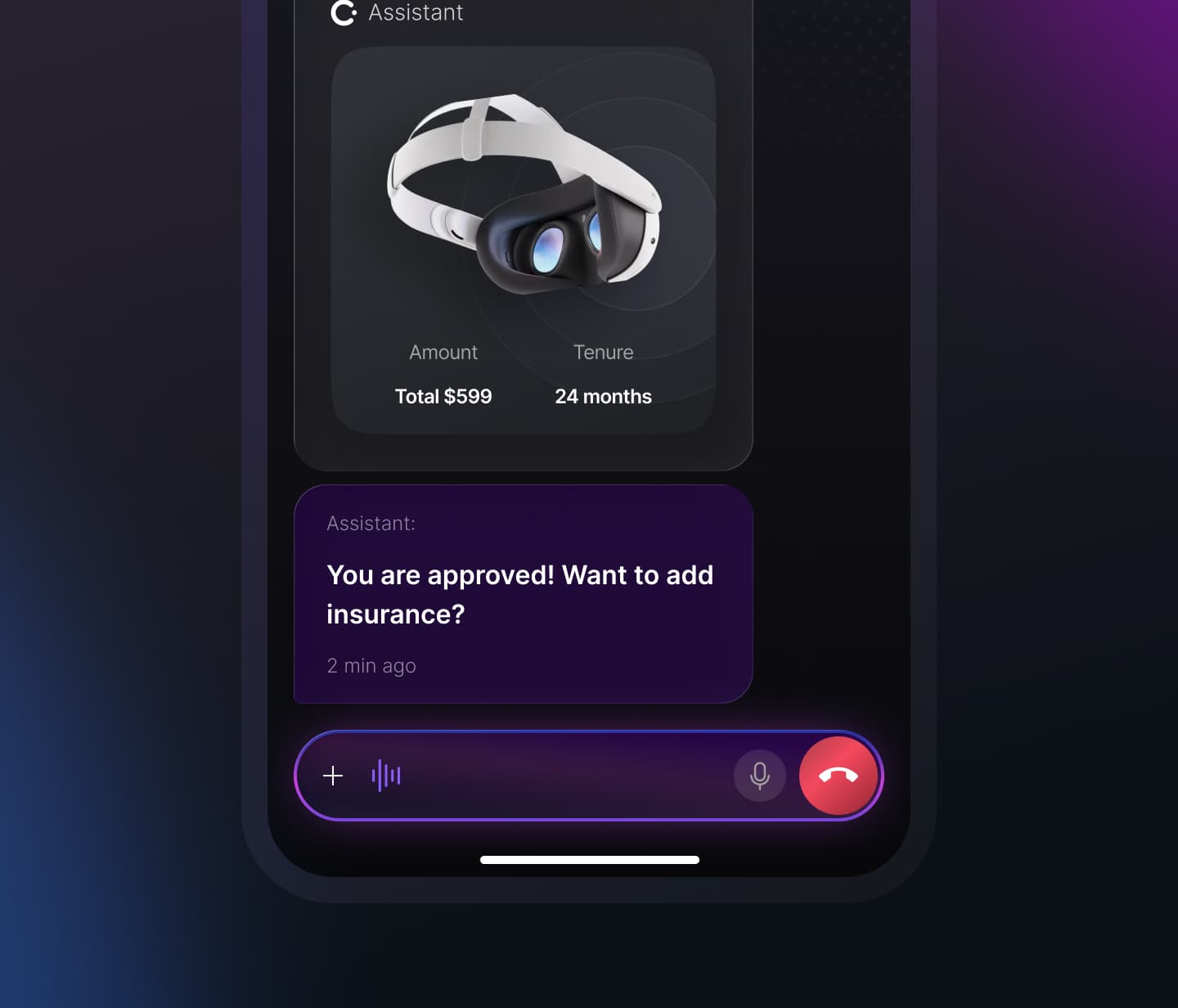

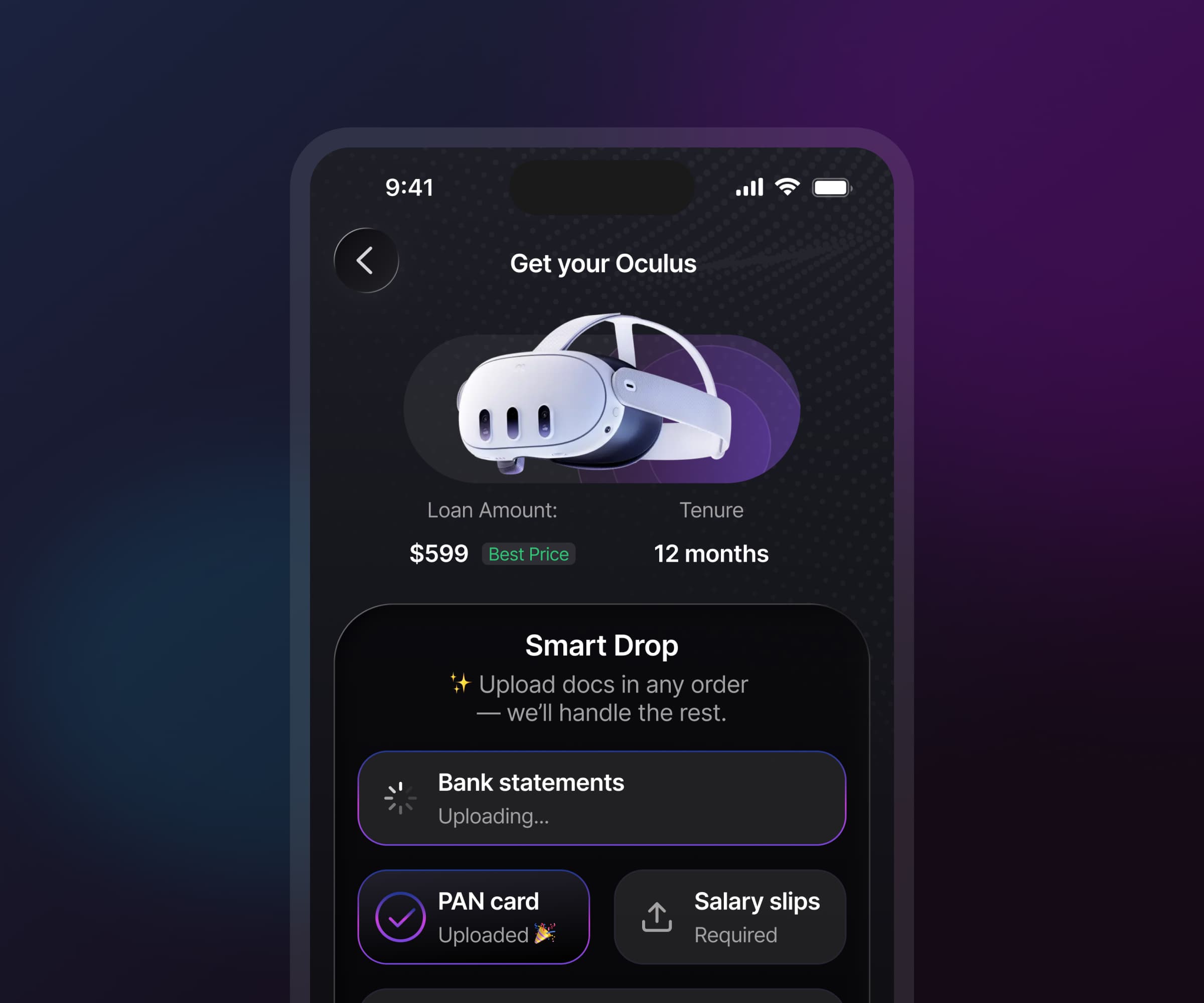

showcase Generative UI inside the conversation;

-

stay brand-agnostic and mobile-first;

-

demonstrate real-time orchestration across tools and modalities.

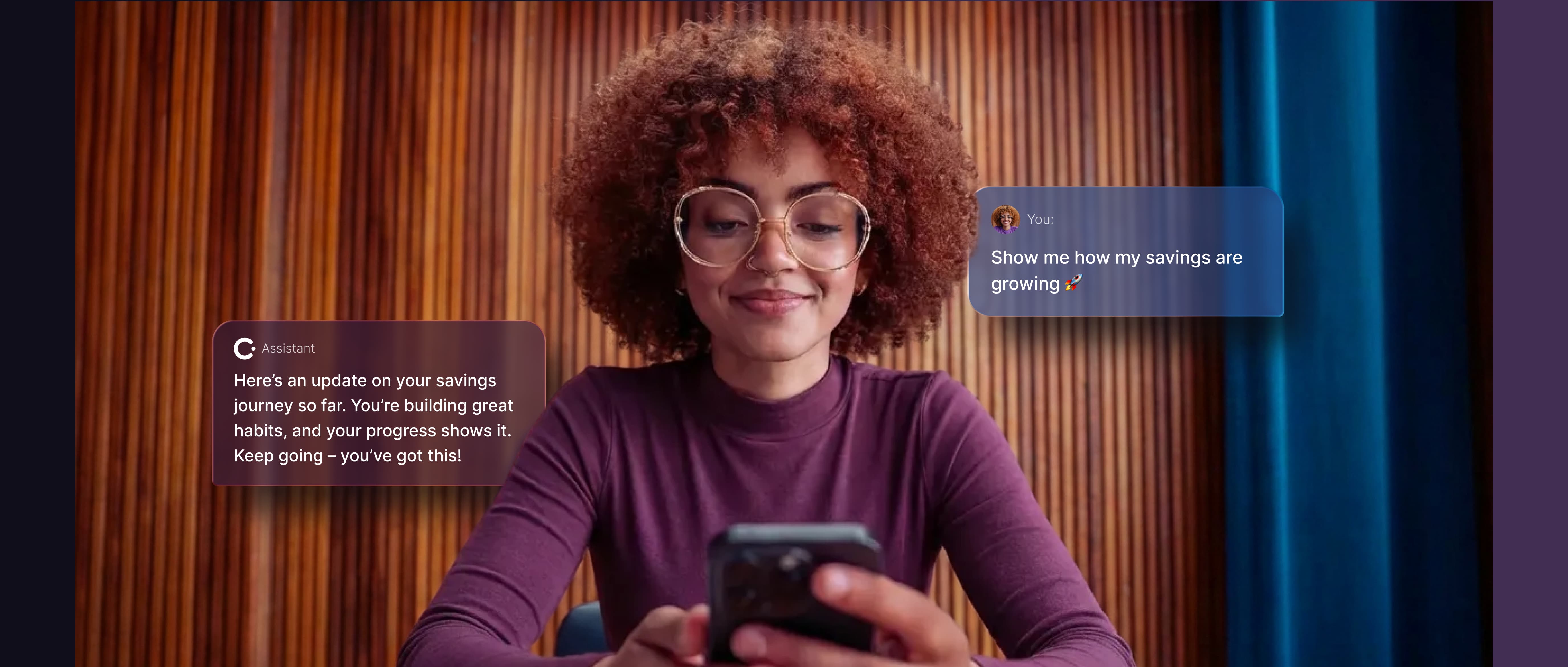

We aimed to go beyond screens, building a conversational layer and a Generative UI system that would demonstrate how voice user interface design can enable complex financial interactions.

Challenges

- voice, text, and UI drifted out of sync in early tests, breaking the illusion of a single conversation;

- voice latency had to stay under a second, since responses slower than 2–3 s felt unusable;

- financial forms had too many steps at once, risking cognitive overload;

- users needed clarity about what the assistant was doing behind the scenes;

- voice sessions sometimes failed to end cleanly, which called for explicit turn-taking rules;

- existing GenAI and voice SDKs lacked observability, which complicated debugging and UX validation.

Results

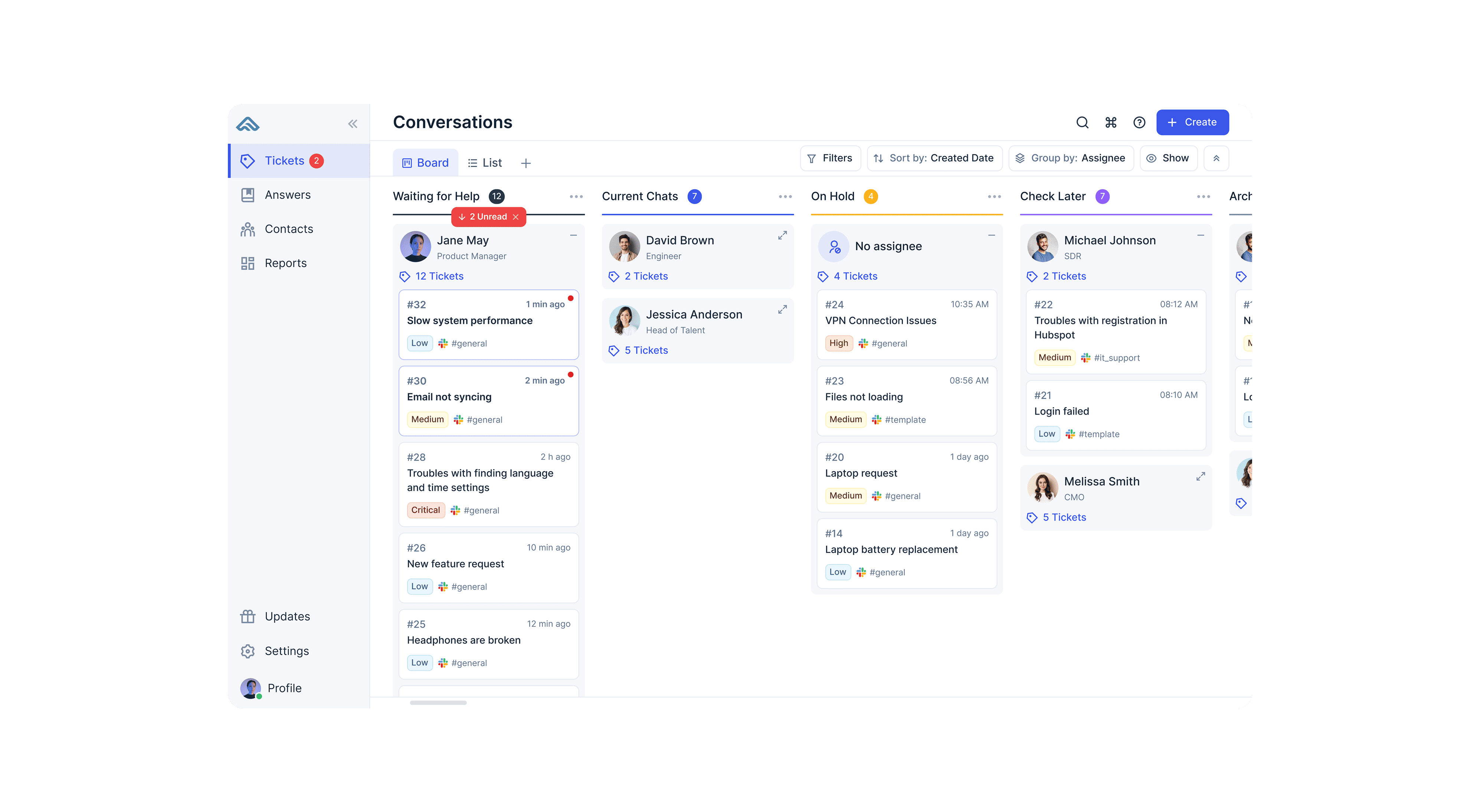

- a working multimodal loan prototype demonstrating real-world conversational finance;

- seamless switching between voice → text → tap with preserved conversational context;

- explicit consent flows for sensitive actions (credit checks, identity lookups);

- Generative UI for EMIs (equated monthly installments), loan comparisons, and financial insights;

- 11+ core actions covered (from voice-start loan flow to EMI exploration);

- latency and orchestration validated in live tests under production-like conditions.
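The explicit consent flows for sensitive actions can be pictured as a gate in front of the assistant's tool calls. The sketch below is a minimal illustration under stated assumptions, not the prototype's code: it assumes a hypothetical tool layer where the assistant requests actions by name, and the names `ToolName`, `SENSITIVE_TOOLS`, `ConsentGate`, `creditCheck`, `identityLookup`, and `emiCalculator` are all illustrative. A sensitive action pauses with a confirmation prompt until the user grants consent (by voice, text, or tap), and each grant covers a single run.

```typescript
// Hypothetical tool names; not the prototype's actual identifiers.
type ToolName = "creditCheck" | "identityLookup" | "emiCalculator";

// Human-readable labels used in confirmation prompts (illustrative).
const LABELS: Record<ToolName, string> = {
  creditCheck: "credit check",
  identityLookup: "identity lookup",
  emiCalculator: "EMI calculation",
};

// Actions assumed to require an explicit user confirmation before running.
const SENSITIVE_TOOLS: ReadonlySet<ToolName> = new Set<ToolName>([
  "creditCheck",
  "identityLookup",
]);

type GateResult =
  | { status: "run"; tool: ToolName }
  | { status: "needsConsent"; tool: ToolName; prompt: string };

class ConsentGate {
  // Confirmations the user has given and that have not been used yet.
  private granted = new Set<ToolName>();

  // Record a confirmation, whichever modality it arrived through.
  grant(tool: ToolName): void {
    this.granted.add(tool);
  }

  // Decide whether a requested tool call may run or must pause for consent.
  check(tool: ToolName): GateResult {
    if (!SENSITIVE_TOOLS.has(tool)) {
      return { status: "run", tool };
    }
    if (this.granted.has(tool)) {
      // Consume the confirmation so the next sensitive call asks again.
      this.granted.delete(tool);
      return { status: "run", tool };
    }
    return {
      status: "needsConsent",
      tool,
      prompt: `May I start the ${LABELS[tool]} now?`,
    };
  }
}

// Usage: the first credit check pauses for consent; after a grant it runs once.
const gate = new ConsentGate();
console.log(gate.check("creditCheck").status); // needsConsent
gate.grant("creditCheck");
console.log(gate.check("creditCheck").status); // run
console.log(gate.check("creditCheck").status); // needsConsent
```

Making each grant single-use is a deliberate choice in this sketch: a user who agreed to one credit check has not agreed to every later one, so the assistant asks again rather than carrying consent silently across the conversation.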