Project highlights

Task

Prototype a multimodal loan journey (voice + text + Generative UI)

Team

1 UX Engineer, 2 UX/UI Designers, 1 AI Service Designer, 1 Coordinator

Duration

2.5 weeks (initial sprint) → 10-week refinement post-demo

Scope

Discovery, multimodal patterns, Generative UI, live prototype, agent/tool orchestration, real-time voice testing, observability mock

About the client

A leading consumer finance platform helping millions manage everyday money (from credit and investments to insurance and shopping) inside one super app.

Serving 200M+ customers, 4,000+ service locations, and 50+ financial offerings, it supports both digital natives and first-time users. Backed by a strong innovation center and deep AI/cloud partnerships, the company is now transitioning from fintech success to FinAI pioneer.

What the client needed

The company had launched 100+ AI use cases, with another 100+ in progress, but their existing digital products still felt like traditional fintech. They partnered with us to prototype an AI-native loan experience that would:

- work fluidly across voice, text, and tap, with no dead ends;

- reduce friction in sensitive steps like credit checks and loan applications;

- build trust with clear states and explicit confirmations;

- showcase Generative UI inside the conversation;

- stay brand-agnostic and mobile-first;

- demonstrate real-time orchestration across tools and modalities.

We aimed to go beyond screens, building a conversational layer and a Generative UI system that would demonstrate how voice user interface design can enable complex financial interactions.

Challenges

- voice, text, and UI drifted out of sync in early tests, breaking the illusion of a single conversation;

- sub-second voice latency was required, as anything over 2-3s felt unusable;

- financial forms had too many steps at once, risking cognitive overload;

- users needed clarity about what the assistant was doing behind the scenes;

- voice sessions sometimes failed to end cleanly, requiring rules for turn-taking;

- existing GenAI + voice SDKs lacked observability, complicating debugging and UX validation.

Results

- a working multimodal loan prototype demonstrating real-world conversational finance;

- seamless switching between voice → text → tap with preserved conversational context;

- explicit consent flows for sensitive actions (credit checks, identity lookups);

- Generative UI for EMIs, loan comparisons, and financial insights;

- 11+ core actions covered (from voice-start loan flow to EMI exploration);

- latency and orchestration validated in live tests under production-like conditions.

Our approach to voice user interface design

We worked with the client’s innovation team at startup speed – just 2.5 weeks from idea to demo. The initial sprint focused on one high-impact use case: how an everyday loan journey could feel in an AI-native world.

We kicked off with discovery, scanning regulatory signals, market patterns, and multimodal UX trends using AI-accelerated research. This fed into a cross-functional brainstorming session that aligned us on clarity and control as core principles.

Execution happened in three fast-moving tracks that ran in parallel:

- tech feasibility: a coded POC in Next.js, Convex, and ElevenLabs, with an evolving agent-orchestration and observability framework for tool testing;

- UX: defining rules for "who has the floor," turn detection, and recovery patterns between voice, text, and tap;

- UI: early experiments with Generative UI cards, quizzes, and calculators.

We skipped static mocks in favor of a live demo. Following the sprint, the prototype continued evolving through a 10-week refinement phase, transforming into a production-ready foundation for multimodal finance.

🎥 See the assistant in action

Join our CEO Yuriy for a quick, no-obligation demo where he walks through the prototype and key insights.

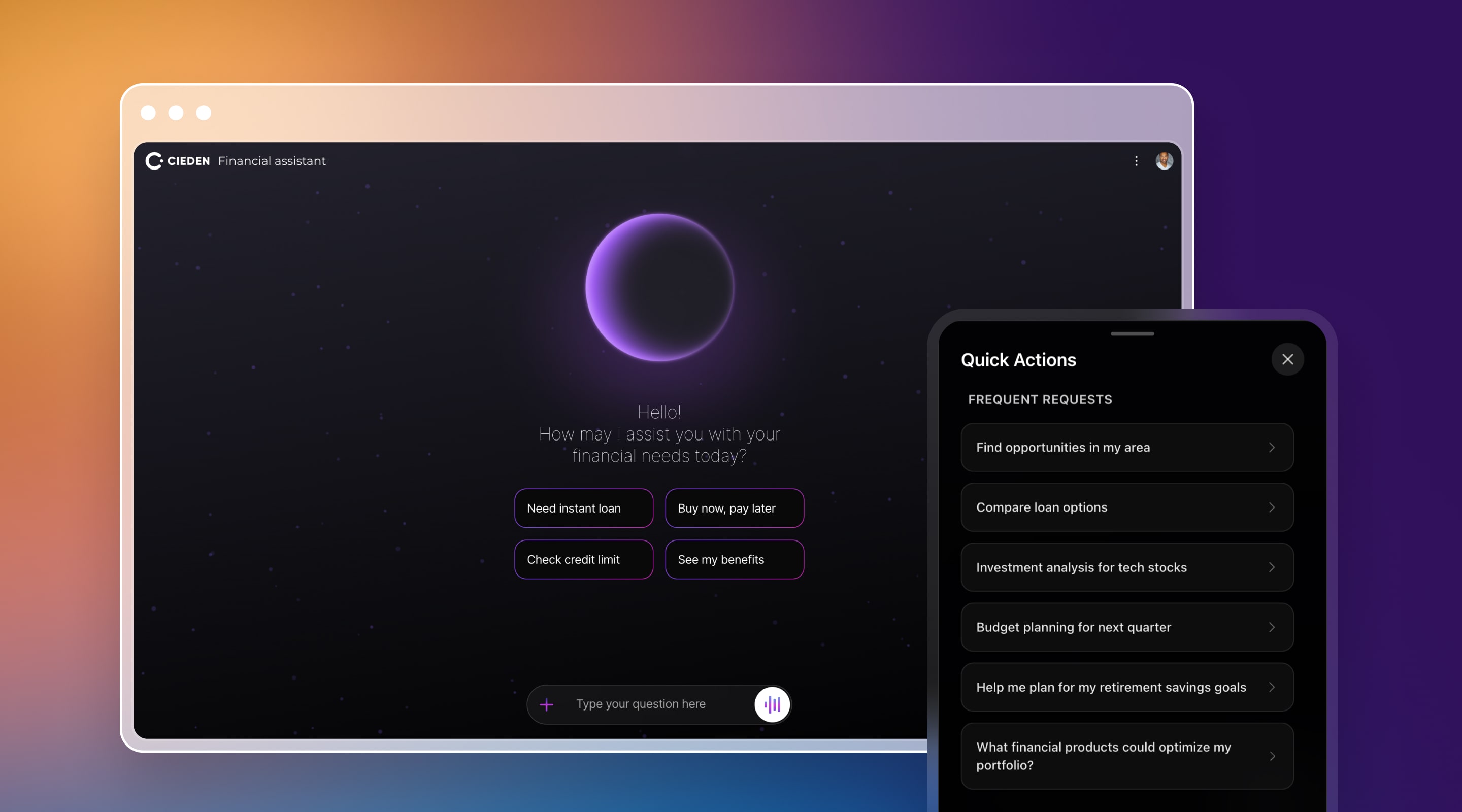

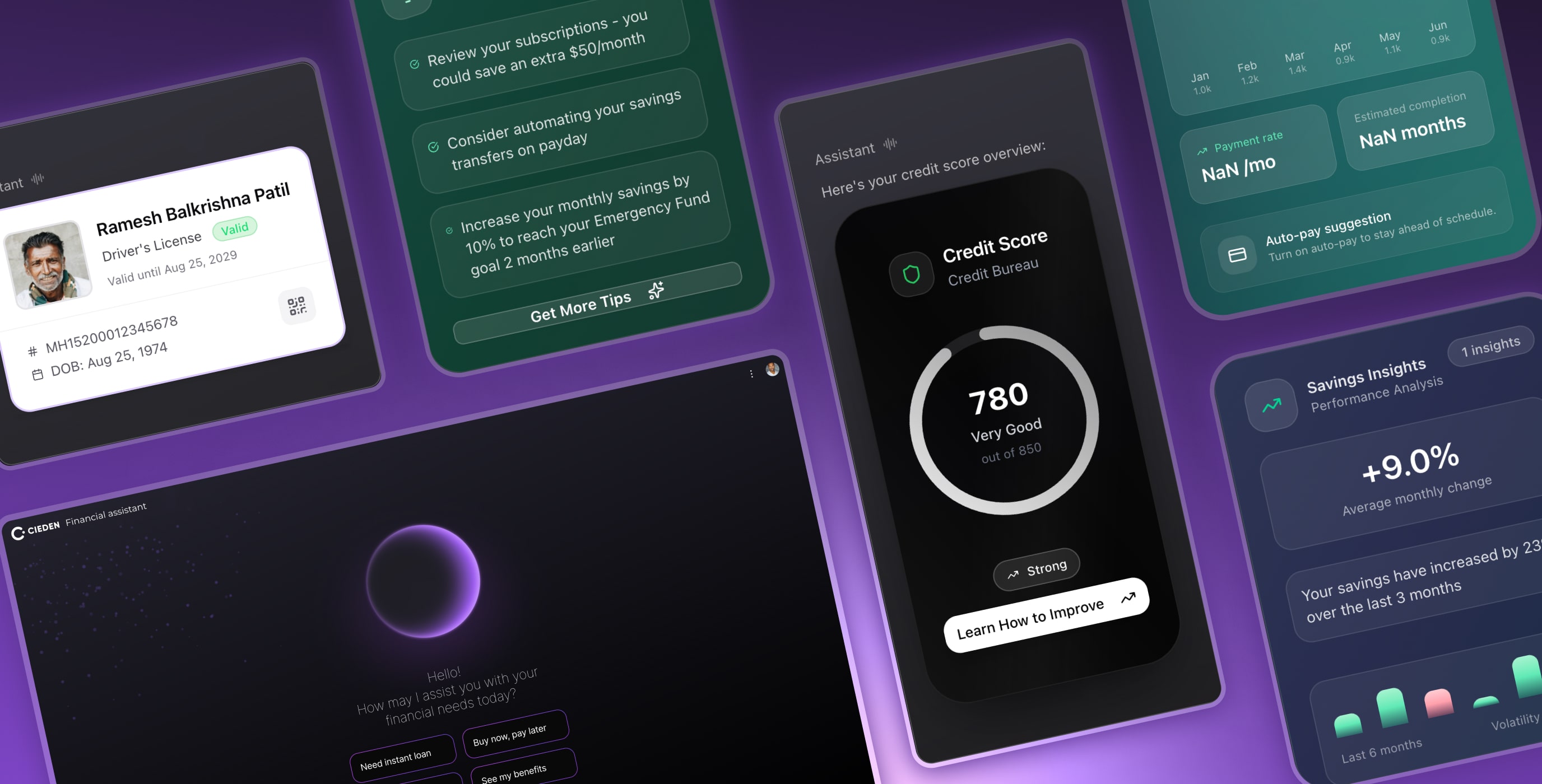

Multimodal interaction flow

FinPilot, the prototype assistant, was designed around a single principle: the user should never need to think about which mode they are in. Requests could start in voice, continue with typing, and end with a quick tap, all within one conversation.

To achieve this, we created a multimodal continuity model, defining how context is preserved and how the assistant behaves when modes switch:

- continuous context across all three modes, with no resets;

- live text bar active during voice, enabling mid-speech corrections;

- quick-action shortcuts for mode switching;

- conversation bubbles combining spoken and typed turns;

- behavioral rules for switching (e.g., when typing interrupts voice, when voice resumes after taps).
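To make the continuity model concrete, here is a minimal TypeScript sketch of a single shared thread that accepts turns from any mode. The type names, the `applyTurn` function, and the specific switching rules (typing pauses voice, speaking reactivates it, taps leave it unchanged) are illustrative assumptions, not the prototype's actual implementation.

```typescript
// Hypothetical data model: the case study does not describe the real one.
type Mode = "voice" | "text" | "tap";

interface Turn {
  mode: Mode;
  content: string;
}

interface ConversationState {
  turns: Turn[];        // one shared thread for all modes, never reset
  voiceActive: boolean; // whether the voice session currently holds the floor
}

const initialState: ConversationState = { turns: [], voiceActive: false };

// Assumed switching rules: a typed turn pauses voice, a spoken turn
// (re)activates it, and a tap leaves the voice session as it was.
function applyTurn(state: ConversationState, turn: Turn): ConversationState {
  let voiceActive = state.voiceActive;
  if (turn.mode === "voice") voiceActive = true;
  if (turn.mode === "text") voiceActive = false;
  // Context is preserved by appending to the same thread on every turn.
  return { turns: [...state.turns, turn], voiceActive };
}
```

Because every mode appends to the same `turns` array, a request that starts in voice and ends with a tap is still one conversation from the assistant's point of view.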

Multilingual voice support

The client operates across diverse linguistic markets, so we validated whether the flow could remain stable as users switched languages mid-conversation.

We introduced a language-flex model: voice detection → immediate language state update → context carries forward without resetting the thread.
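As a rough illustration of the language-flex idea, the sketch below updates the language state on each detected utterance while appending to the same thread. The `ThreadState` shape and the `onVoiceTurn` function are hypothetical, and language detection itself is assumed to happen upstream (for example, in the speech service).

```typescript
// Hypothetical thread shape; the language tag is whatever the upstream
// detector reports, e.g. "en" or "hi".
interface ThreadMessage {
  text: string;
  language: string;
}

interface ThreadState {
  language: string;          // language of the most recent turn
  messages: ThreadMessage[]; // full history, kept across language switches
}

// Update the language state immediately, but never reset the thread.
function onVoiceTurn(
  state: ThreadState,
  text: string,
  detectedLanguage: string
): ThreadState {
  return {
    language: detectedLanguage,
    messages: [...state.messages, { text, language: detectedLanguage }],
  };
}
```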

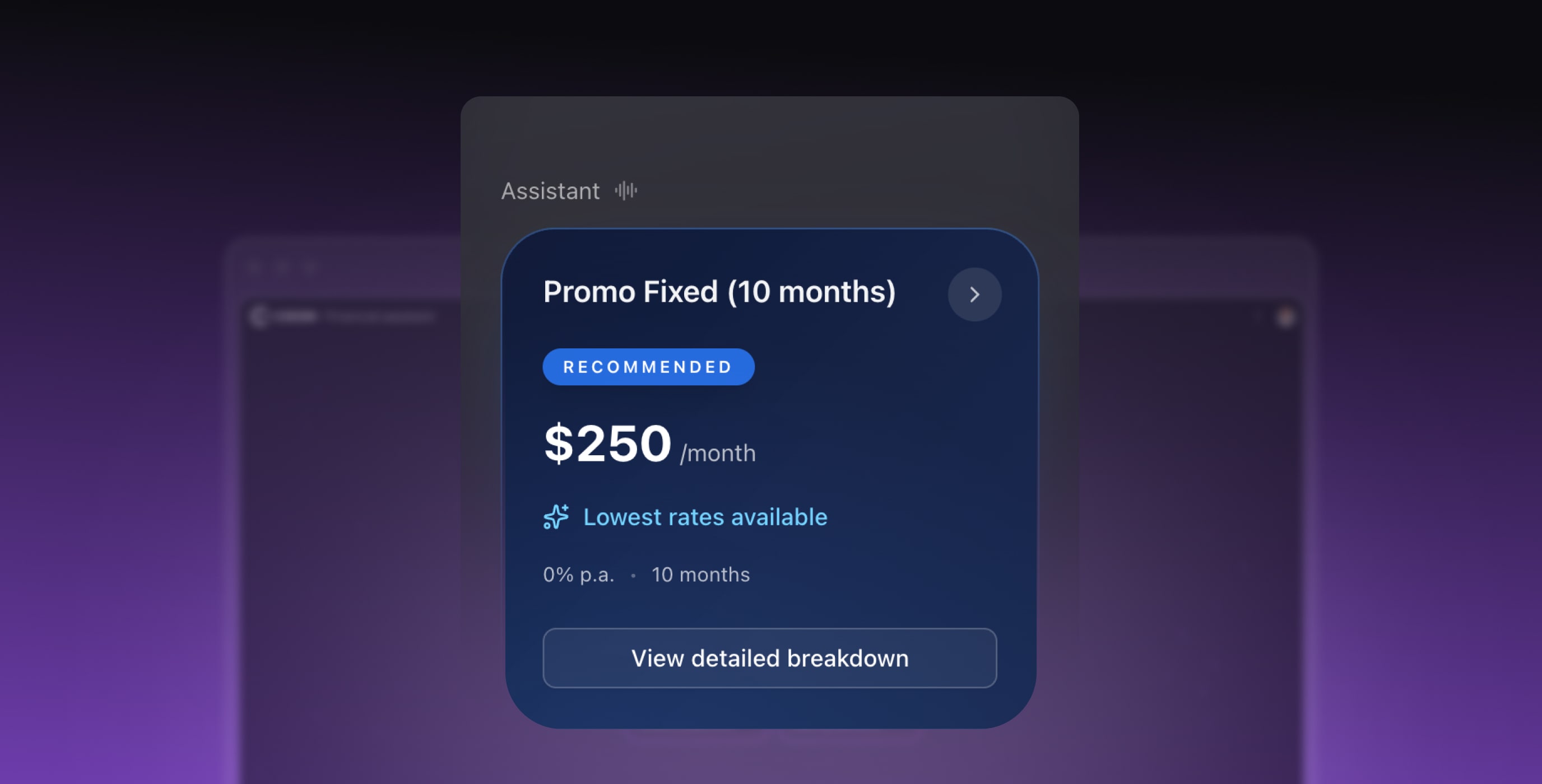

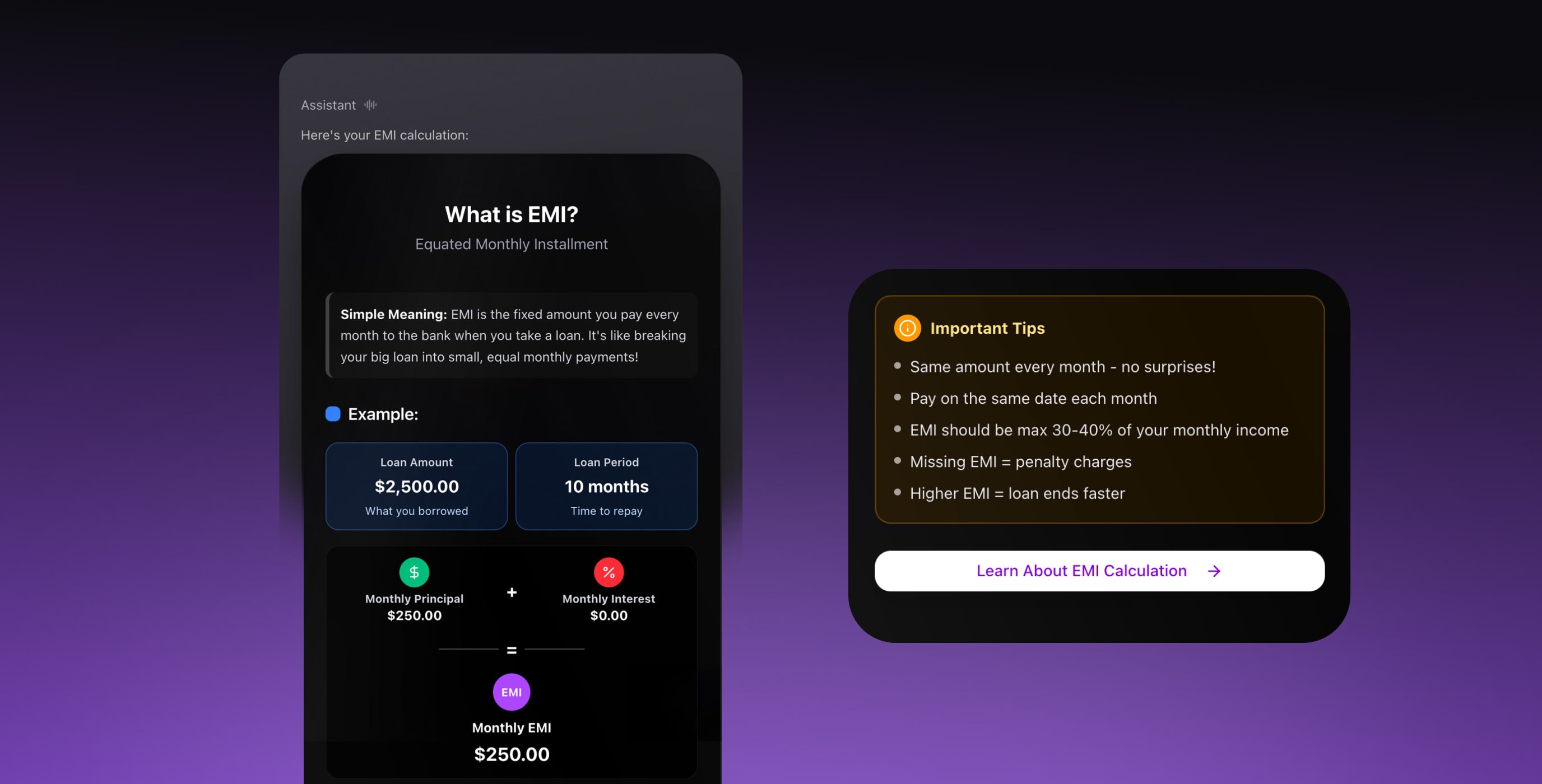

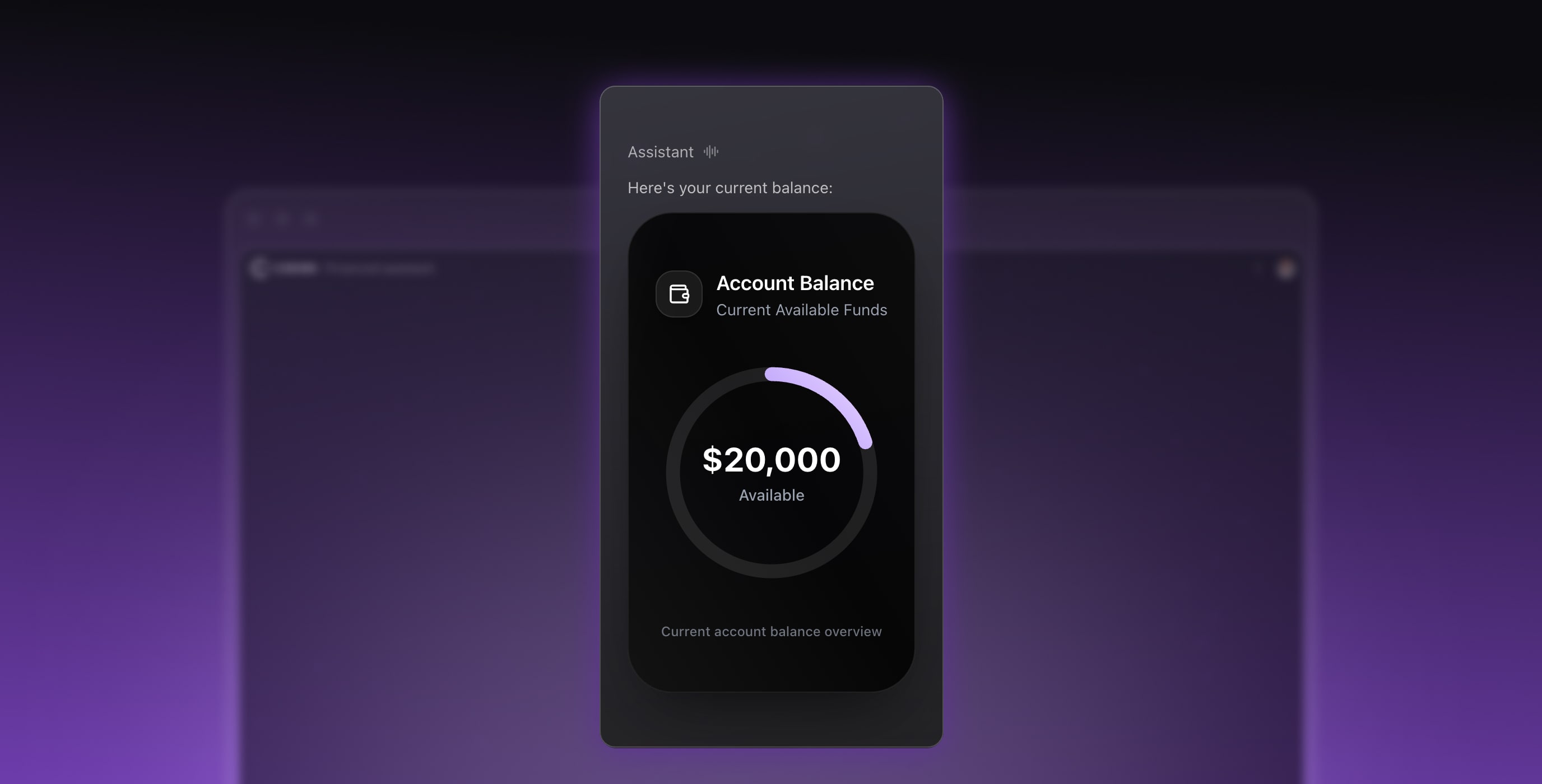

Generative UI components

The UI was generated in response to the conversation. This required a generative layout model that defined how financial information expands and transforms based on user intent.

Instead of static forms, the assistant generated interface elements in real time: loan cards, calculators, and sliders appeared directly in the thread, turning financial details into touchable interactions users could follow at a glance:

- loan comparison cards with “recommended” labels and at-a-glance EMIs;

- interactive EMI breakdowns and repayment timelines;

- secure document cards surfaced only when needed;

- expandable details using progressive disclosure to reduce overload.
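One way to picture a loan comparison card is as plain data that the conversation layer produces and the UI renders. The sketch below uses the standard amortized EMI formula; the `LoanOffer` and `LoanCard` types are hypothetical, and the rule that "recommended" marks the lowest EMI is an assumption, since the case study does not say how recommendations were chosen.

```typescript
interface LoanOffer {
  name: string;
  principal: number;     // amount borrowed
  annualRatePct: number; // nominal annual interest rate, in percent
  months: number;        // tenure in months
}

interface LoanCard {
  kind: "loan-card";
  name: string;
  emi: number;           // monthly instalment, rounded to 2 decimals
  recommended: boolean;
}

// Standard amortized EMI: P * r * (1 + r)^n / ((1 + r)^n - 1), where r is
// the monthly rate. Falls back to P / n when the rate is zero.
function emi(offer: LoanOffer): number {
  const r = offer.annualRatePct / 100 / 12;
  if (r === 0) return offer.principal / offer.months;
  const growth = Math.pow(1 + r, offer.months);
  return (offer.principal * r * growth) / (growth - 1);
}

// Assumption: the offer with the lowest EMI gets the "recommended" label
// (ties are all labeled).
function buildComparisonCards(offers: LoanOffer[]): LoanCard[] {
  const lowest = Math.min(...offers.map(emi));
  return offers.map((offer): LoanCard => {
    const value = emi(offer);
    return {
      kind: "loan-card",
      name: offer.name,
      emi: Math.round(value * 100) / 100,
      recommended: value === lowest,
    };
  });
}
```

Keeping the card as data rather than markup means the same spec could back a voice summary, a rendered card, or an expandable detail view.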

Guided loan quiz

When users weren’t sure which loan to pick, FinPilot shifted from open-ended conversation into a guided quiz, following a decision-scaffold pattern. Instead of filling out a long form, they just needed to answer five short questions with a visible progress bar:

- single-choice steps replacing long, multi-screen forms;

- real-time adaptation (answers fed directly into loan eligibility and EMI logic);

- final recommendation tailored to user inputs.
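The quiz mechanics can be sketched as a small state object with single-choice answers and a progress fraction for the progress bar. All names here (`QuizState`, `answerQuestion`, `progress`, `nextQuestion`) are hypothetical, and the sketch leaves out the eligibility and EMI logic that the answers fed into.

```typescript
interface QuizQuestion {
  id: string;
  prompt: string;
  options: string[]; // single-choice options
}

interface QuizState {
  questions: QuizQuestion[];
  answers: Record<string, string>; // question id -> chosen option
}

function startQuiz(questions: QuizQuestion[]): QuizState {
  return { questions, answers: {} };
}

// Record one single-choice answer; reject options that were not offered.
function answerQuestion(state: QuizState, id: string, option: string): QuizState {
  const question = state.questions.find((q) => q.id === id);
  if (!question || question.options.indexOf(option) === -1) {
    throw new Error(`Invalid answer for question "${id}"`);
  }
  return { ...state, answers: { ...state.answers, [id]: option } };
}

// Fraction of questions answered (0 to 1), for the visible progress bar.
function progress(state: QuizState): number {
  if (state.questions.length === 0) return 1;
  const answered = state.questions.filter((q) => q.id in state.answers).length;
  return answered / state.questions.length;
}

// First unanswered question, or undefined once the quiz is complete.
function nextQuestion(state: QuizState): QuizQuestion | undefined {
  return state.questions.find((q) => !(q.id in state.answers));
}
```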

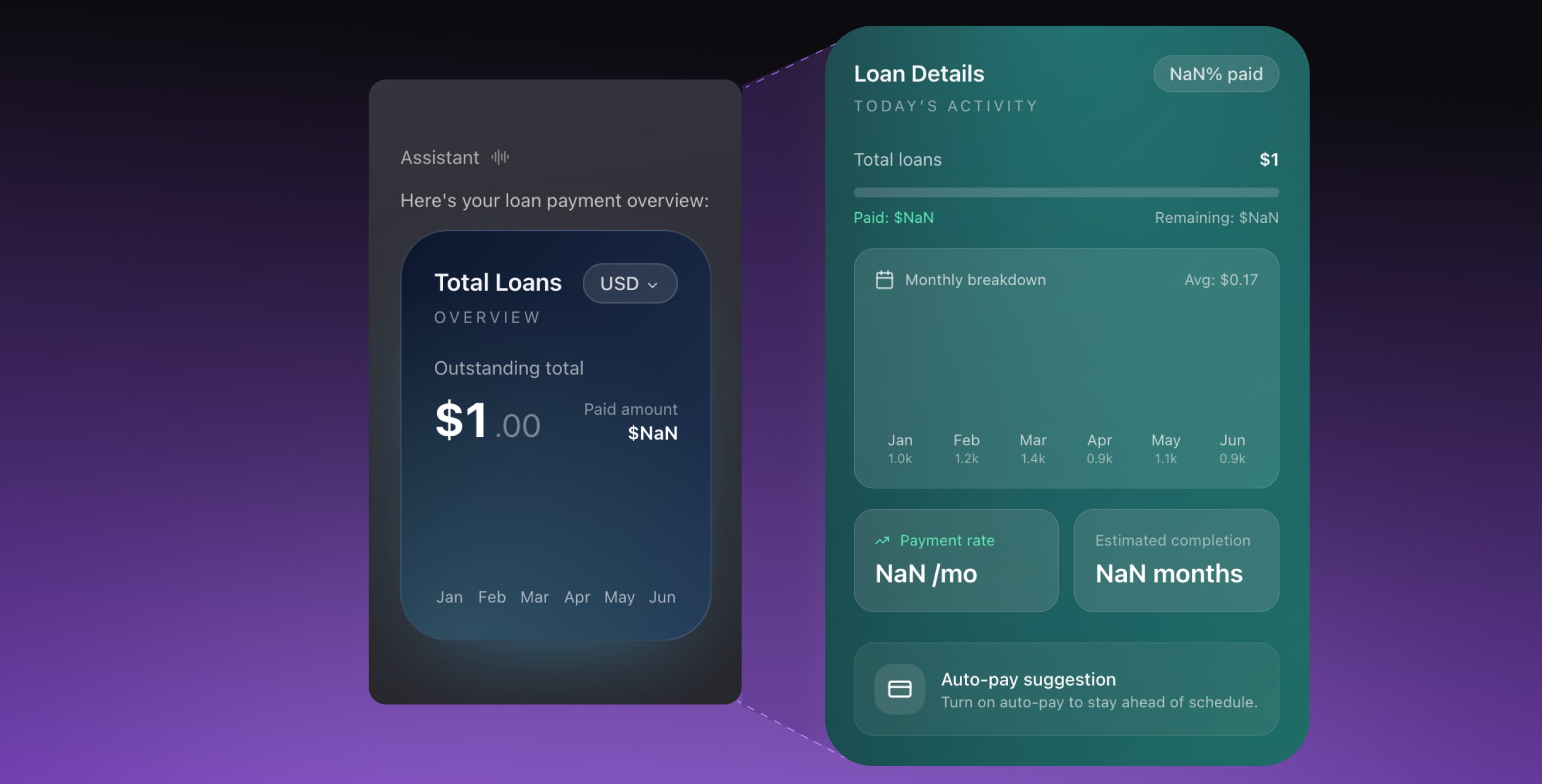

Financial guidance

Financial information can overwhelm users, so the flow applied a focused-card model: instead of dashboards, the assistant surfaced one actionable insight at a time. Cards were generated contextually inside the conversation:

- smart tips with suggested next actions;

- history snapshots showing month-over-month progress;

- voice-triggered charts (e.g., “Show me my loan history”).

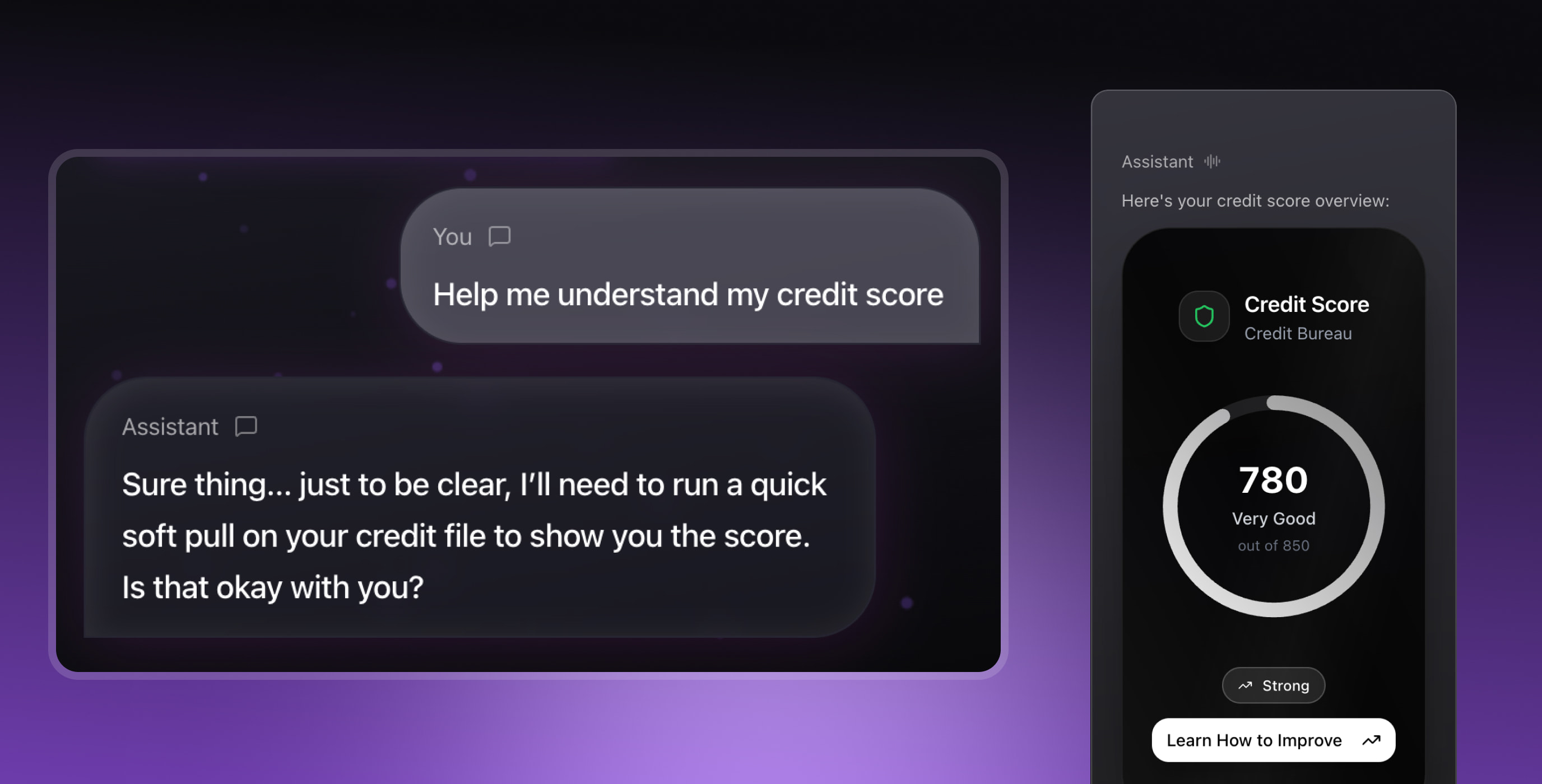

Trust & privacy

Sensitive financial steps needed a transparent-action pattern, making sure users always knew what the assistant was doing, why, and with what data. We designed trust-building behaviors directly into the flow:

- explicit consent prompts before credit checks;

- sensitive data shown only after a user approval event;

- consistent visual + voice cues during background actions.
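A consent gate of this kind can be sketched as a check that runs before any tool call. The tool names, the `gateToolCall` and `recordApproval` functions, and the prompt wording below are all hypothetical; the case study only states that credit checks and identity lookups required explicit consent.

```typescript
// Hypothetical tool names for illustration.
type ToolName = "credit_check" | "identity_lookup" | "emi_calculator";

// Tools assumed to need explicit consent before they run.
const SENSITIVE_TOOLS: ReadonlySet<ToolName> = new Set<ToolName>([
  "credit_check",
  "identity_lookup",
]);

type GateResult =
  | { status: "run"; tool: ToolName }
  | { status: "needs-consent"; tool: ToolName; prompt: string };

// Decide whether a tool may run now or must first surface a consent prompt.
function gateToolCall(tool: ToolName, approved: ReadonlySet<ToolName>): GateResult {
  if (SENSITIVE_TOOLS.has(tool) && !approved.has(tool)) {
    return {
      status: "needs-consent",
      tool,
      prompt: `Allow the assistant to run "${tool}"?`,
    };
  }
  return { status: "run", tool };
}

// Record a user approval event without mutating the previous set.
function recordApproval(approved: ReadonlySet<ToolName>, tool: ToolName): Set<ToolName> {
  const next = new Set<ToolName>(approved);
  next.add(tool);
  return next;
}
```

Returning a `needs-consent` result instead of throwing lets the UI render the prompt as a card in the thread and retry the same call once the user approves.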

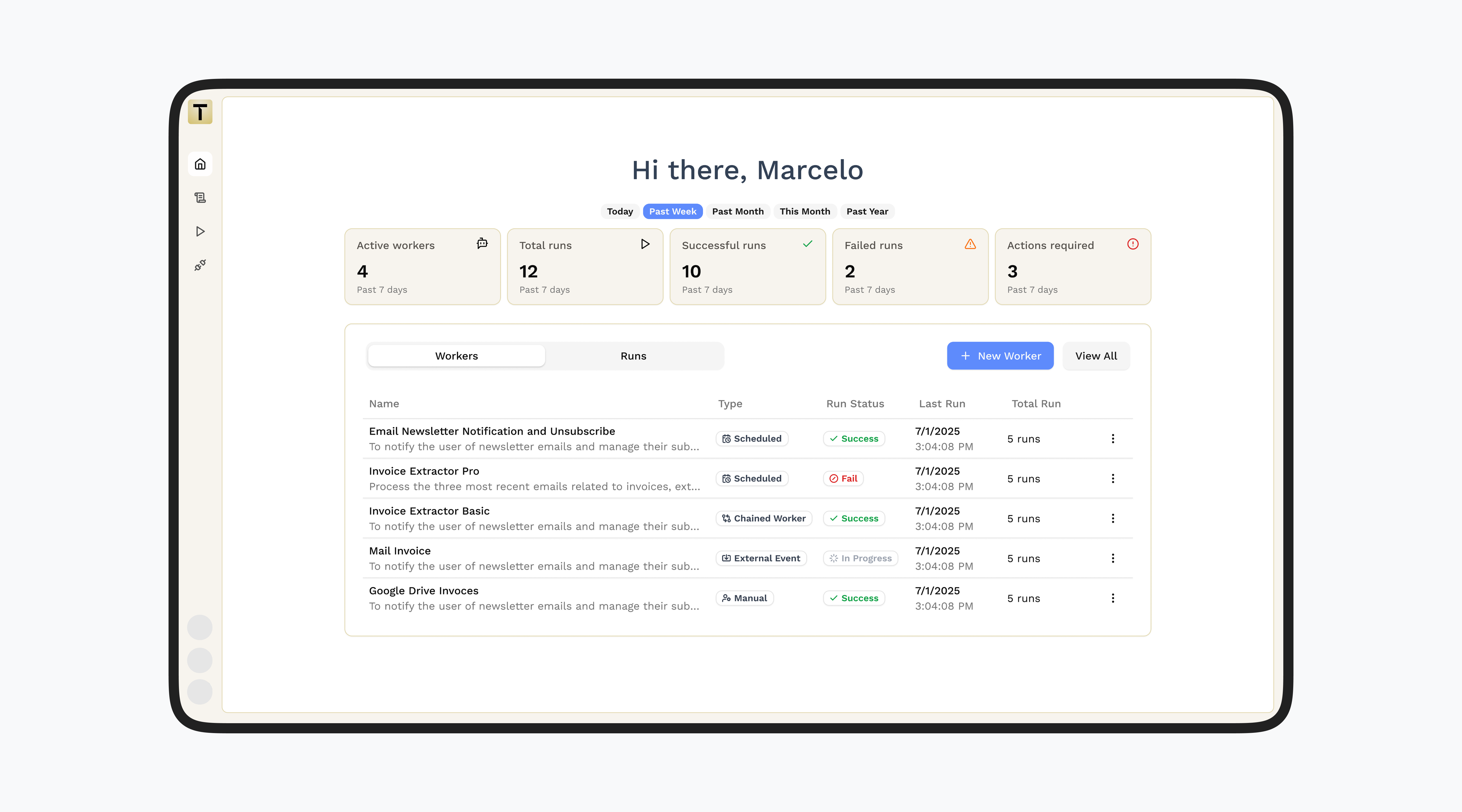

Testing & observability tools

To prototype quickly without sacrificing reliability, we built a testing and observability layer that let designers, engineers, and researchers inspect and trigger tools during live sessions.

These tools let us debug voice disconnects and validate complex flows long before wiring everything into an agent-driven path:

- Wizard-of-Oz trigger panel for invoking tools;

- real-time logs for events, errors, latency, and tool calls;

- manual override mode to test UI components independently;

- voice session metrics for turn reliability and disconnect patterns;

- full session replay for analyzing intent/behavior mismatches.
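The logging side of such a layer can be approximated by wrapping every tool invocation so that success, failure, and latency are recorded in one place. This is a minimal sketch with hypothetical names (`createSessionLog`, `run`, `slowCalls`); it handles synchronous tools only, and the injectable clock is an assumption made to keep the example testable.

```typescript
interface LogEvent {
  tool: string;
  ok: boolean;
  latencyMs: number;
  error?: string;
}

// The clock is injectable so tests can supply a deterministic time source.
function createSessionLog(now: () => number = () => Date.now()) {
  const events: LogEvent[] = [];

  // Run a tool, whether triggered by the agent or manually from a panel,
  // and record the outcome and latency either way.
  function run<T>(tool: string, fn: () => T): T {
    const start = now();
    try {
      const result = fn();
      events.push({ tool, ok: true, latencyMs: now() - start });
      return result;
    } catch (err) {
      const error = err instanceof Error ? err.message : String(err);
      events.push({ tool, ok: false, latencyMs: now() - start, error });
      throw err; // the caller still sees the failure
    }
  }

  // Calls slower than the given threshold, e.g. for latency review.
  function slowCalls(thresholdMs: number): LogEvent[] {
    return events.filter((e) => e.latencyMs > thresholdMs);
  }

  return { events, run, slowCalls };
}
```

Since the event list is ordered, the same records could in principle drive a session replay view, though the case study does not describe how replay was built.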

