Most banking chatbots still feel like talking to an IVR from 2005.

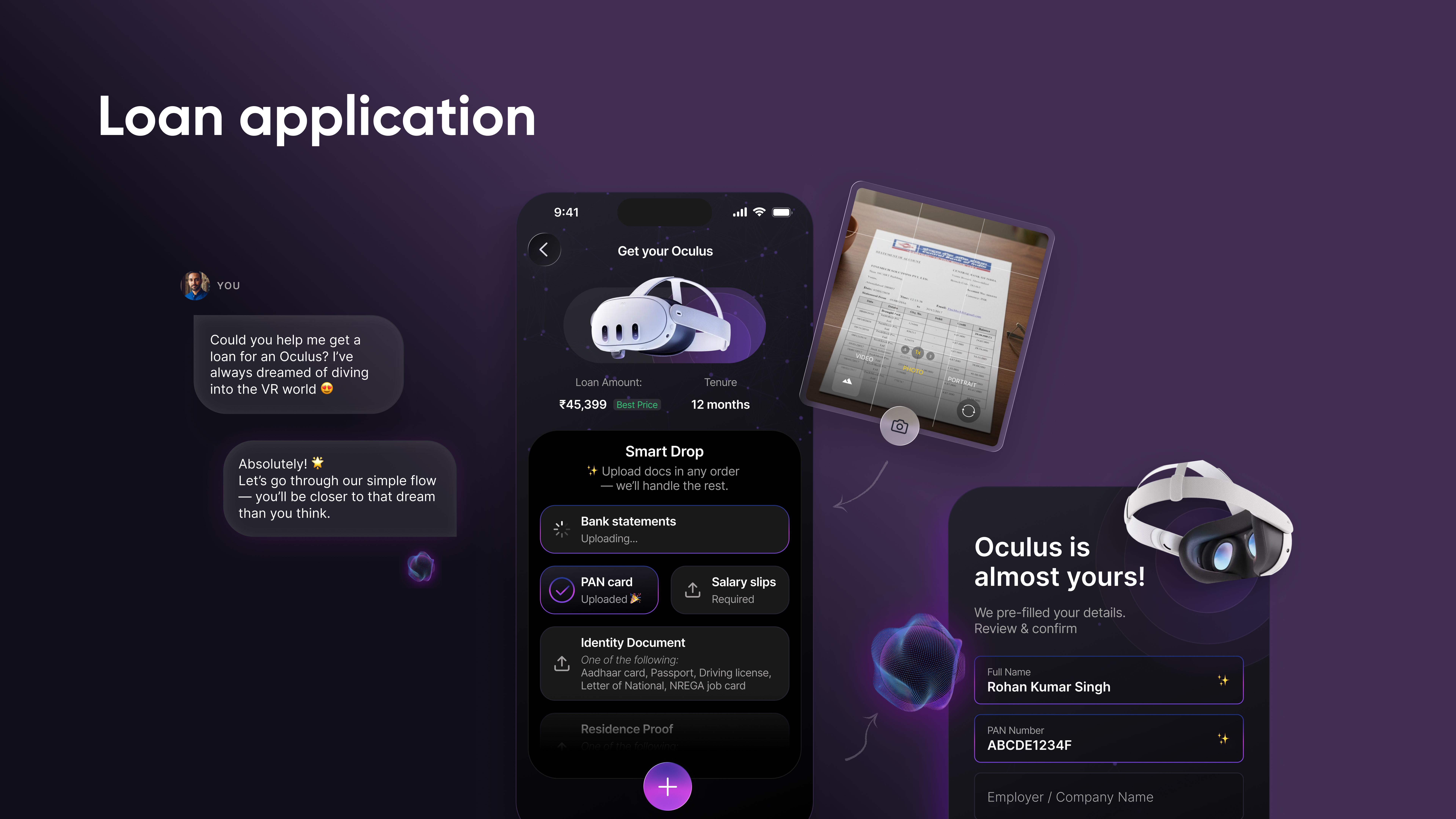

You ask a simple question, it offers a menu. You try voice, it speaks back like a voicemail. And when it’s time to actually compare offers or confirm a decision, the experience jumps into a form somewhere else.

In 2025, we took a different path. Our client, a consumer finance platform serving over 100M users, needed an answer to one fundamental question:

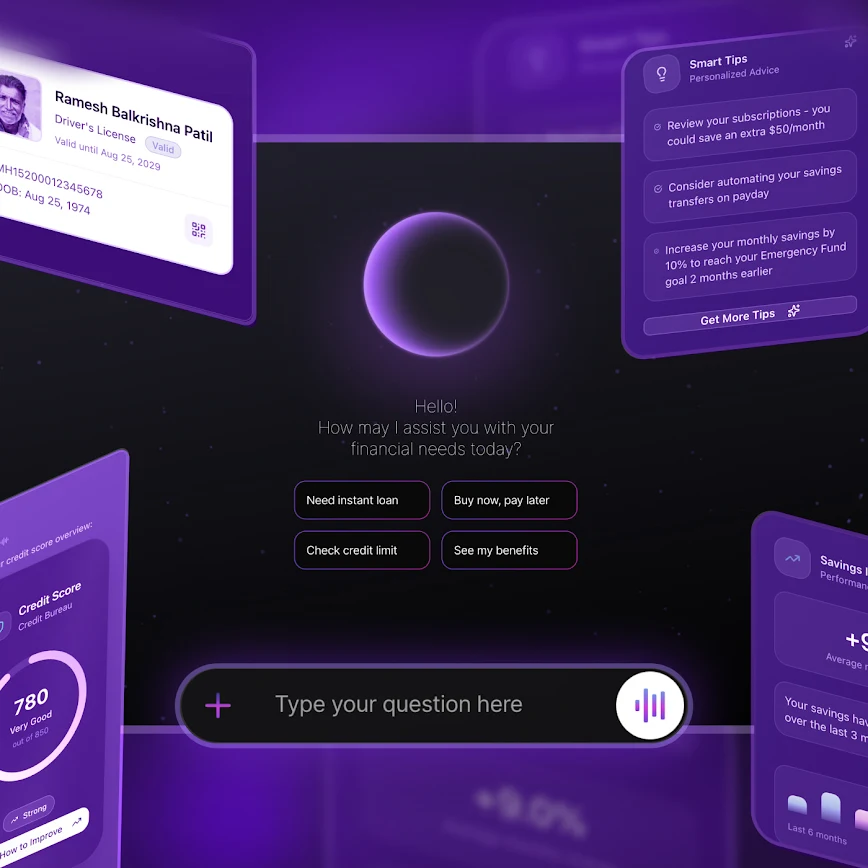

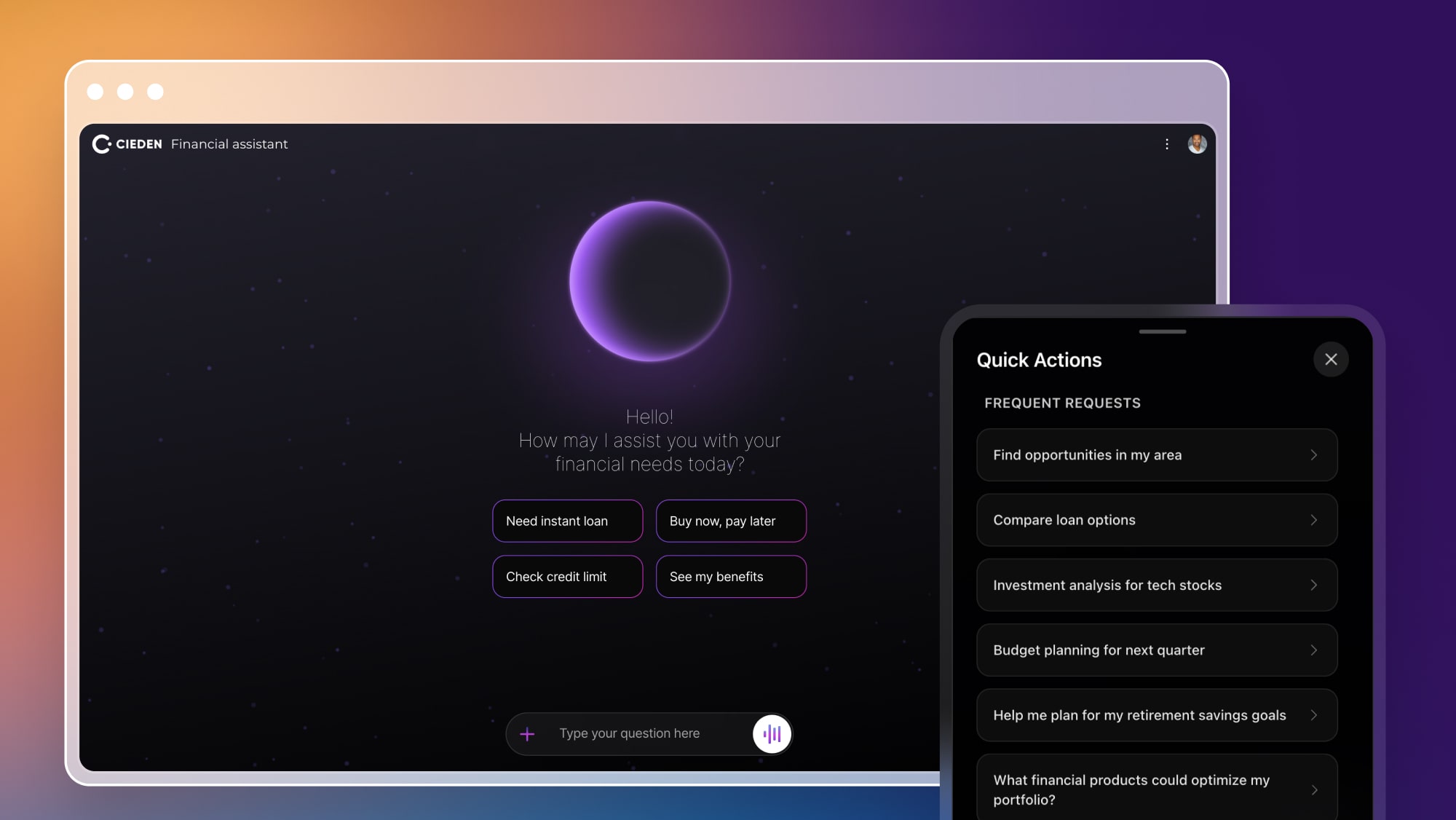

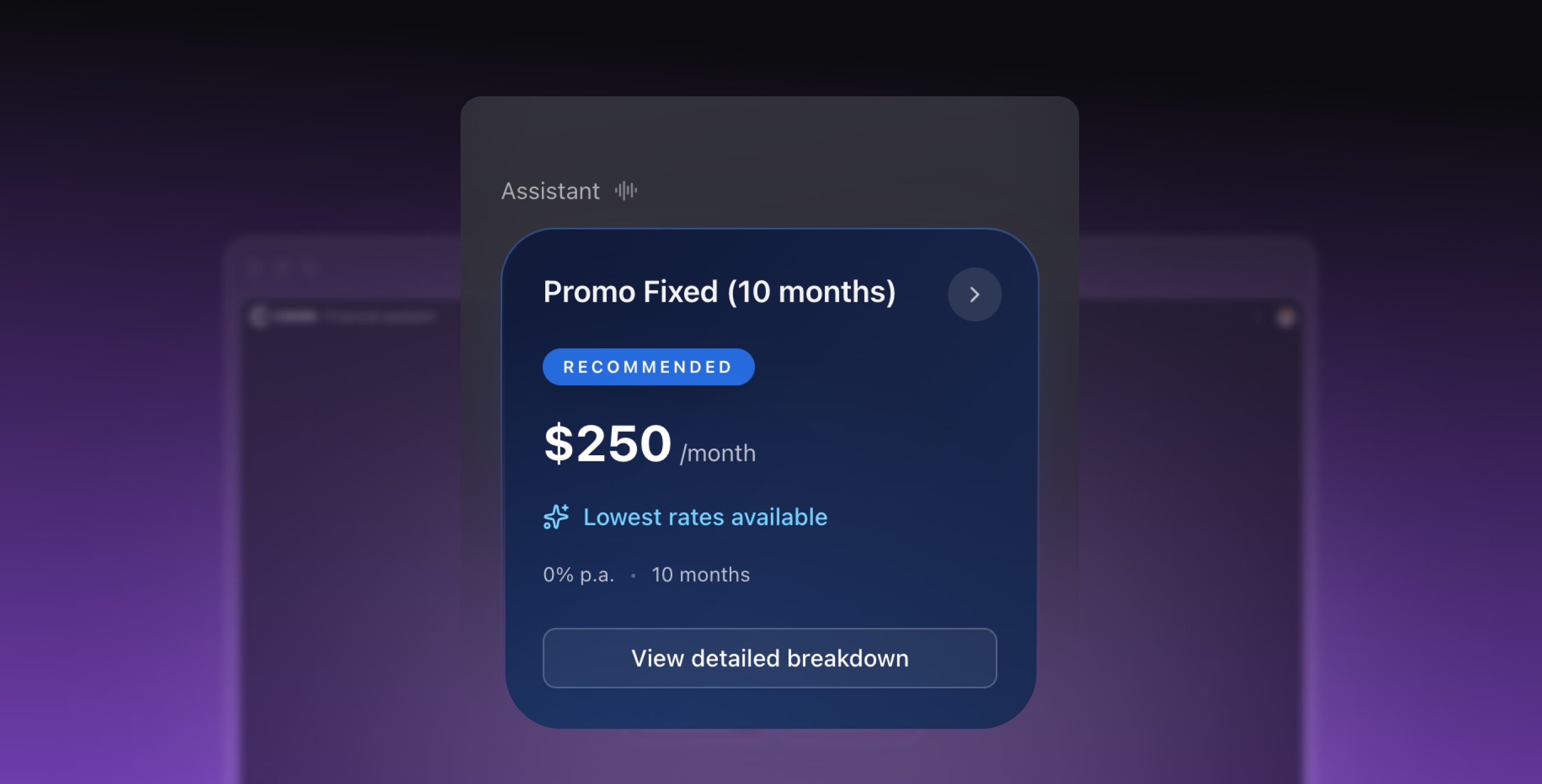

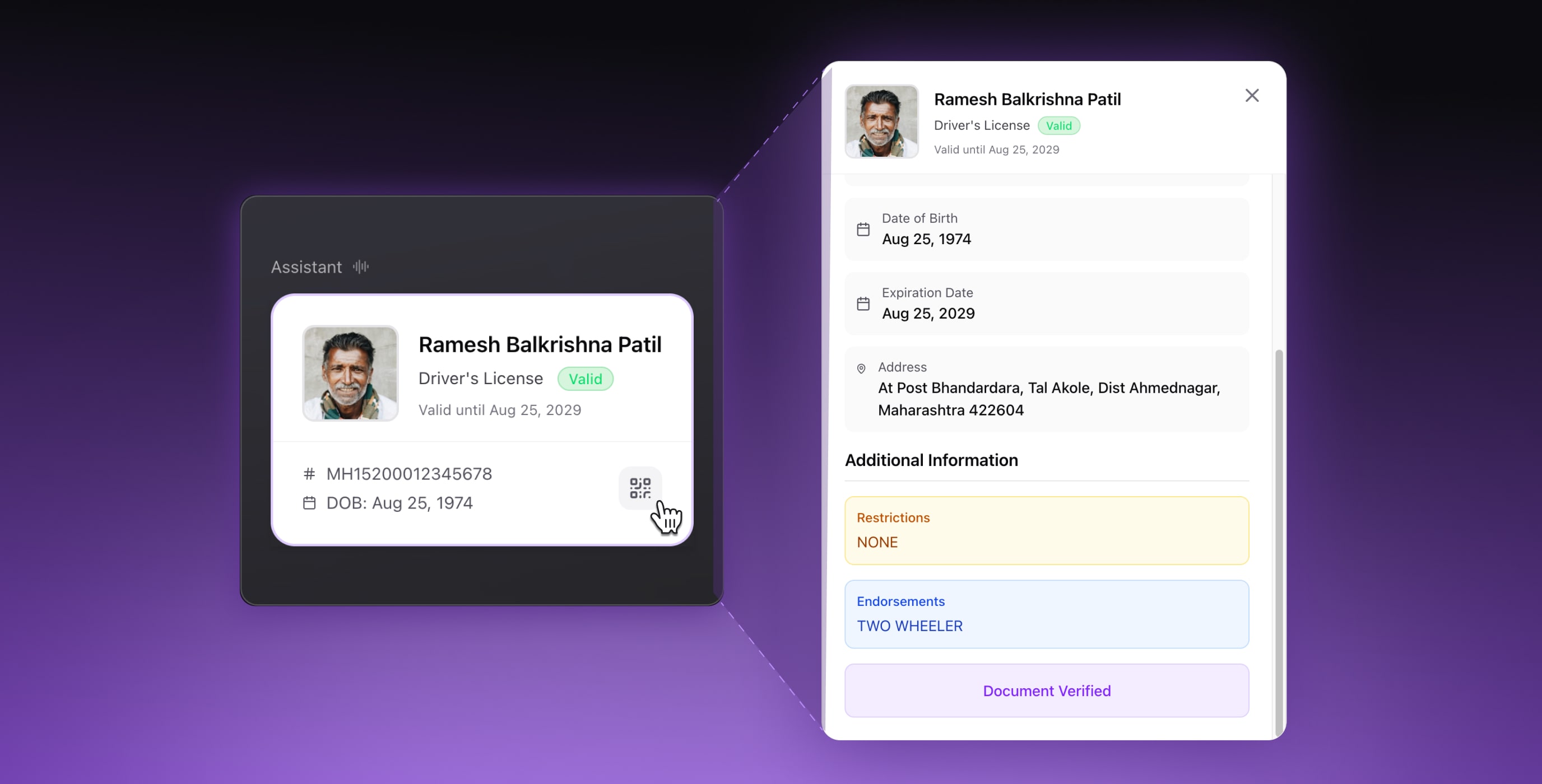

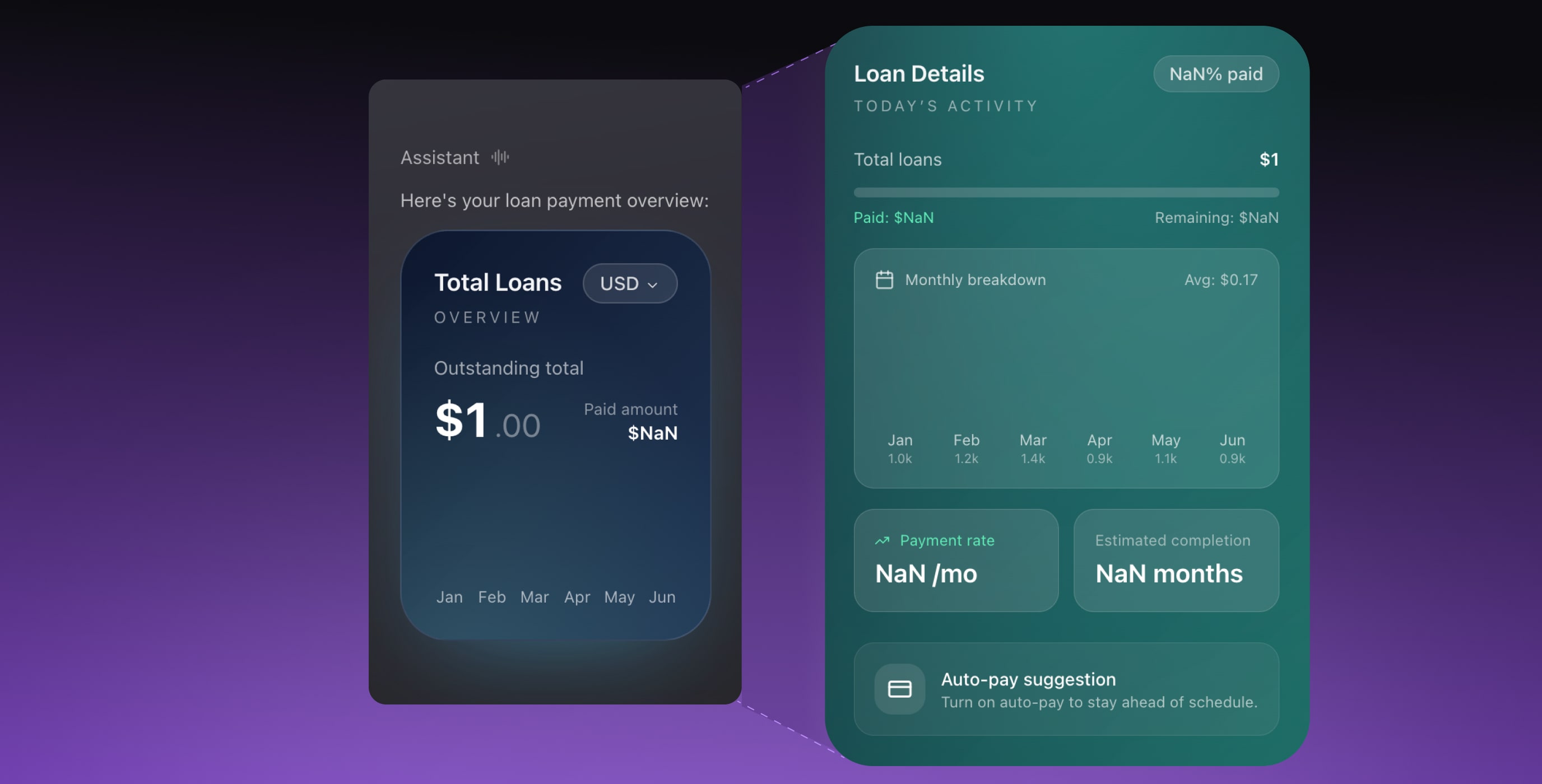

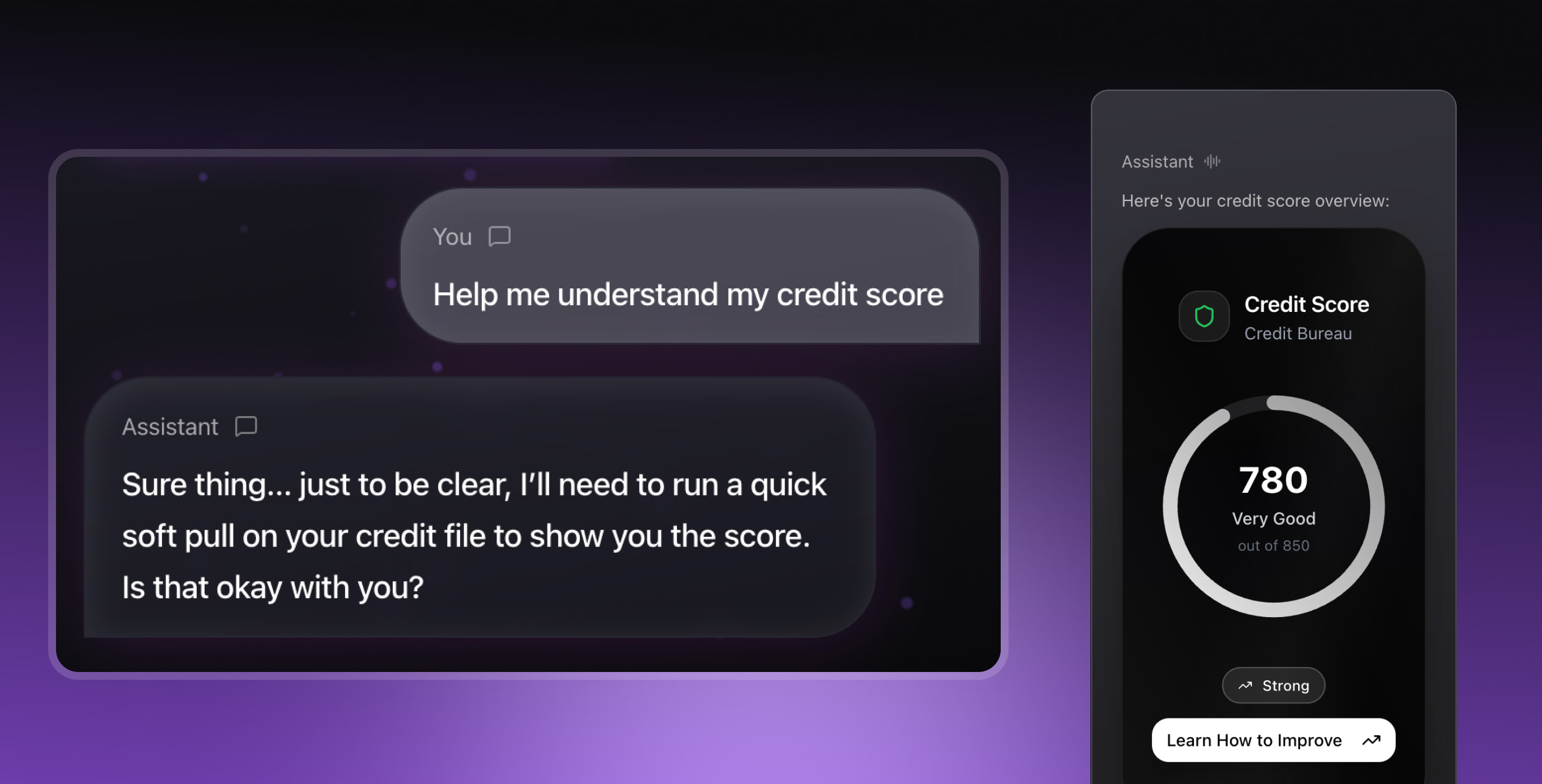

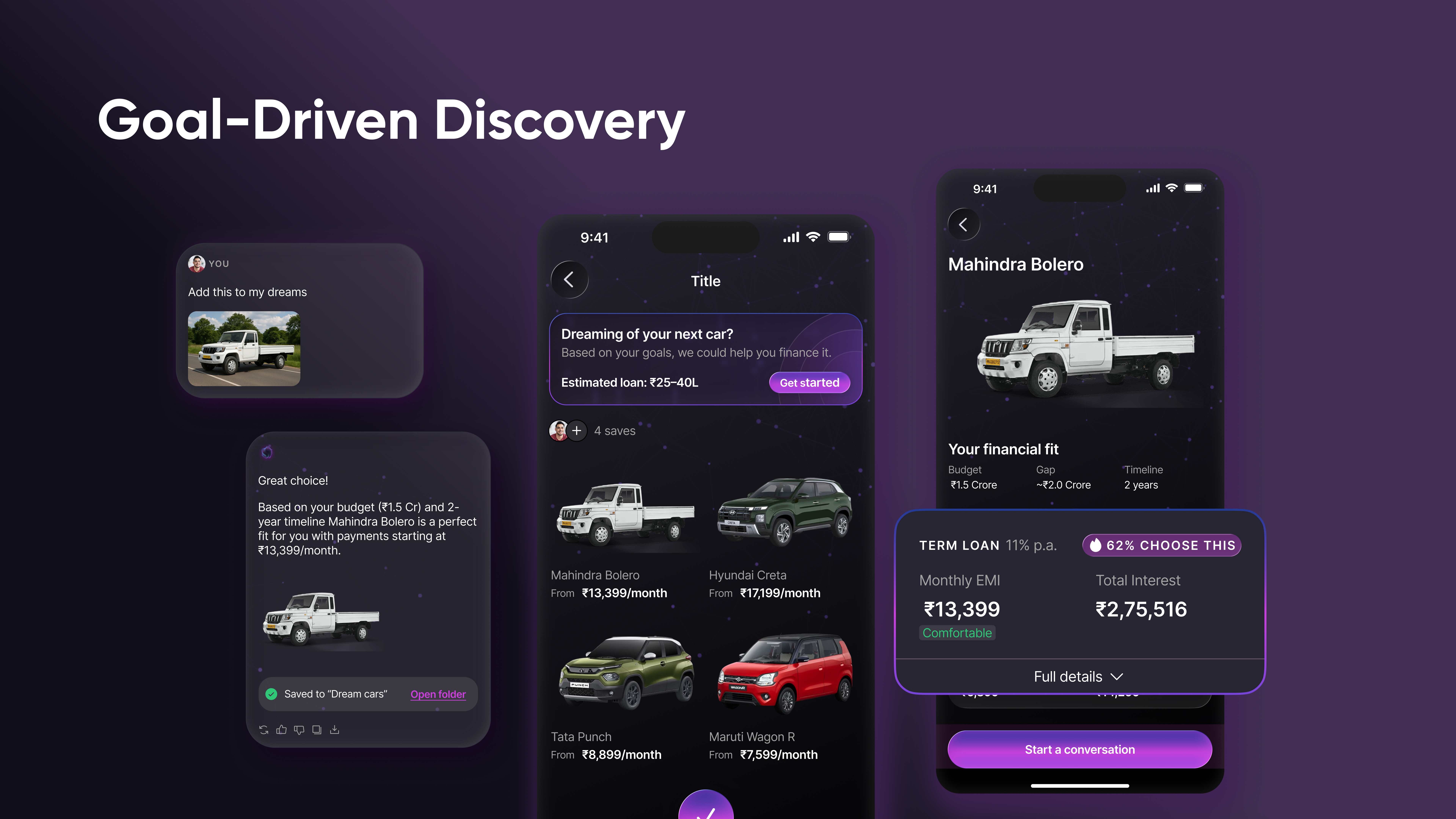

Could a person start a loan conversation by voice, review details visually, type when needed, and never feel like they switched modes?

Enter FinPilot: a prototype built to answer that question. The goal was to redefine conversational banking for real financial decisions, blending the speed of voice with the visual clarity of a screen.

The hypothesis was simple:

- voice is great for exploring (“What can I afford?”);
- but people trust what they can see.
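One way to make this hypothesis concrete is to separate *input* from *output*. Every turn updates one shared conversation state, whether it arrives by voice, typing, or a tap, and a routing rule then decides how to present the reply. The sketch below is a minimal illustration under stated assumptions, not FinPilot's actual implementation. The `Modality` enum, the intent labels, `ConversationState`, and `route_output` are all hypothetical, and it assumes intent classification happens upstream.

```python
from dataclasses import dataclass, field
from enum import Enum


class Modality(Enum):
    VOICE = "voice"
    TEXT = "text"
    TAP = "tap"


# Hypothetical intent labels; a real system would get these from an NLU model.
EXPLORATORY_INTENTS = {"affordability_question", "general_question"}
DECISION_INTENTS = {"compare_offers", "confirm_loan"}


@dataclass
class Turn:
    modality: Modality
    intent: str
    slots: dict = field(default_factory=dict)


@dataclass
class ConversationState:
    # One shared state for all modalities, so moving from voice to typing
    # or tapping never resets what the user has already said.
    slots: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def apply(self, turn: Turn) -> None:
        self.slots.update(turn.slots)
        self.history.append(turn)


@dataclass
class Response:
    speak: bool
    show_card: bool


def route_output(turn: Turn) -> Response:
    """Decide how to present the reply to a single turn (hypothetical rule)."""
    if turn.intent in DECISION_INTENTS:
        # Decision-critical details (rates, totals, confirmations) always go
        # on screen; add a spoken summary only if the user was talking.
        return Response(speak=turn.modality is Modality.VOICE, show_card=True)
    # Exploratory or unknown intents: answer in the modality the user chose.
    return Response(speak=turn.modality is Modality.VOICE, show_card=False)


# Usage: start by voice, then tap to compare offers, without losing context.
state = ConversationState()

t1 = Turn(Modality.VOICE, "affordability_question", {"monthly_budget": 400})
state.apply(t1)
r1 = route_output(t1)  # Response(speak=True, show_card=False)

t2 = Turn(Modality.TAP, "compare_offers", {"term_months": 36})
state.apply(t2)
r2 = route_output(t2)  # Response(speak=False, show_card=True)

# state.slots now holds both answers: {"monthly_budget": 400, "term_months": 36}
```

The design choice here is that modality only affects presentation, never state: the budget spoken in the first turn is still available when the user taps through offers in the second.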

This article is the story of how we built that vision: what worked, what broke, and what multimodal banking looks like when it finally feels human.

Why conversational banking needs a screen (and why speed matters)

Banking is full of smart assistants that don’t really listen. Most voice or chat systems follow rigid scripts: a fixed sequence of questions that works only in one order. If you interrupt or try to confirm visually, they fall apart.

This is what many AI assistants still resemble in practice: