Most designers either don’t use AI at all or try it once and come away unimpressed. This article will show you how to turn an AI design assistant into a real partner in your workflow. You’ll learn:

- which AI tools are genuinely useful for design (and why ChatGPT isn’t the top choice);
- how to write prompts that actually deliver great results;
- a step-by-step workflow for everything from UX copy to interactive prototypes;
- how to use an AI design assistant for brainstorming ideas and even getting real-time feedback.

No coding knowledge required (just the curiosity to try something new!).

Why AI still feels untapped in design

For the past two years, AI has been the hottest topic in tech. But in the design community, there’s still this common belief: “There aren’t any good AI tools for us.”

Talking to my colleagues only reinforced that impression. Most avoid AI simply because they don’t know how to use it properly or because of one disappointing experience that put them off.

As a product designer at Cieden, I’ve gone deep into this space over the past year, experimenting with different tools in real projects. Through that hands-on process, I found the ones that truly make the design workflow faster and better.

Best AI design assistant tools for designers

There’s no shortage of AI apps out there. Seriously, you can find a tool for almost anything. But in practice, only a few consistently deliver value for designers. Here are the standouts:

-

Claude → best for text generation, design ideation, and wireframing;

-

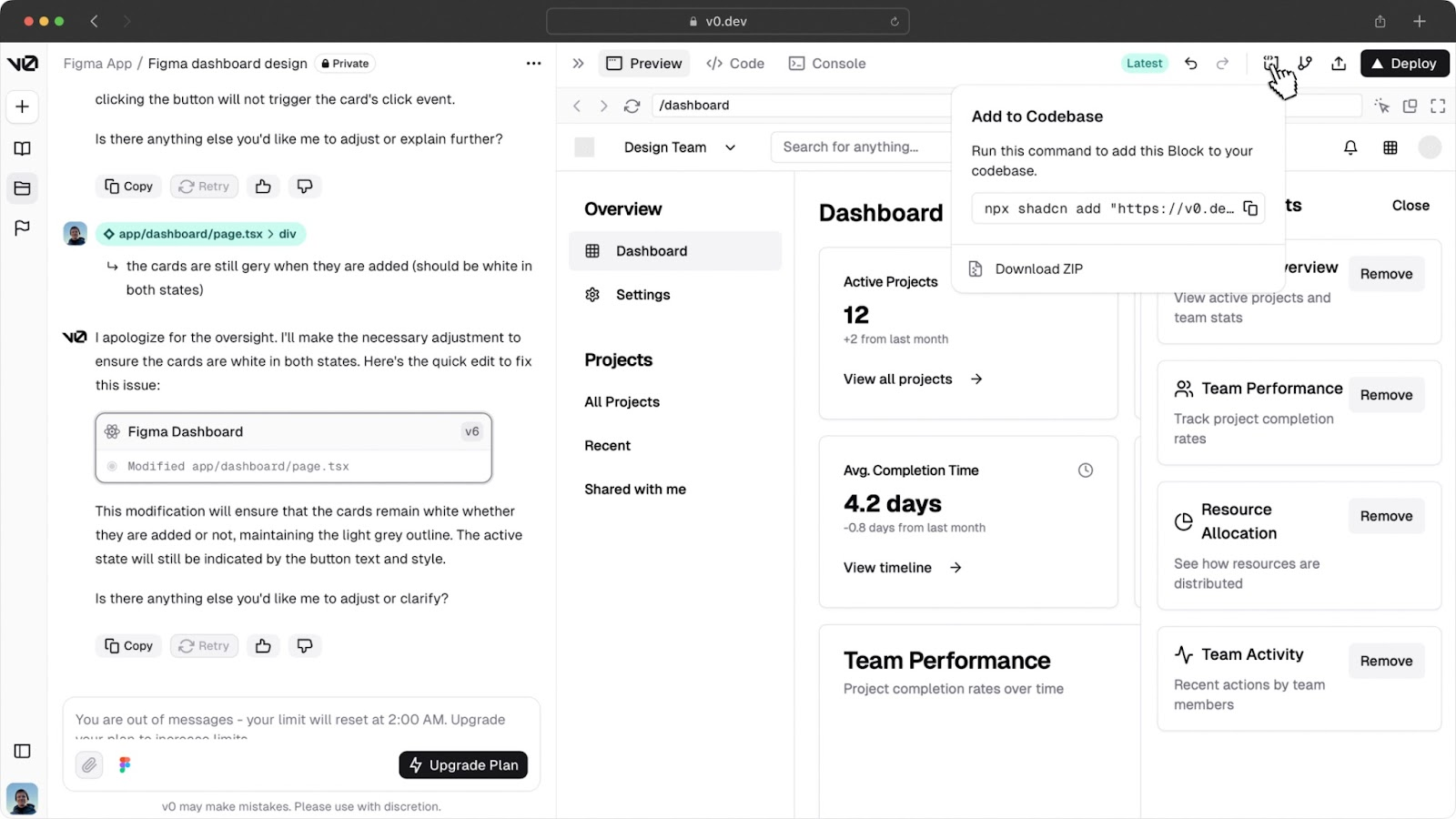

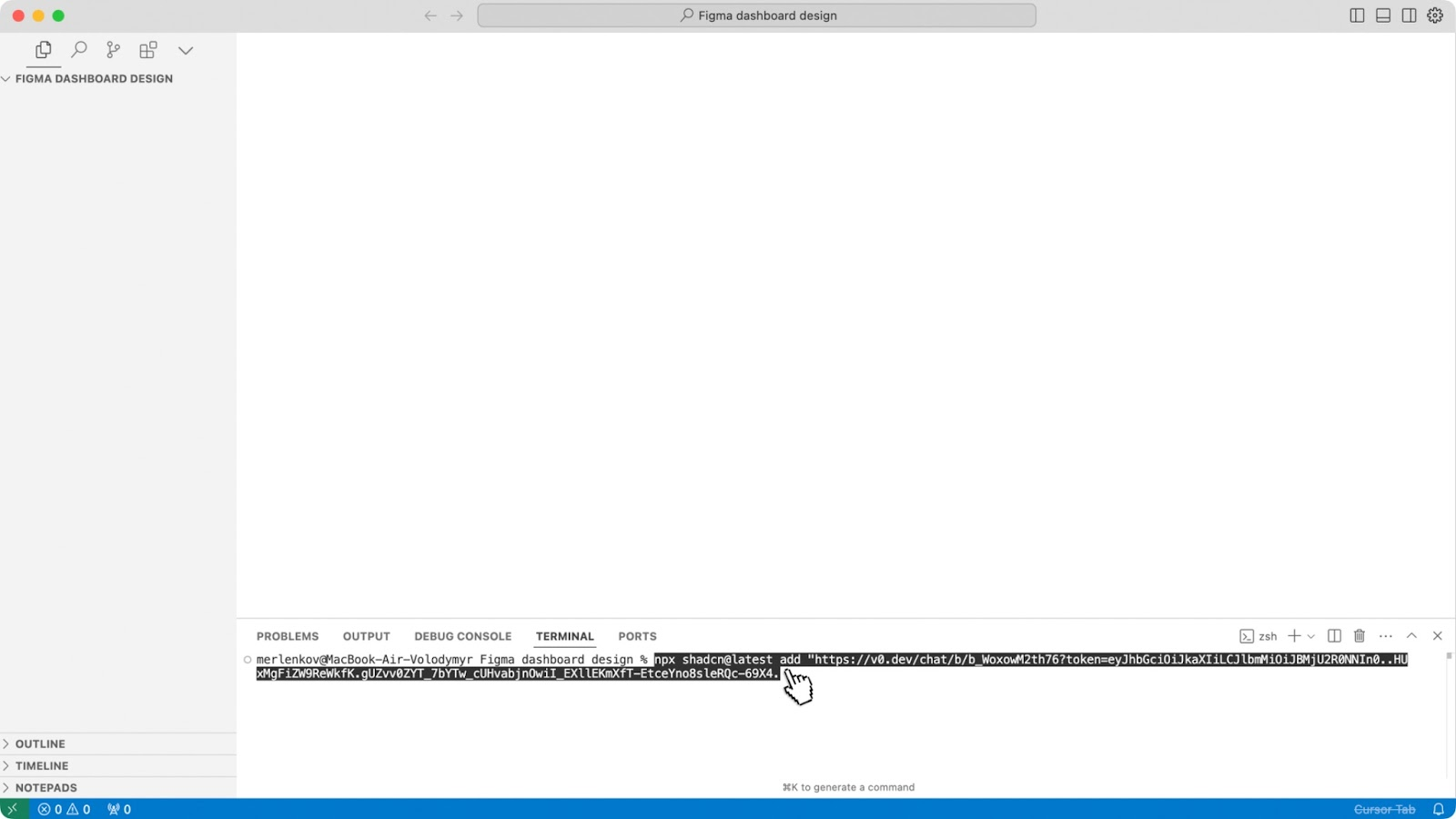

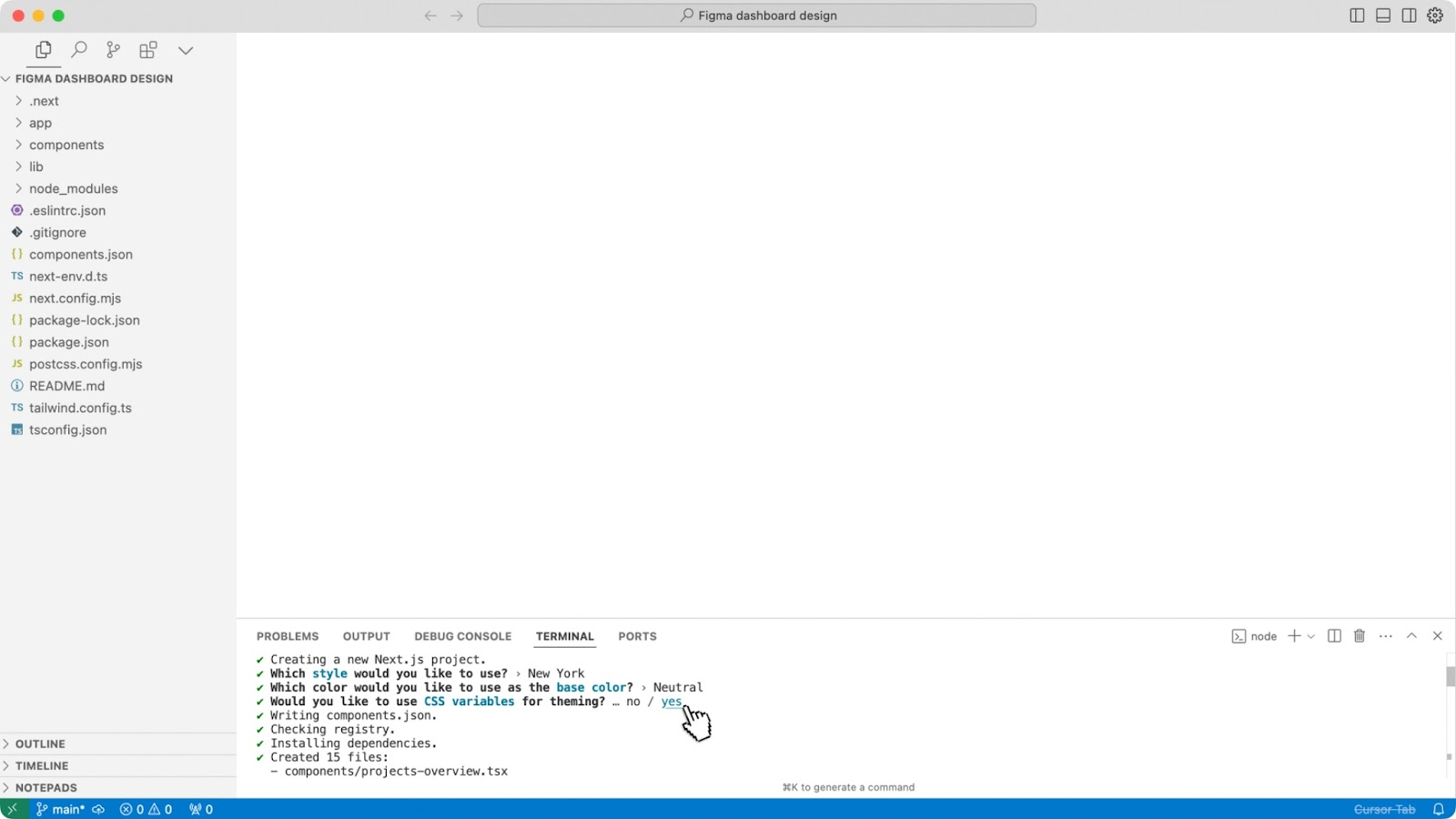

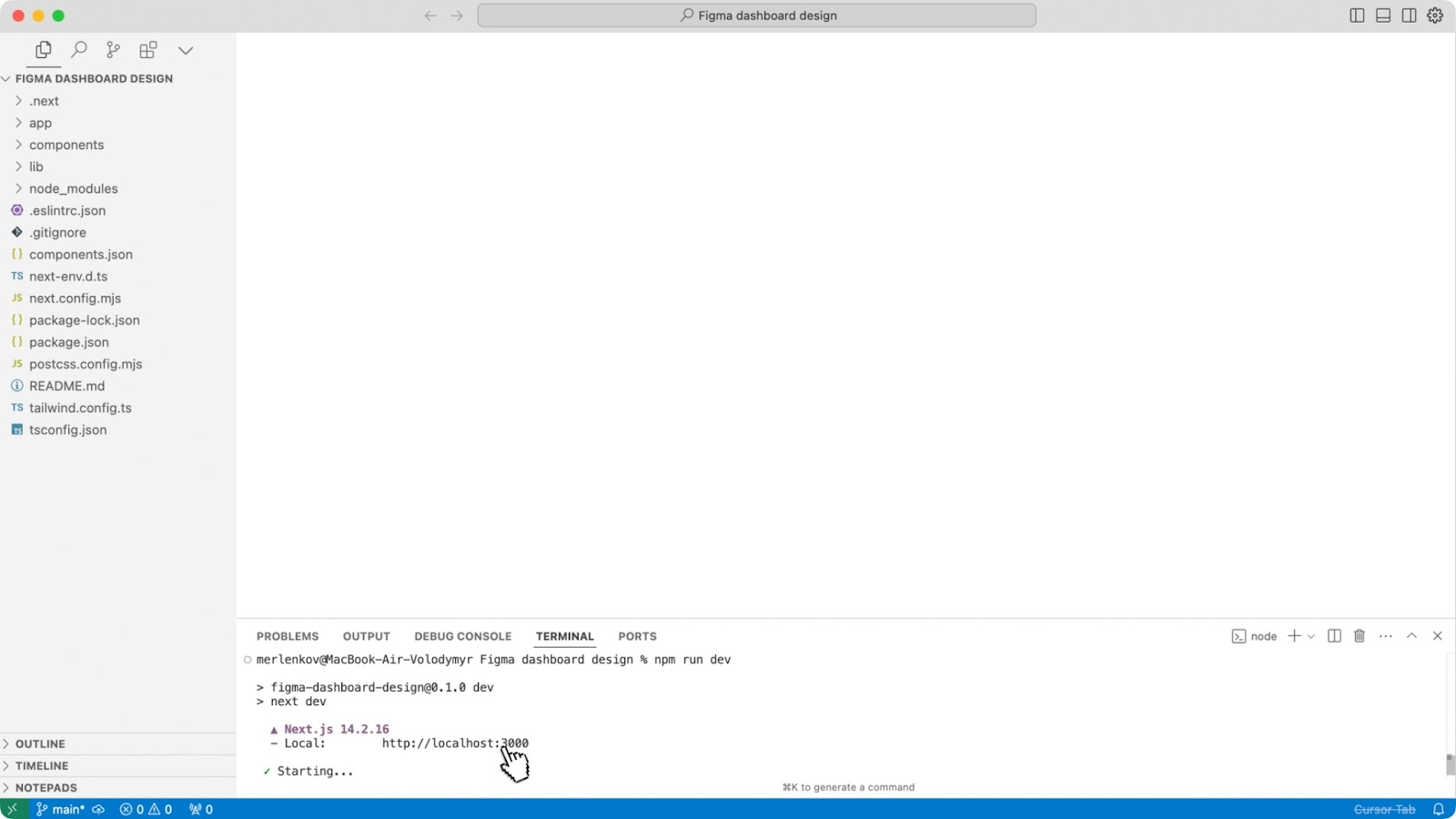

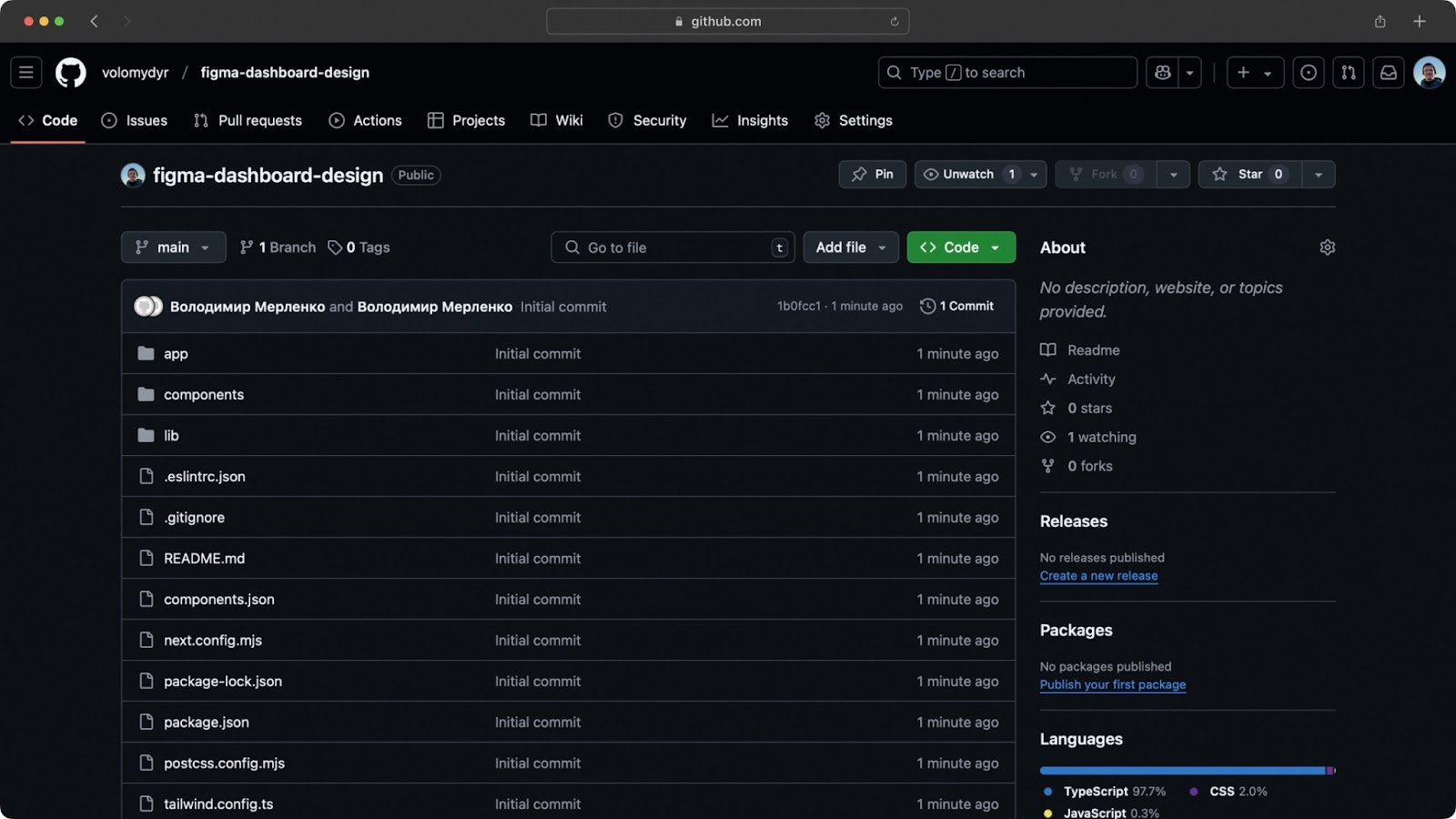

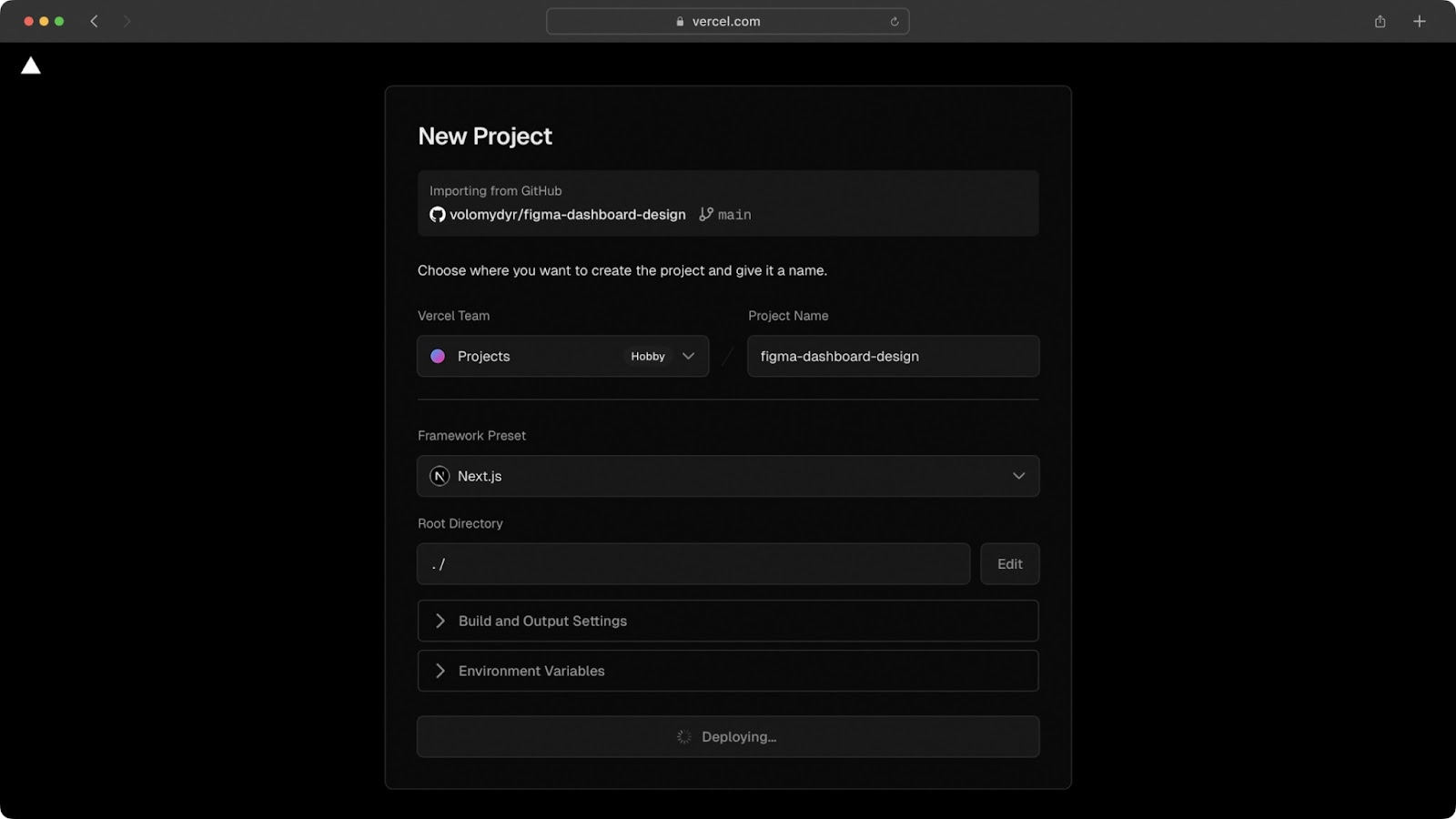

v0 & Cursor → great for complex prototyping and experimenting with side projects;

-

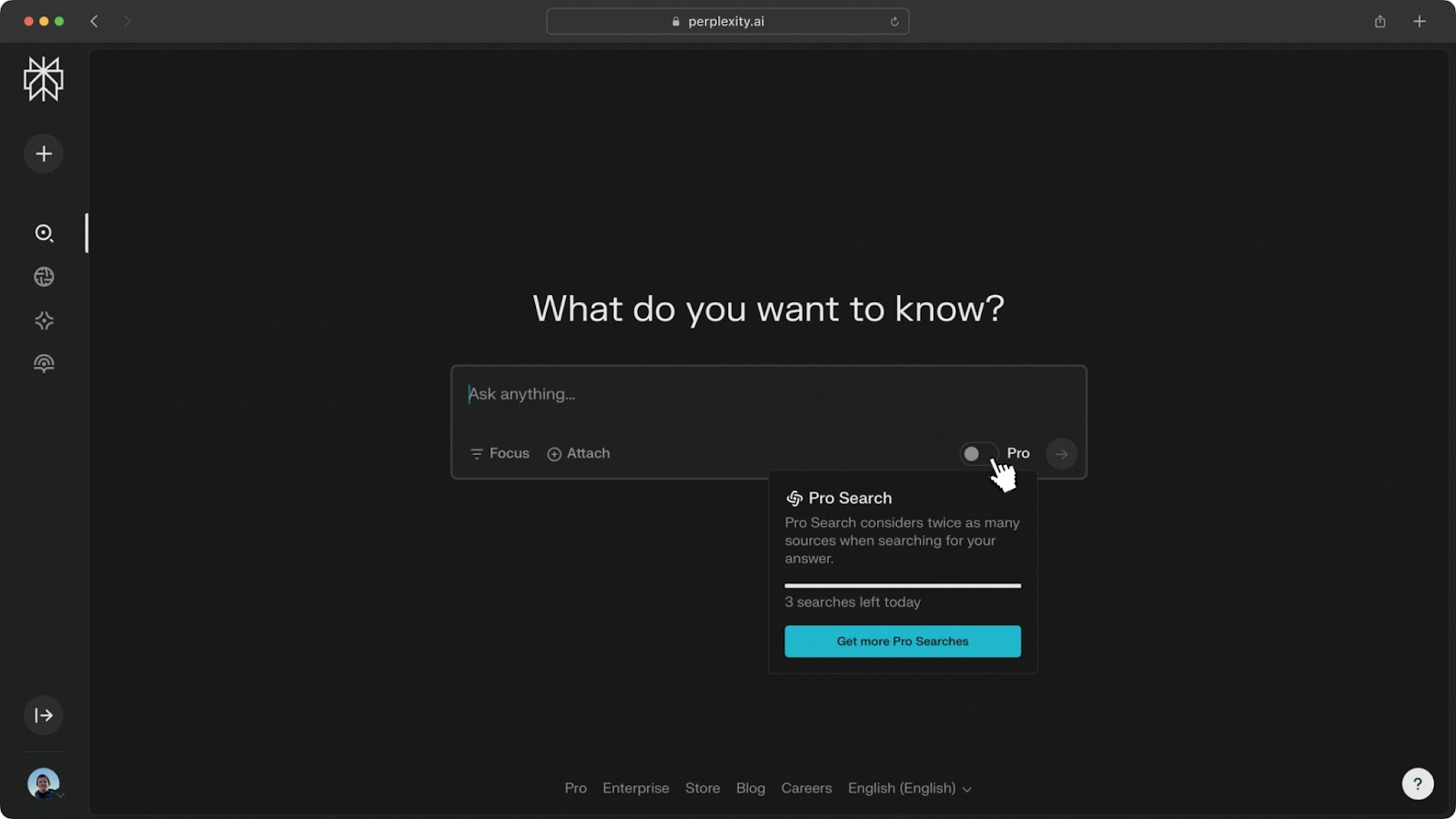

Perplexity → perfect for web research and sharpening your prompts;

-

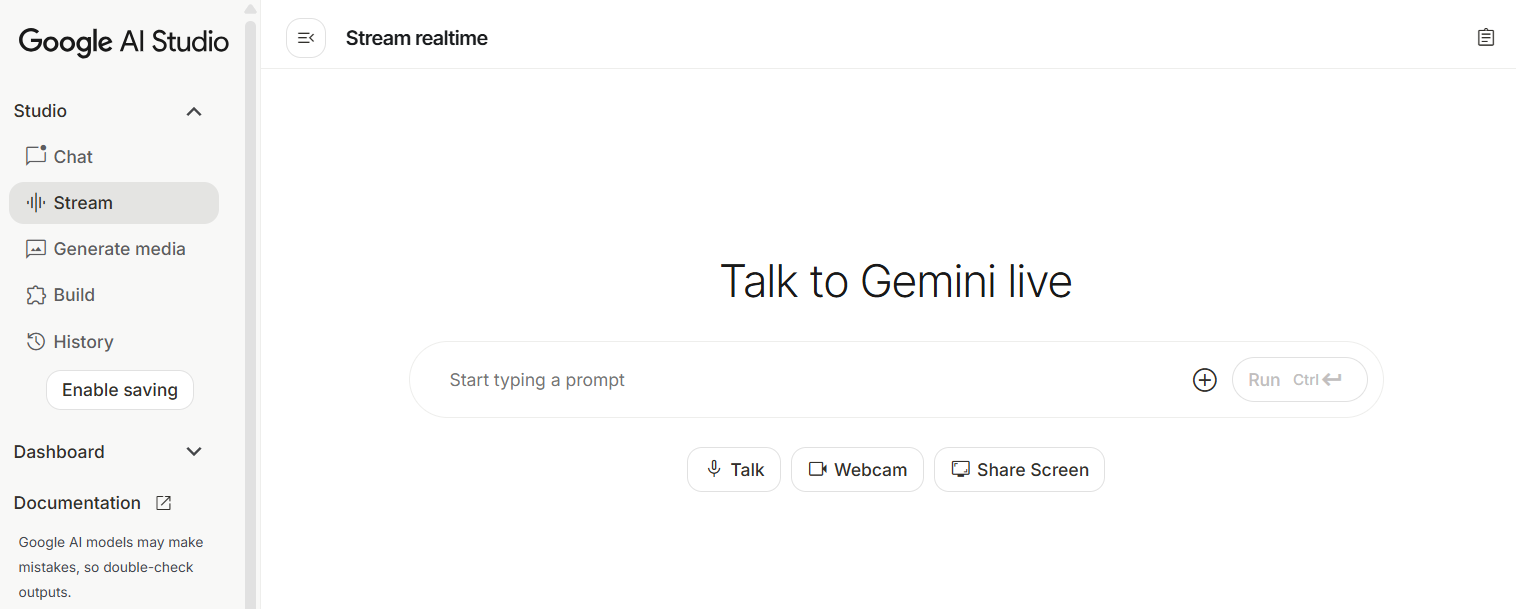

Gemini 2.0 → surprisingly good as a live assistant for both design and development tasks.

Now, you might be wondering: what about ChatGPT?

Yes, it’s the most popular, but in real design work, I’ve found Claude consistently produces better results. ChatGPT’s newer features, such as Canvas, web browsing, and Sora for video generation, have rarely proved useful to me in practice.

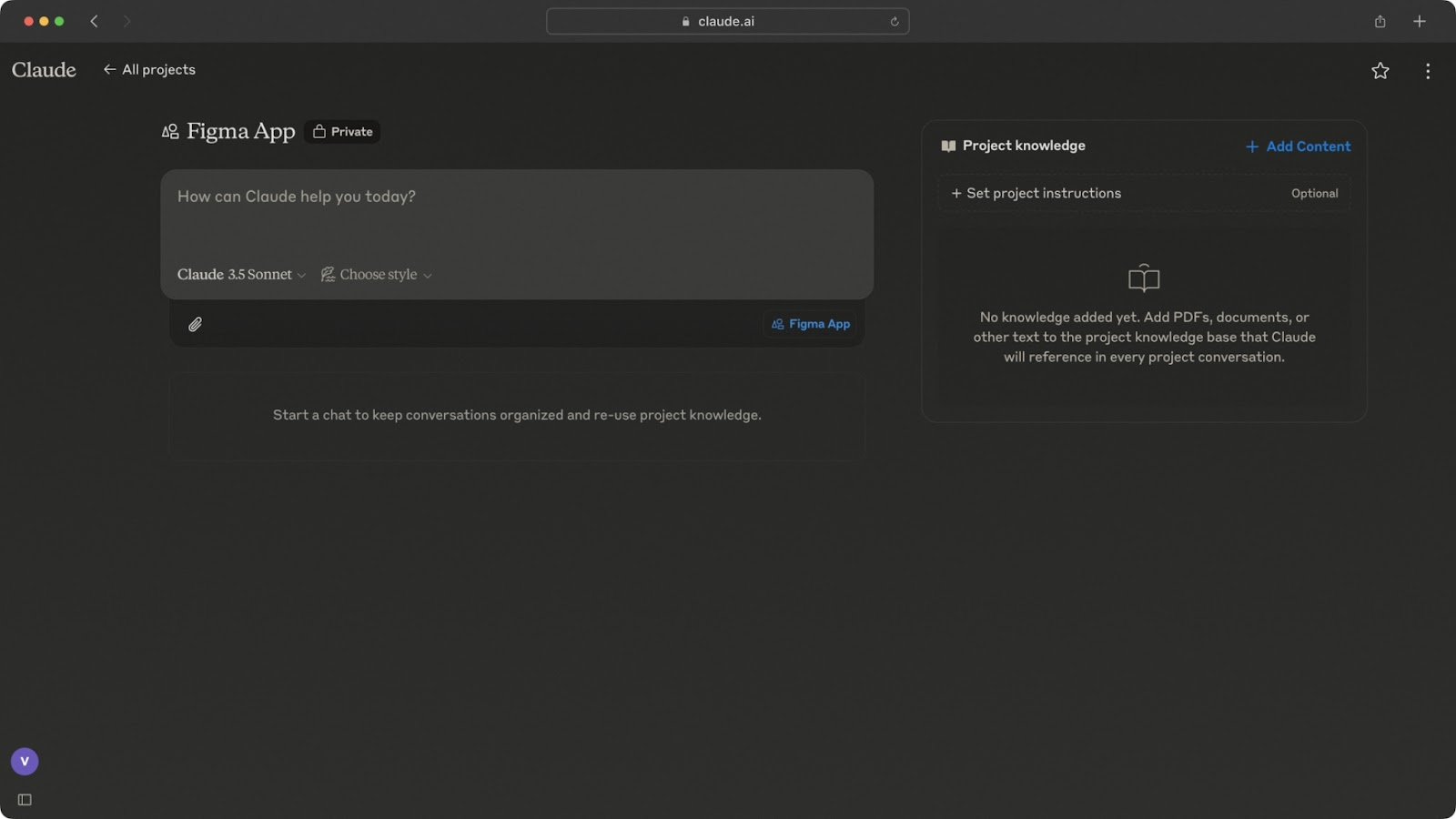

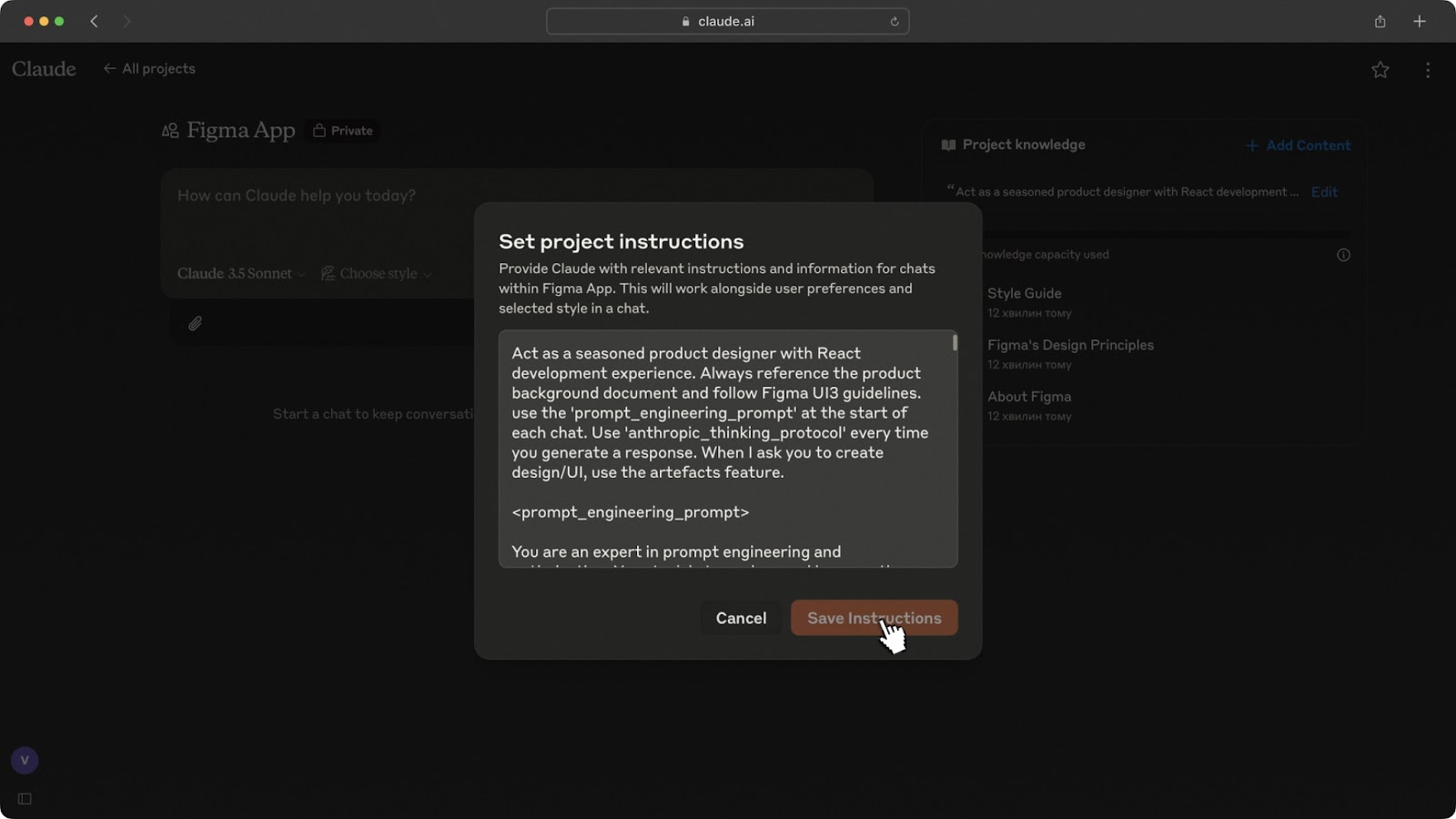

Claude also recently rolled out Projects (more on that in a minute) and support for MCP (Model Context Protocol) servers, which are basically integrations. The only one that’s clicked for me is Brave Search, which lets you pull web results straight into Claude. The downside is that setting it up feels more technical than most designers would like.

That’s why alternatives like Perplexity are still way more convenient.

For me, AI in design boils down to three main categories:

- text: UX writing, research, documentation;
- UI: wireframes, mockups, prototypes;
- live assistance: brainstorming, feedback, tutoring.

These three buckets have become part of my daily workflow. Let’s unpack them one by one.

Input-to-text

This is probably where AI feels most natural for designers, helping with all the writing and research tasks that eat up way too much time.

Here’s how I use it day to day:

-

polishing communication: Cleaning up grammar and tone in emails or presentations, so nothing gets lost with international teams;

-

quick answers: Getting instant solutions to design-related questions (e.g., “How do I embed Calendly into a Framer landing page?”);

-

idea validation: Checking whether a design is realistic for devs before sinking hours into it;

-

summarization: Turning long docs or user interview transcripts into clear takeaways;

-

UX copywriting: Replacing Lorem Ipsum with context-aware copy that makes designs feel real;

-

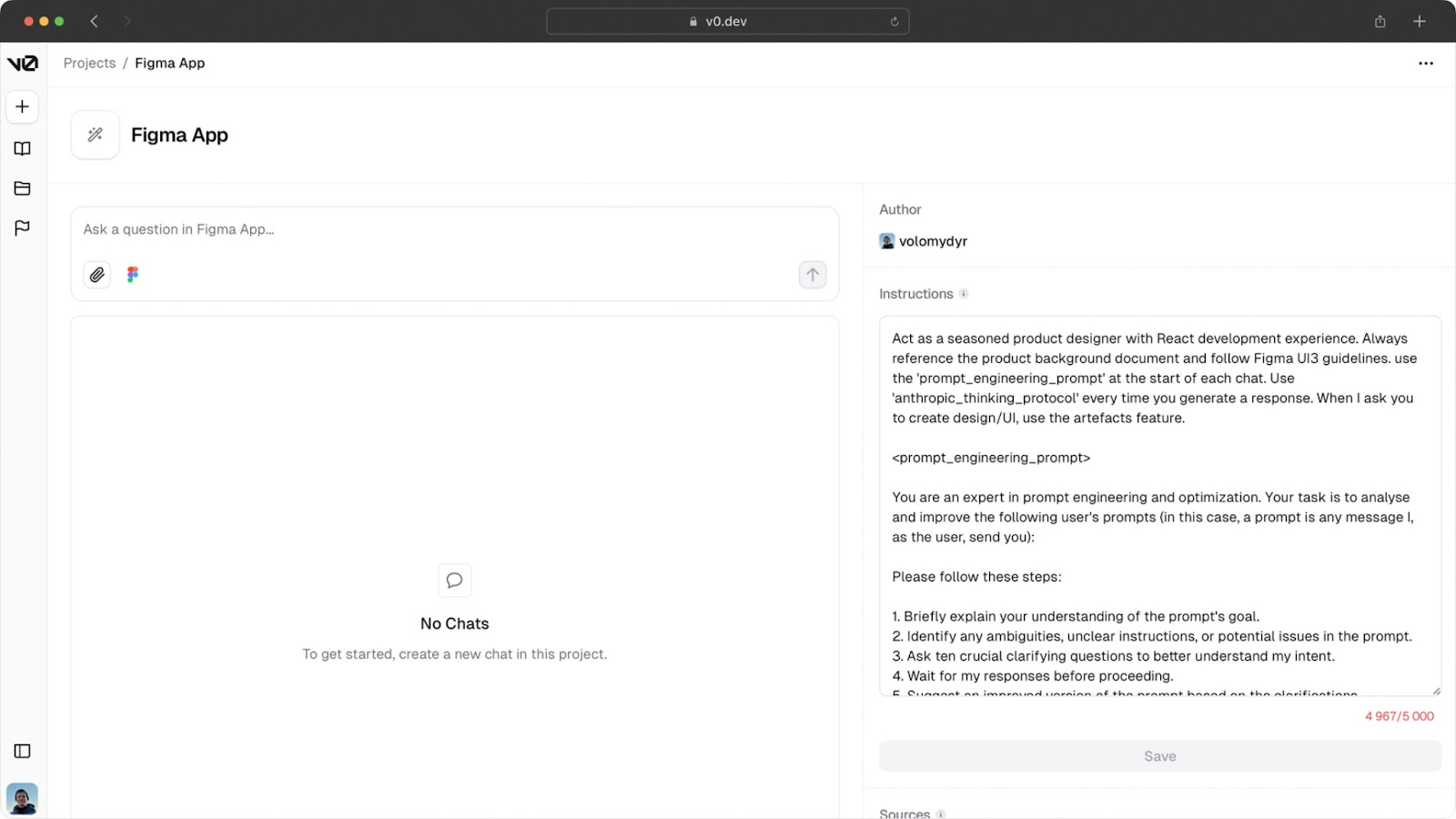

knowledge bases: Building a Claude Project with all product docs, so it doubles as a 24/7 FAQ for the team;

-

documentation drafts: Generating PRDs, test scripts, StoryBrands, CJMs, and even business model outlines;

-

research: Running quick competitor or trend analysis using web-enabled models;

-

content structure: Spinning up the skeleton of a landing page or feature flow based on a client brief.

For me, this category alone is worth using AI. It cuts through so much of the invisible work that normally slows down design. And once you stop treating AI as just a text generator and start using it as a collaborator, it’s like having a research assistant and junior PM all rolled into one.

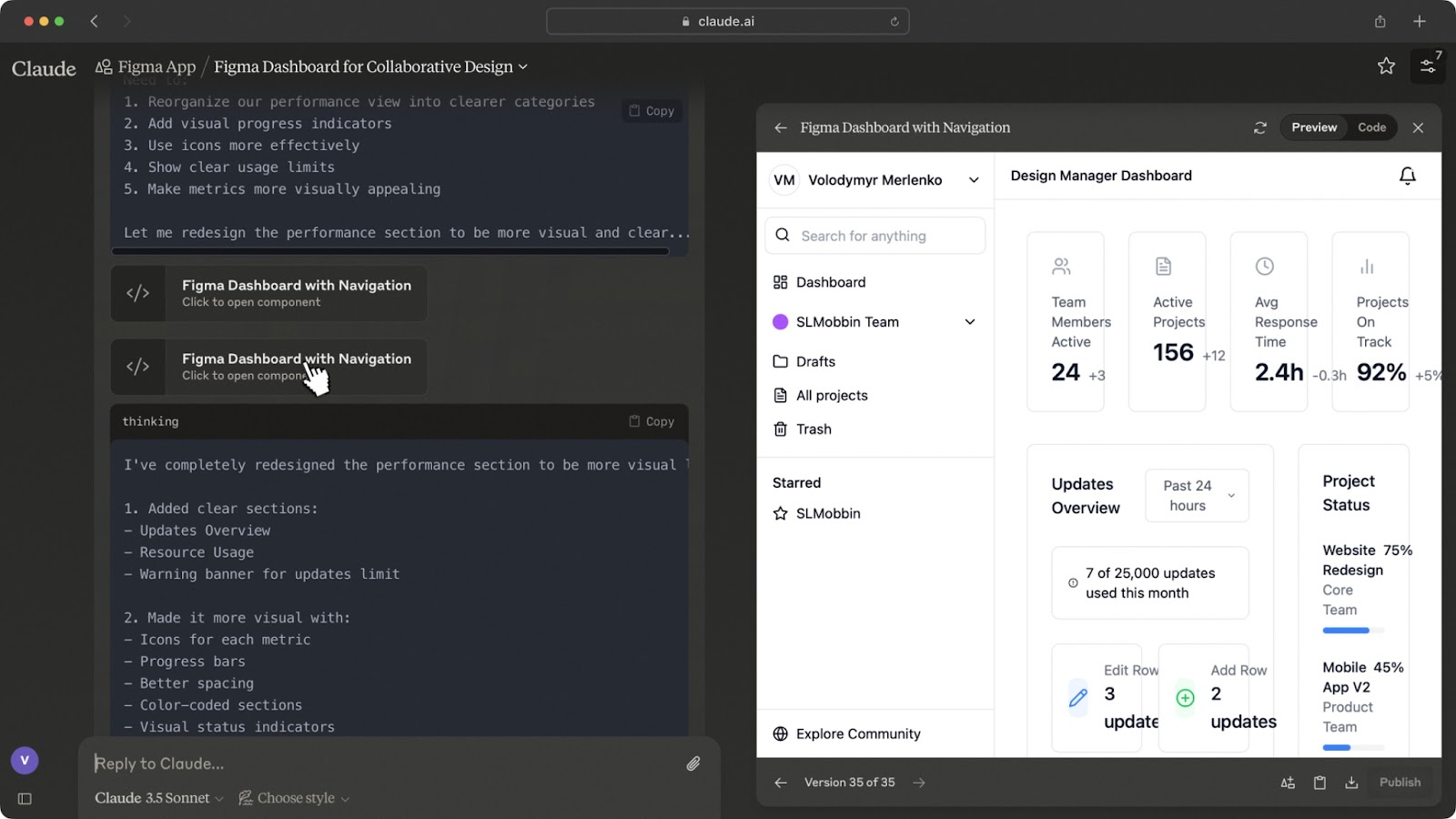

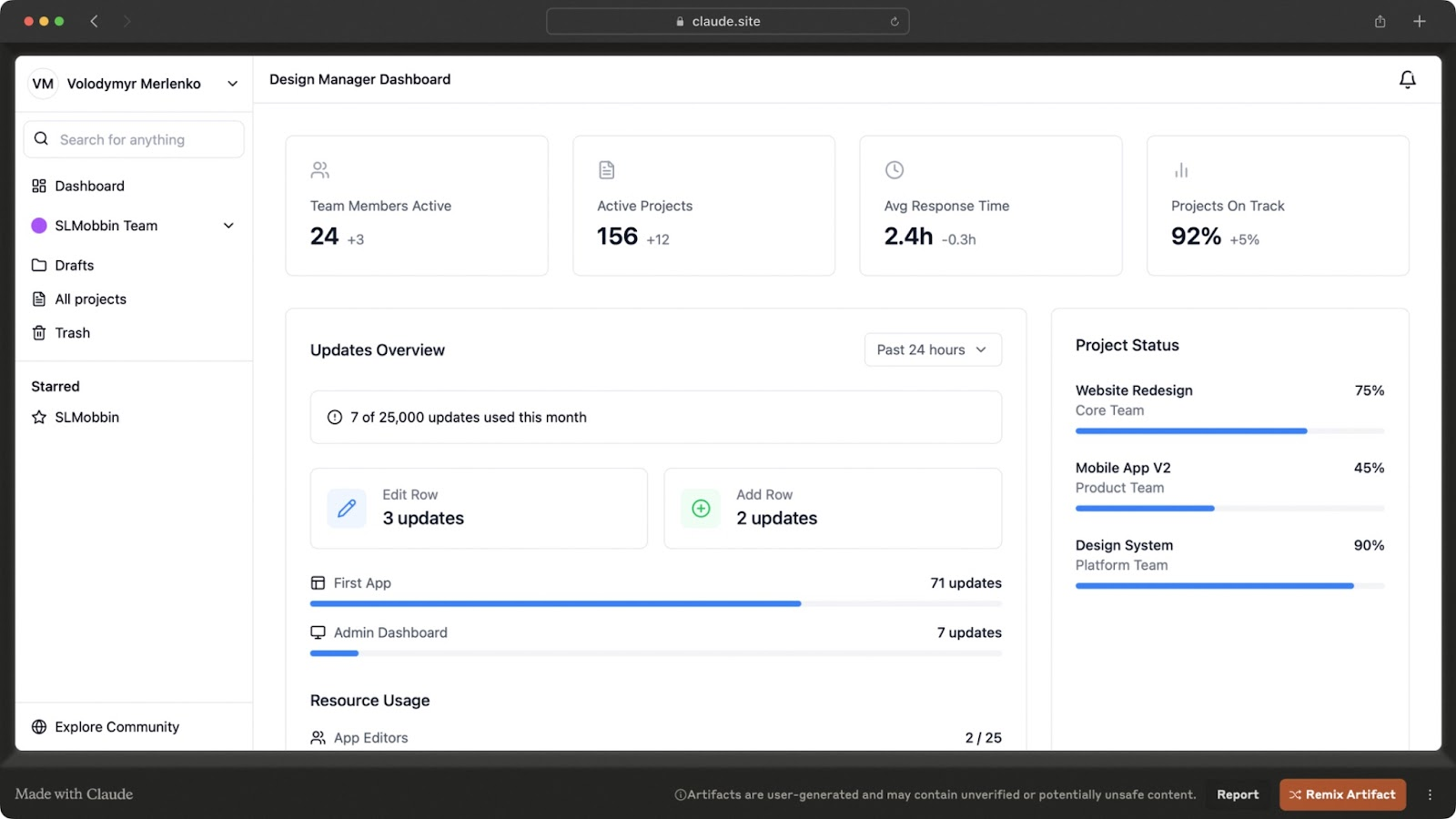

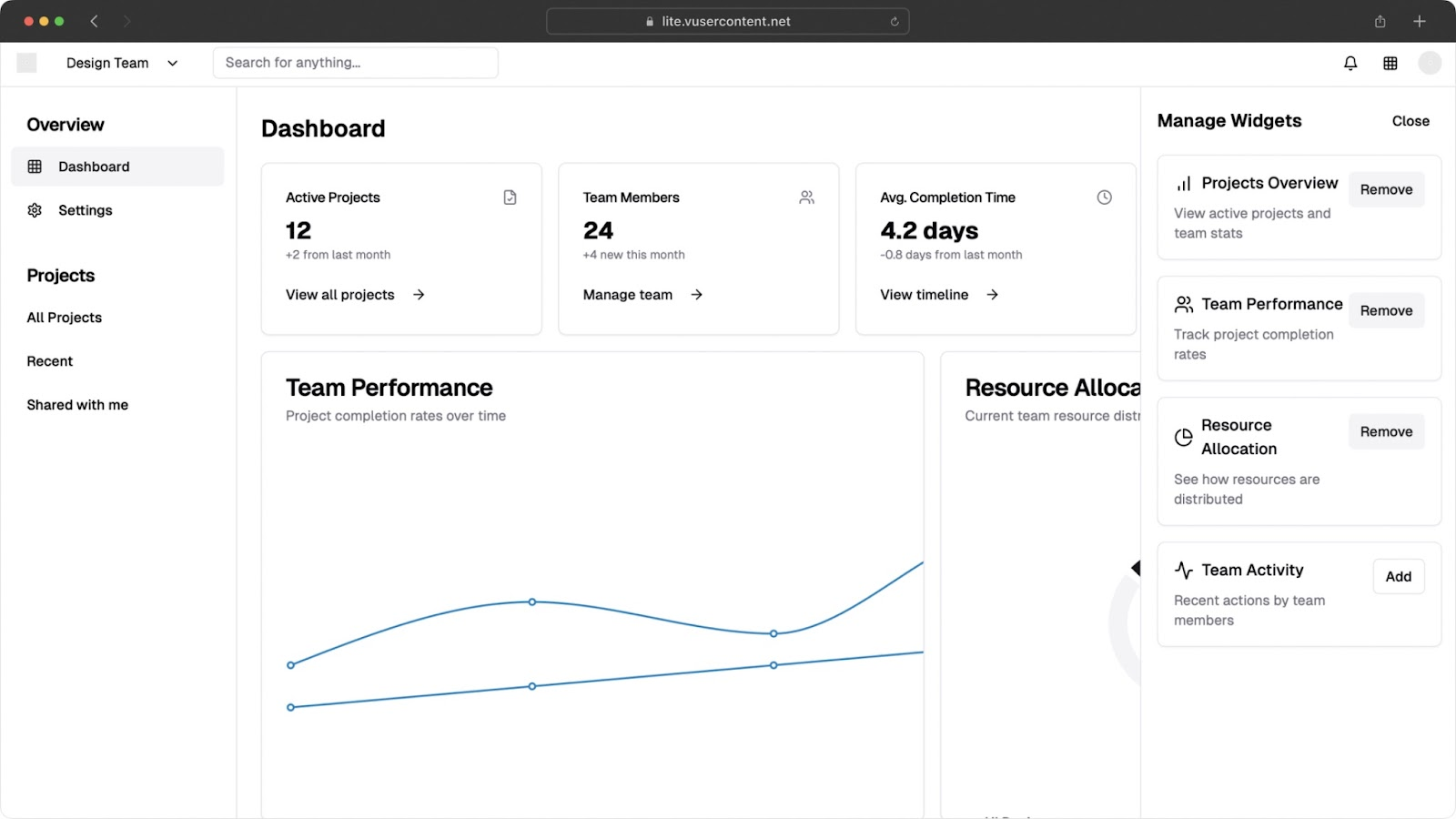

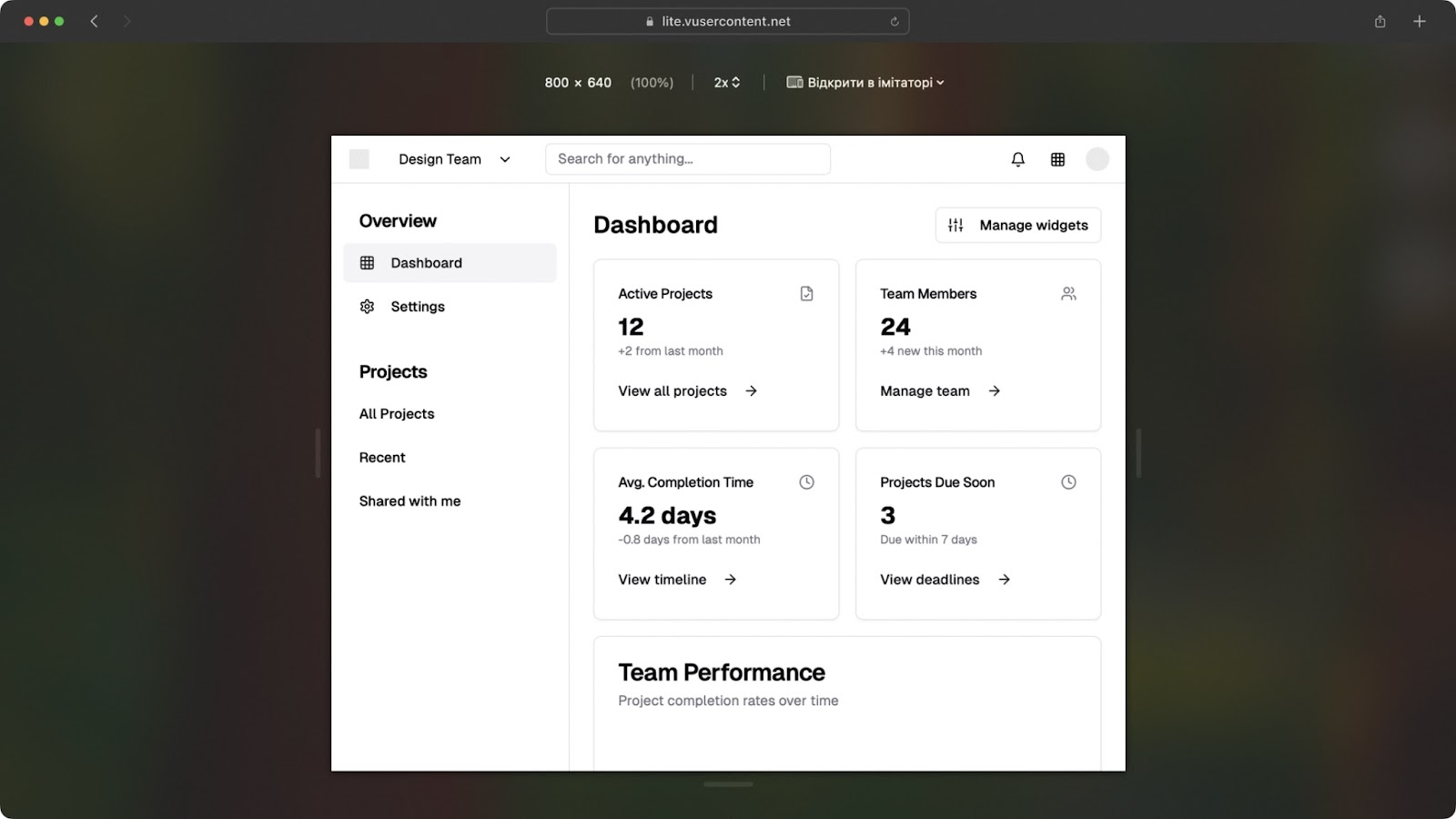

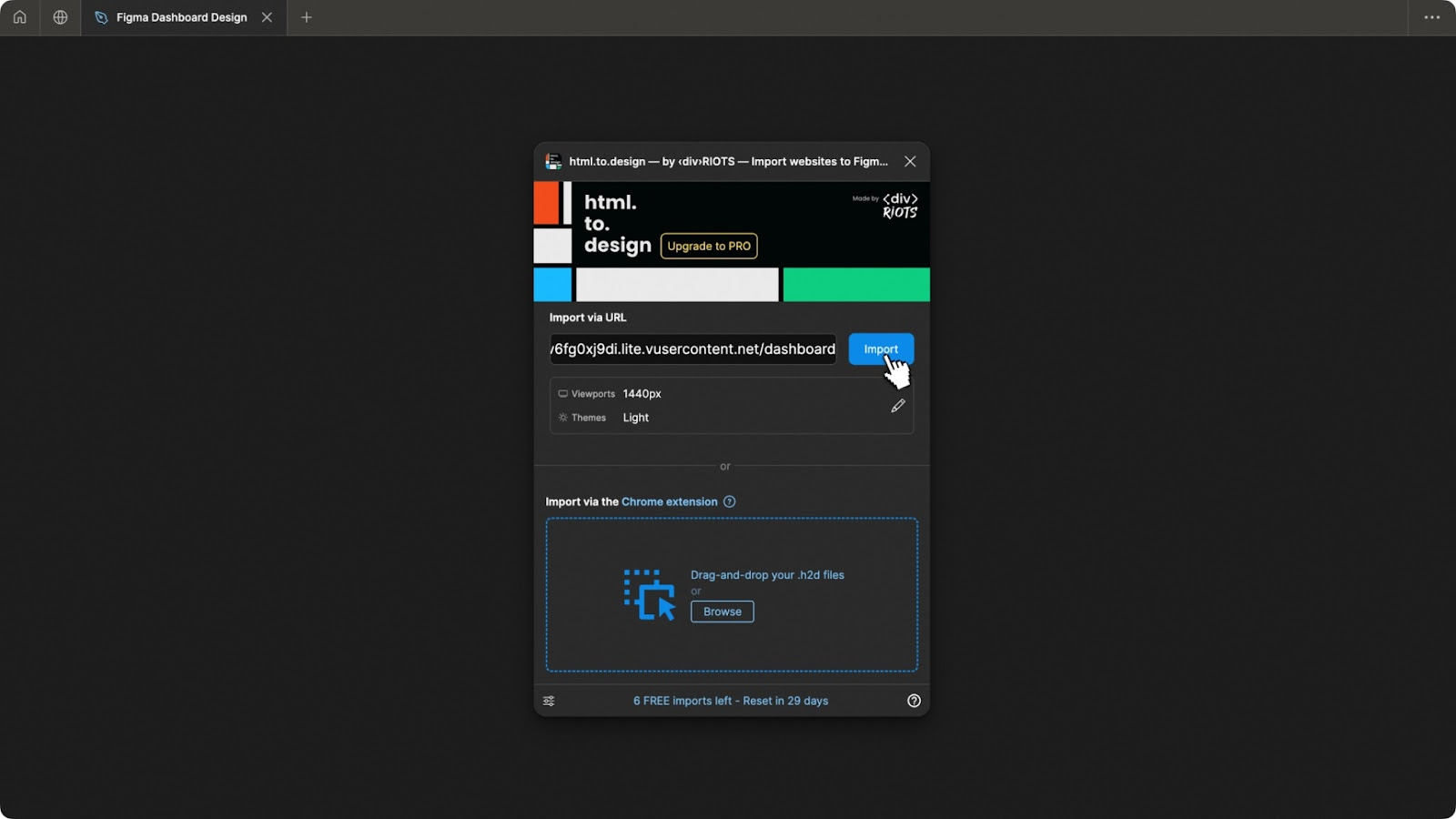

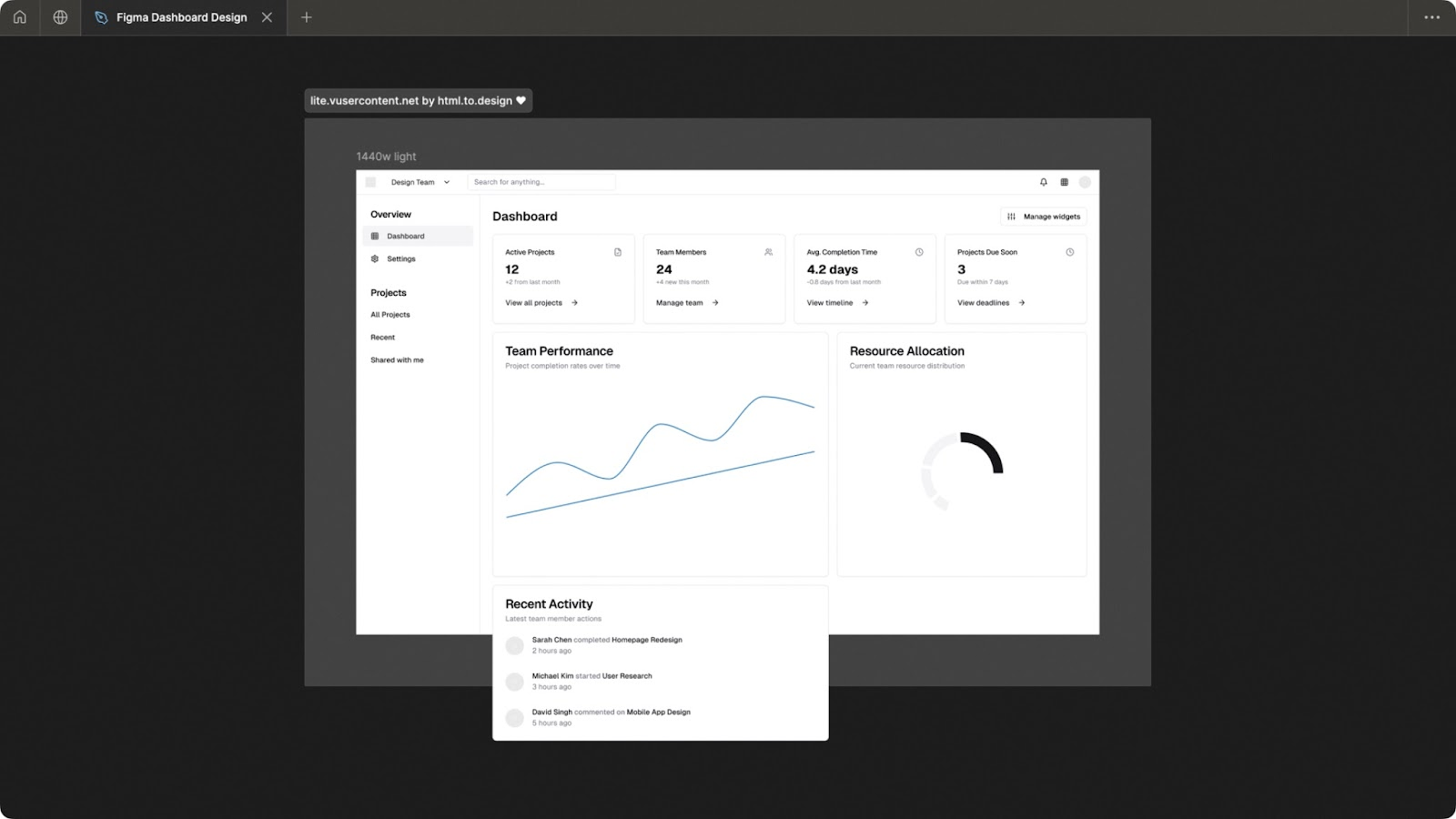

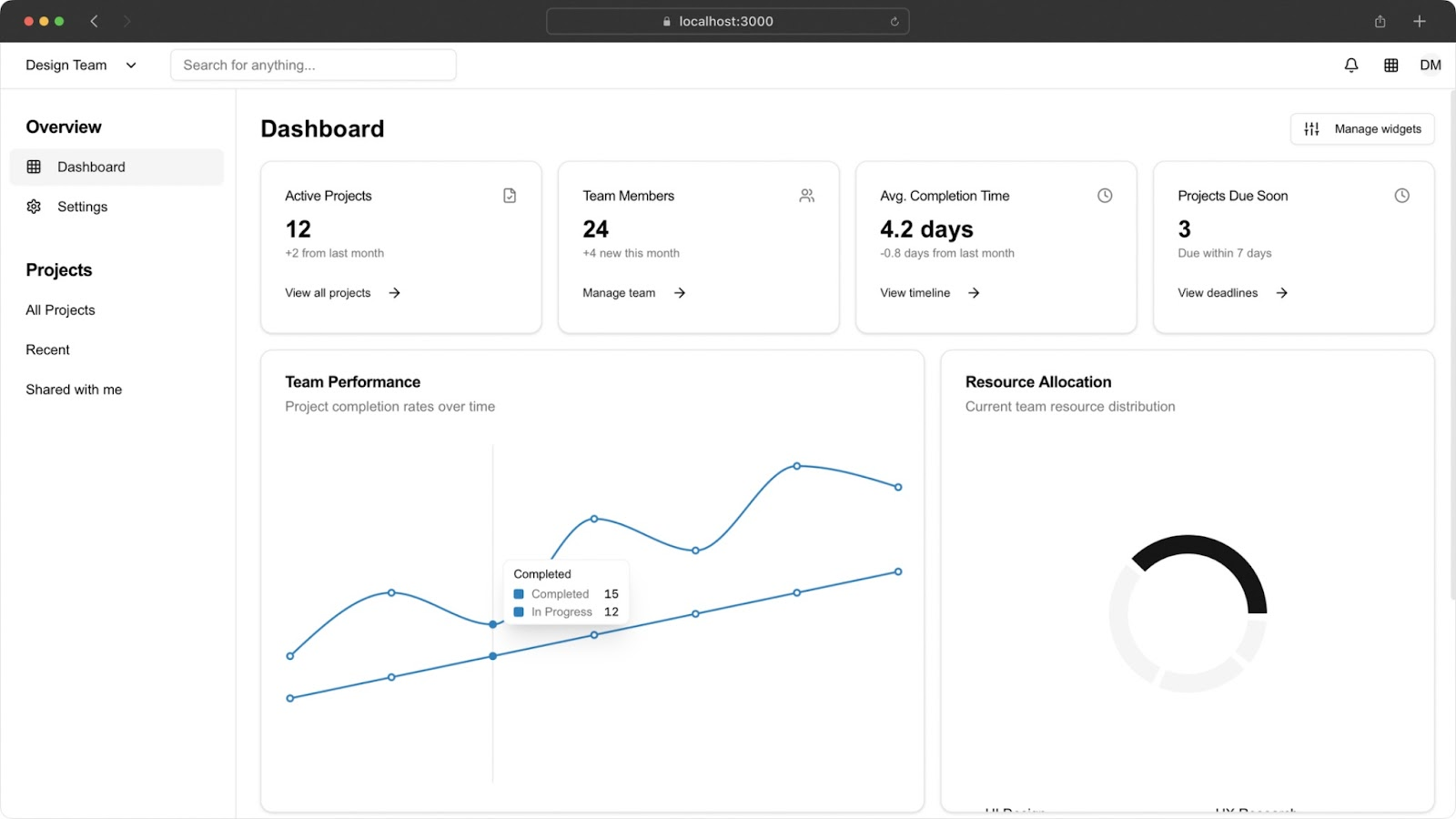

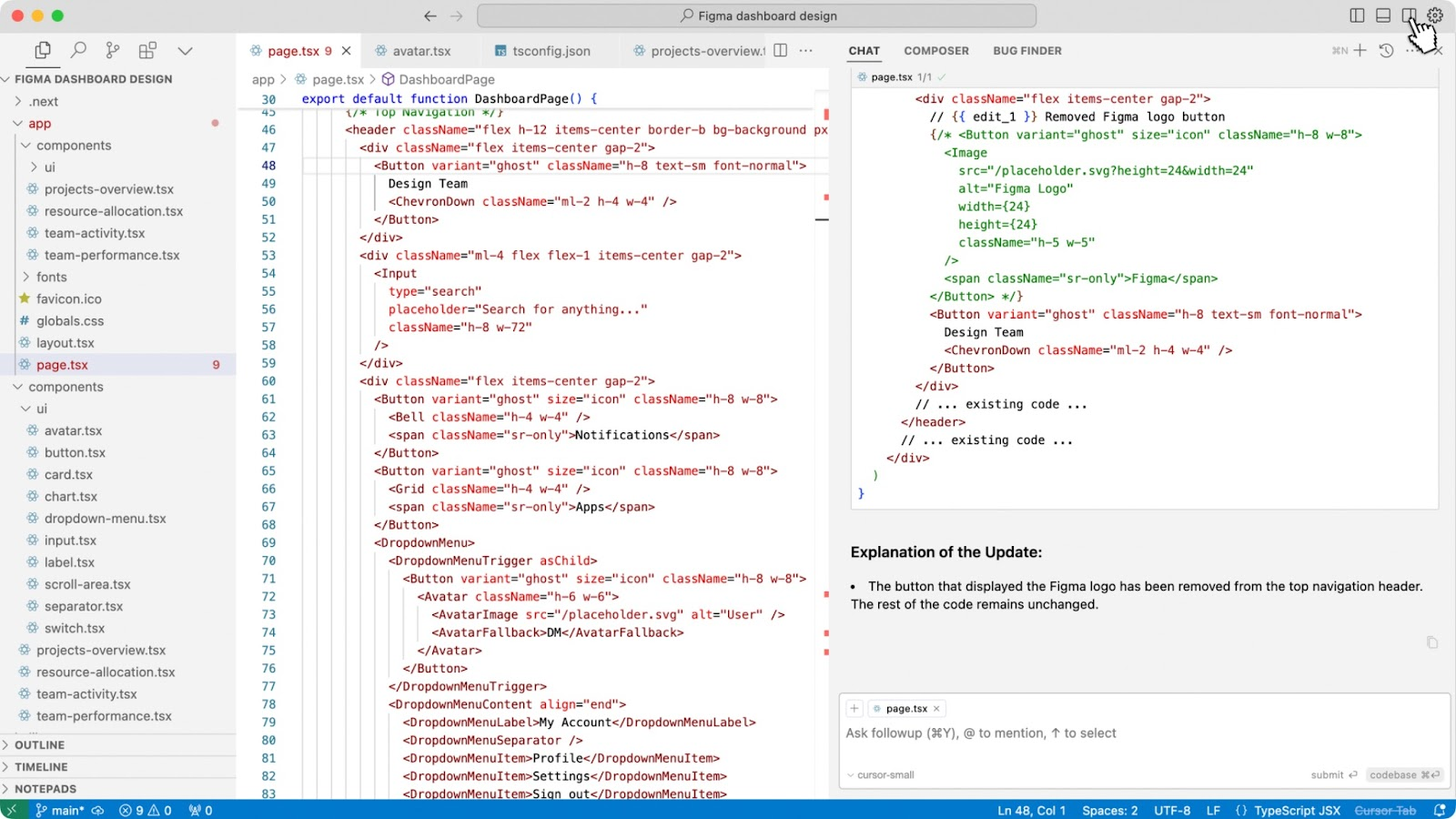

Input-to-UI

This is where things get exciting.

Instead of spending hours drawing boxes in Figma, you can describe what you want in plain English and let AI handle the heavy lifting.

Here are some ways I use it:

-

user research tools: Generating interactive tests like tree testing and hooking them up to a spreadsheet to track results automatically;

-

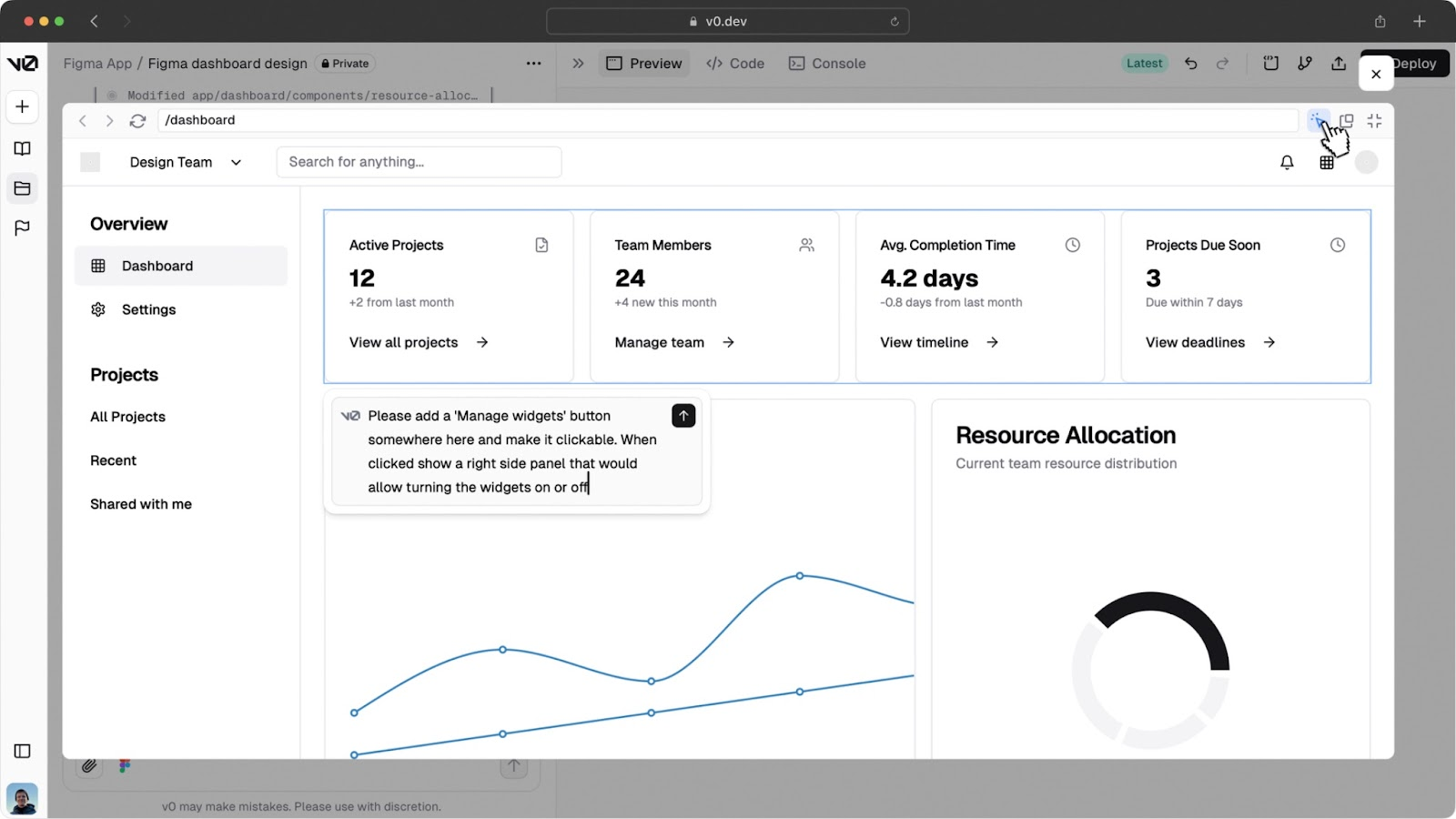

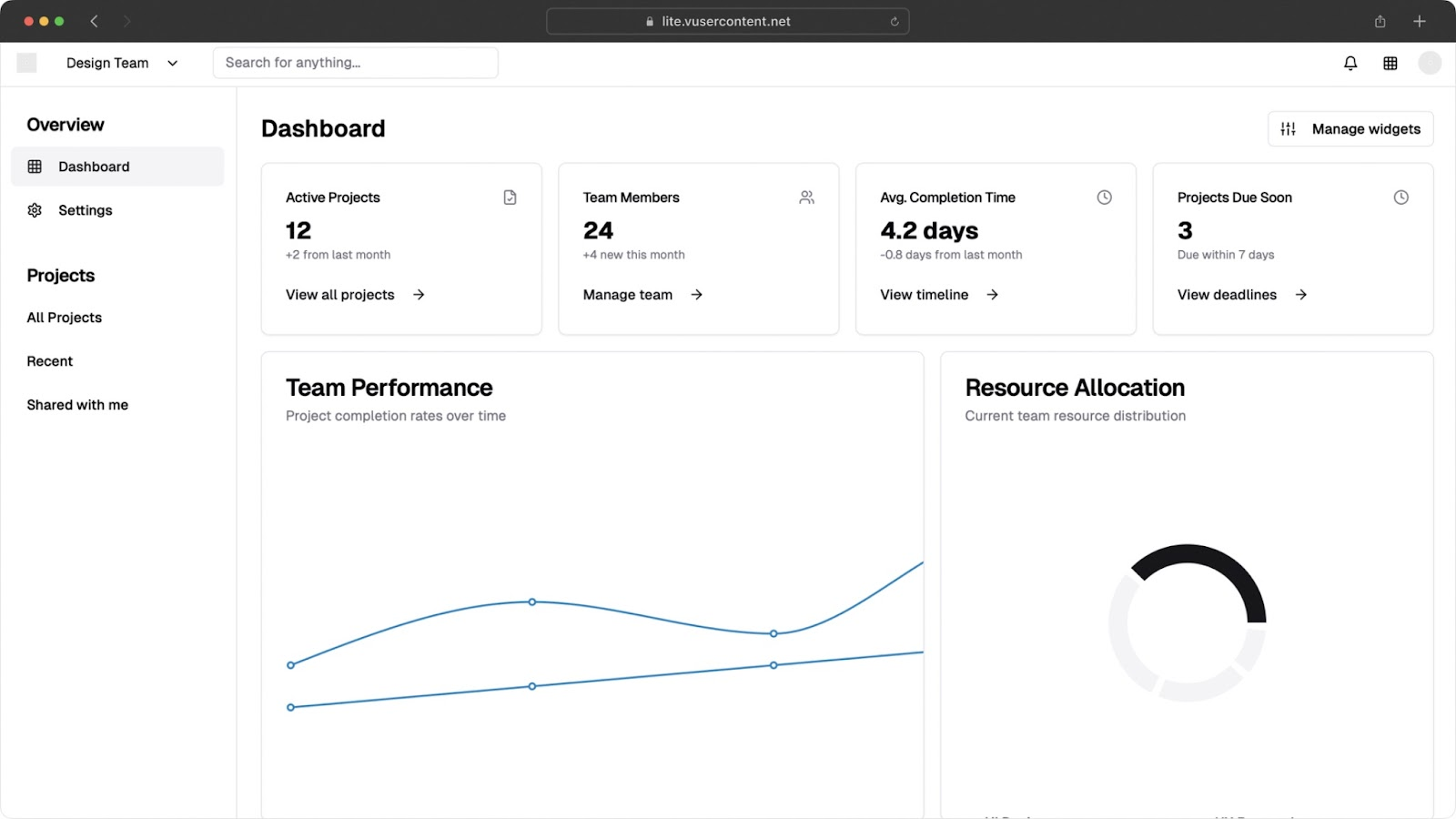

wireframing with words: Writing natural language prompts instead of drawing boxes. It feels odd at first, but it saves a ton of time;

-

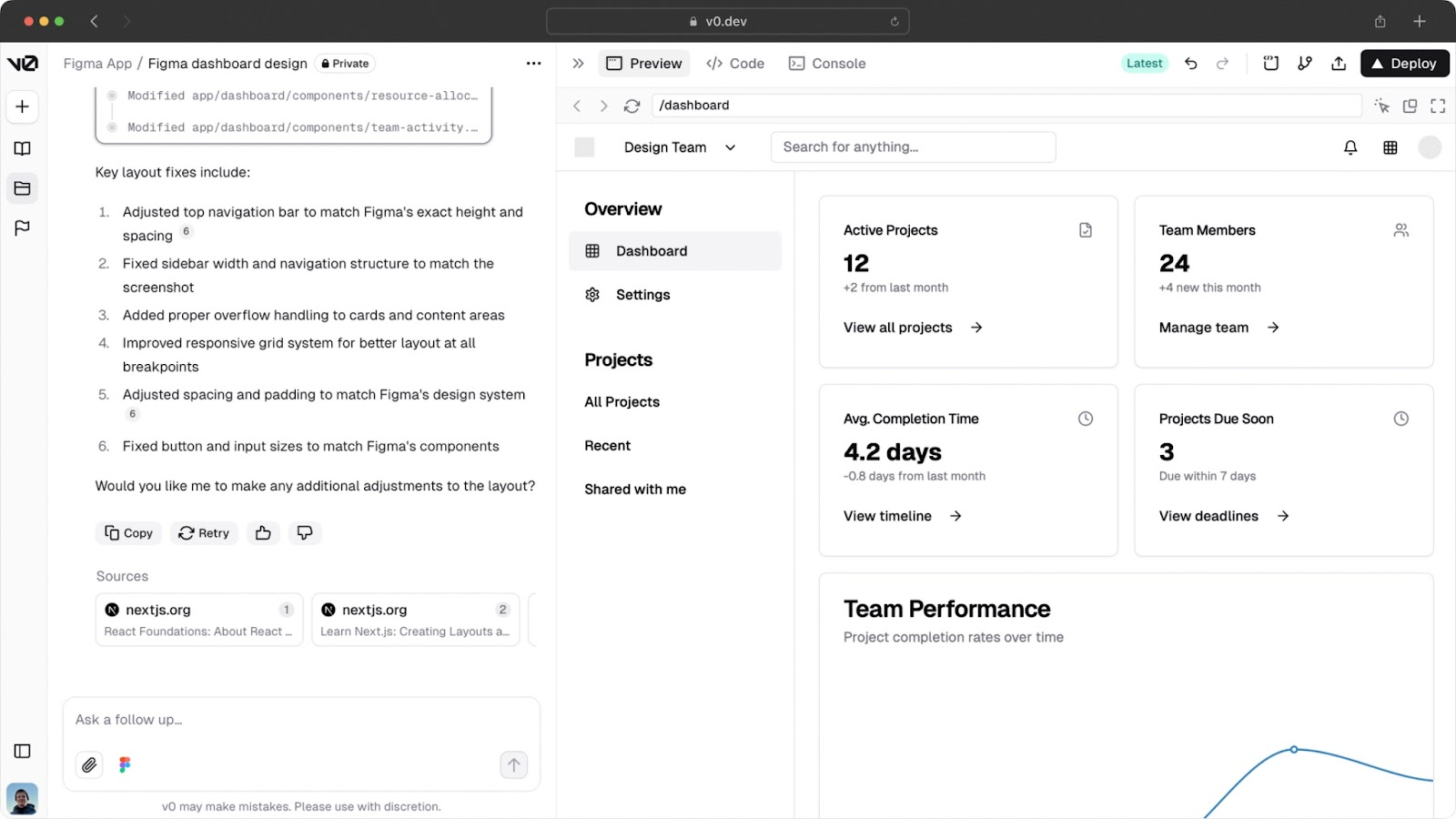

high-fidelity UI: Producing surprisingly polished mockups. Styling is still a bit limited, but it’s evolving fast;

-

complex prototypes: Building multi-step flows or even full mini-projects by just chatting with the AI. You don’t need deep coding knowledge, just a basic sense of how components should interact.
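To make the tree-testing item less abstract, here is a rough sketch of the kind of logic an AI-generated tree test might contain. It is not the tool shown in the screenshots: the menu structure, the participant names, and the function names (`path_exists`, `score_task`, `success_rate`, `to_csv`) are all hypothetical, and CSV output stands in for the spreadsheet hookup, since any spreadsheet app can import it.

```python
import csv
import io

# Hypothetical navigation tree for the test; nested dicts are menu levels.
TREE = {
    "Home": {
        "Account": {"Billing": {}, "Profile": {}},
        "Help": {"Contact us": {}, "FAQ": {}},
    }
}


def path_exists(tree: dict, path: list[str]) -> bool:
    """Return True if every step of `path` is a real branch of the tree."""
    node = tree
    for label in path:
        if label not in node:
            return False
        node = node[label]
    return True


def score_task(tree: dict, correct_path: list[str], responses: dict) -> list[dict]:
    """Score one task: a response succeeds if it matches the correct path."""
    rows = []
    for participant, path in responses.items():
        if not path_exists(tree, path):
            raise ValueError(f"{participant} chose a path outside the tree: {path}")
        rows.append({
            "participant": participant,
            "path": " > ".join(path),
            "success": path == correct_path,
        })
    return rows


def success_rate(rows: list[dict]) -> float:
    """Share of participants who found the correct destination."""
    return sum(row["success"] for row in rows) / len(rows)


def to_csv(rows: list[dict]) -> str:
    """Serialize results as CSV text that a spreadsheet can import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["participant", "path", "success"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    # Task: "Where would you update your payment method?"
    responses = {
        "P1": ["Home", "Account", "Billing"],
        "P2": ["Home", "Help", "FAQ"],
    }
    rows = score_task(TREE, ["Home", "Account", "Billing"], responses)
    print(f"Success rate: {success_rate(rows):.0%}")
    print(to_csv(rows))
```

You wouldn’t write this by hand. The point is that a tree test is a small, well-defined piece of logic, which is why describing it in plain English is usually enough for the AI to build it.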

Live assistance

This is still a newer area for designers, but when it works, it honestly feels like magic.

Instead of treating AI as something you “check in with” after the fact, you can have it by your side while you work.

Here are two of my favorite use cases:

- brainstorming: Sharing your Figma screen with Gemini and bouncing ideas back and forth. It’s like having a teammate in the room, except this one can instantly suggest alternative layouts or flag technical constraints;
- language coaching: Using ChatGPT as a personal English tutor. Super handy if you’re working with global teams and want to get more confident in communication.

An approach that always works

After using AI daily for design, I keep coming back to one simple loop that delivers solid results no matter which tool you’re using. Think of it as the “AI best practices” checklist:

-

work in English: It’s simple. Most AI models are trained on English content. You’ll get richer, more accurate answers in English than in any other language.

-

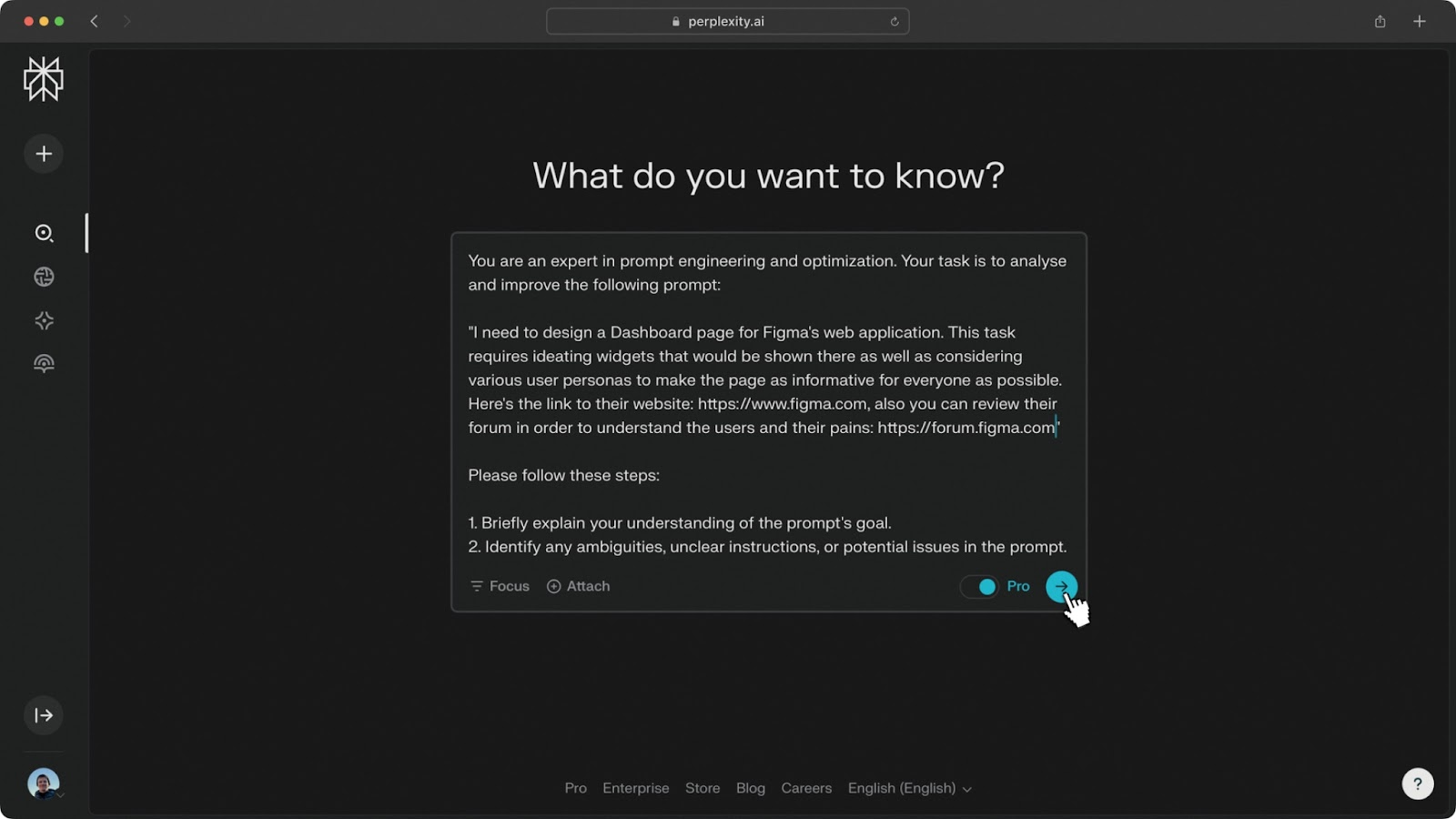

write the most detailed prompt you can: Bad outputs usually come from vague inputs. Even though AI is improving at filling in the gaps, the more context you give, the better the results.

-

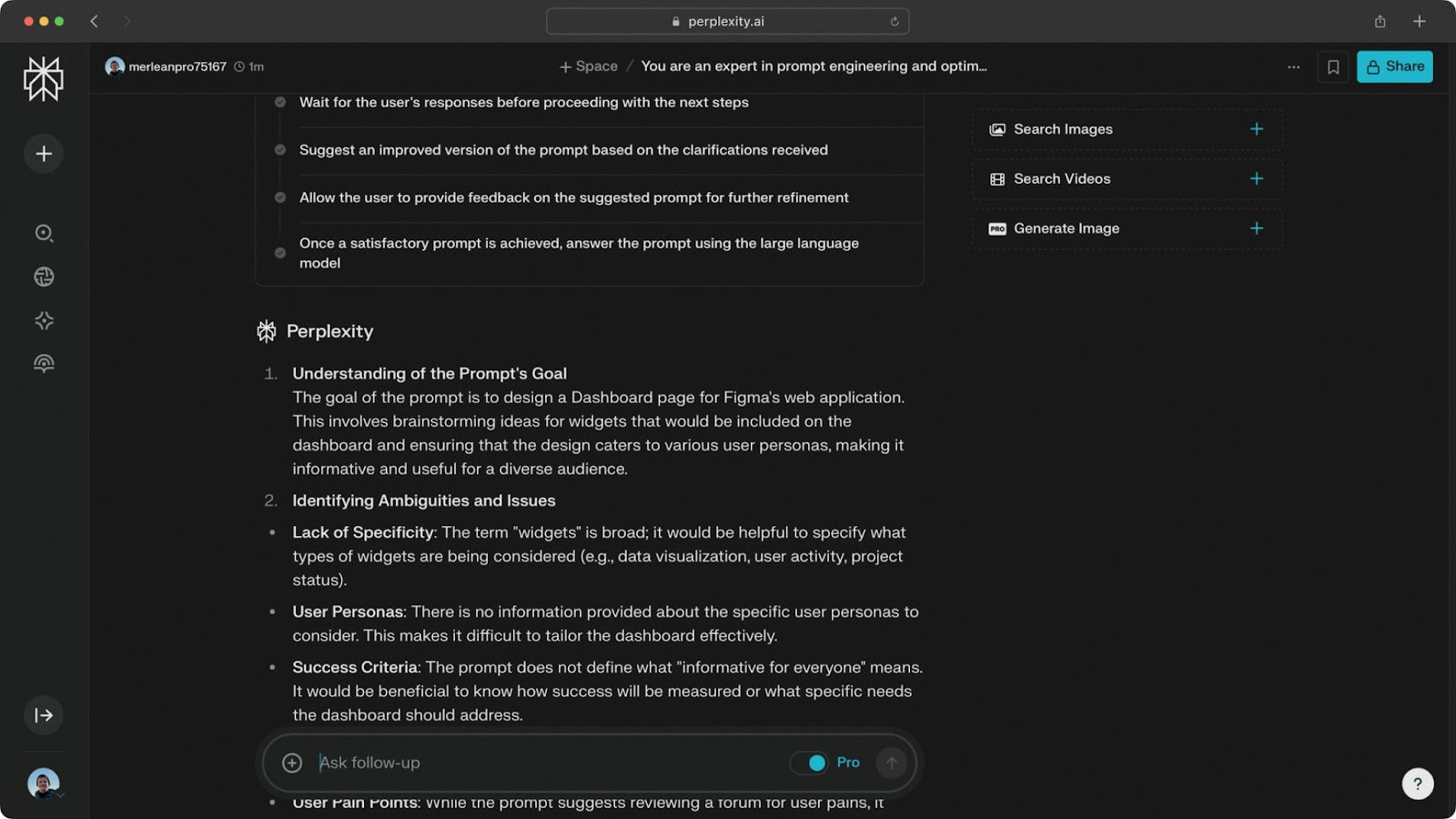

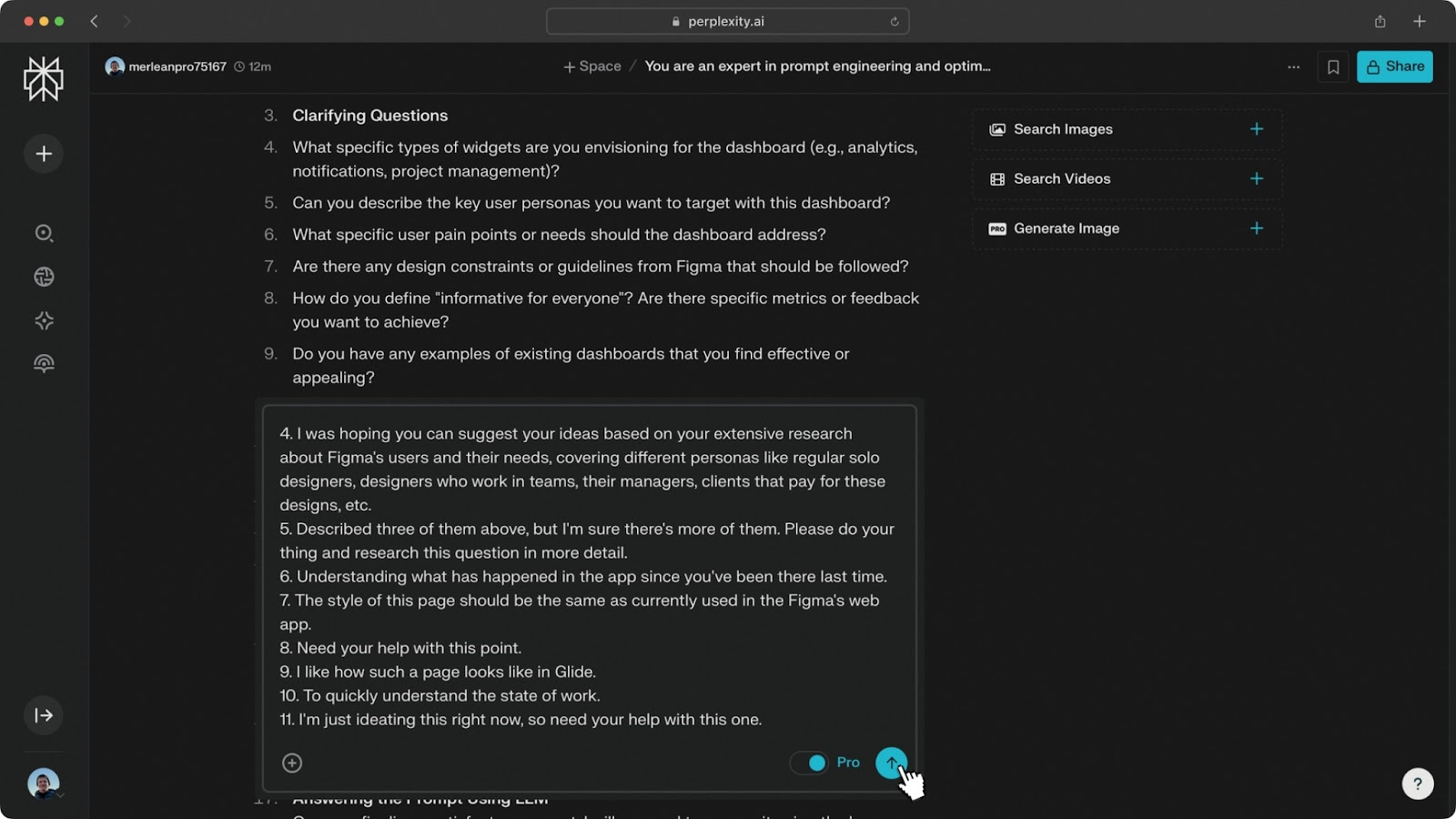

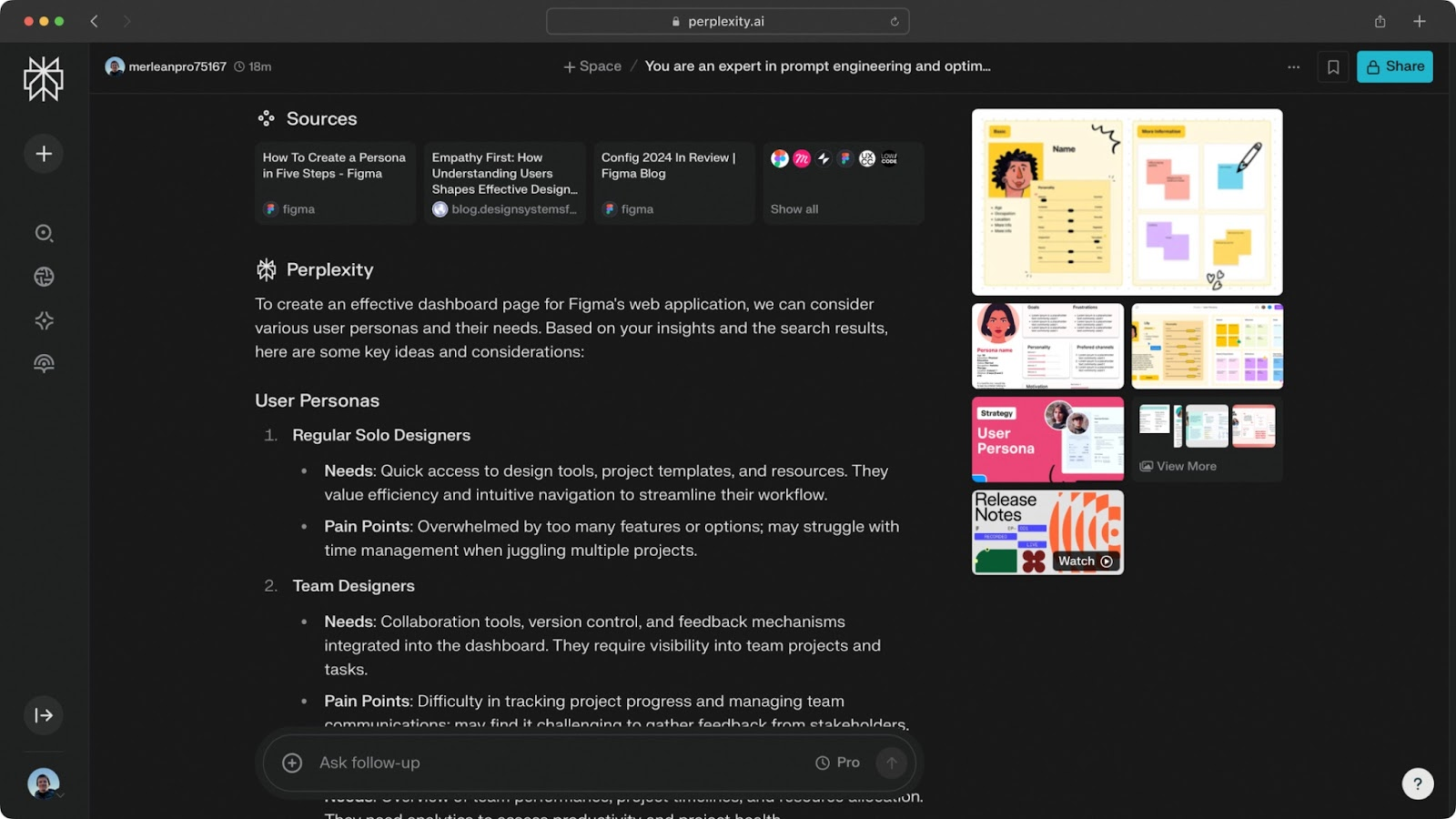

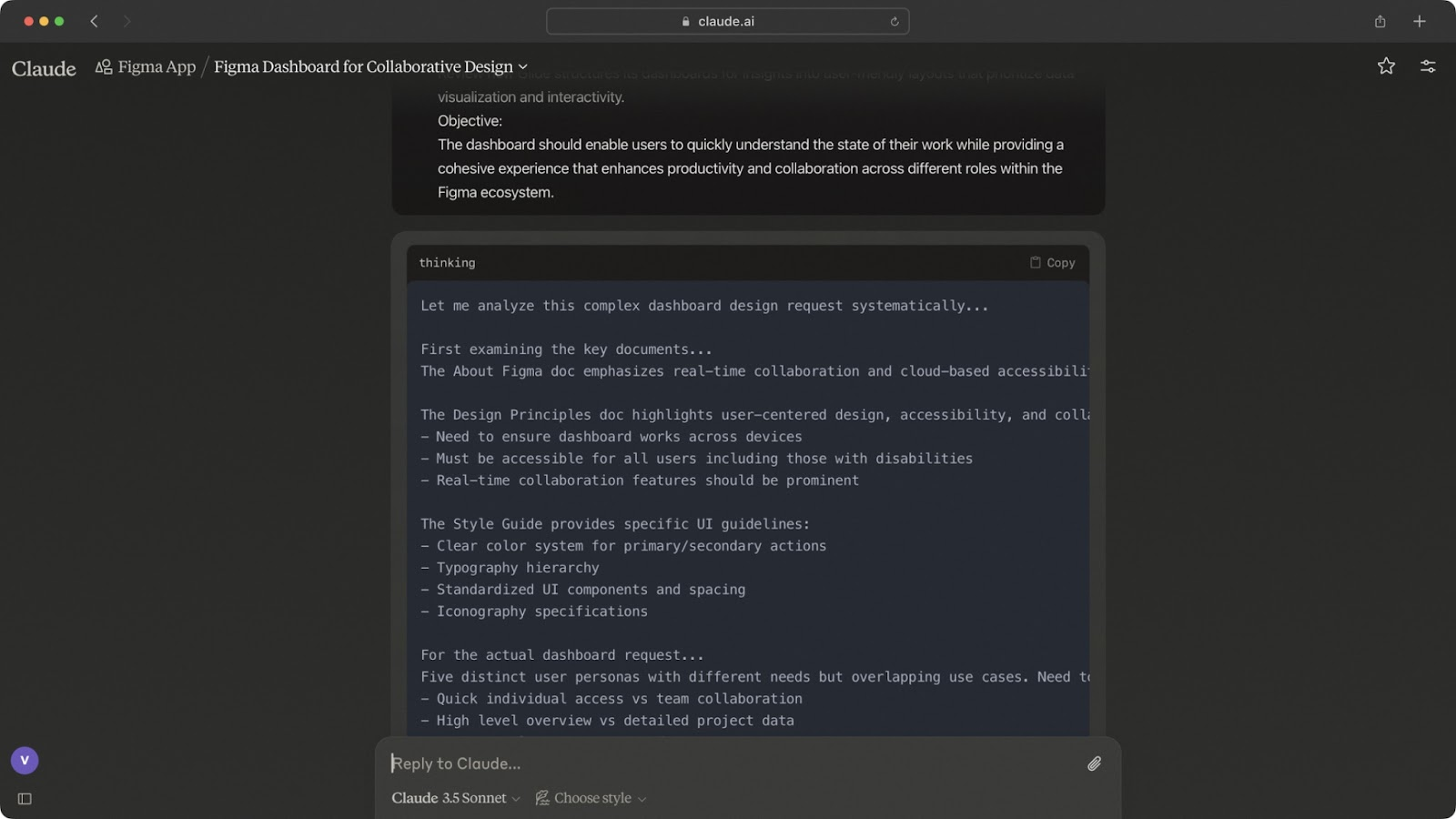

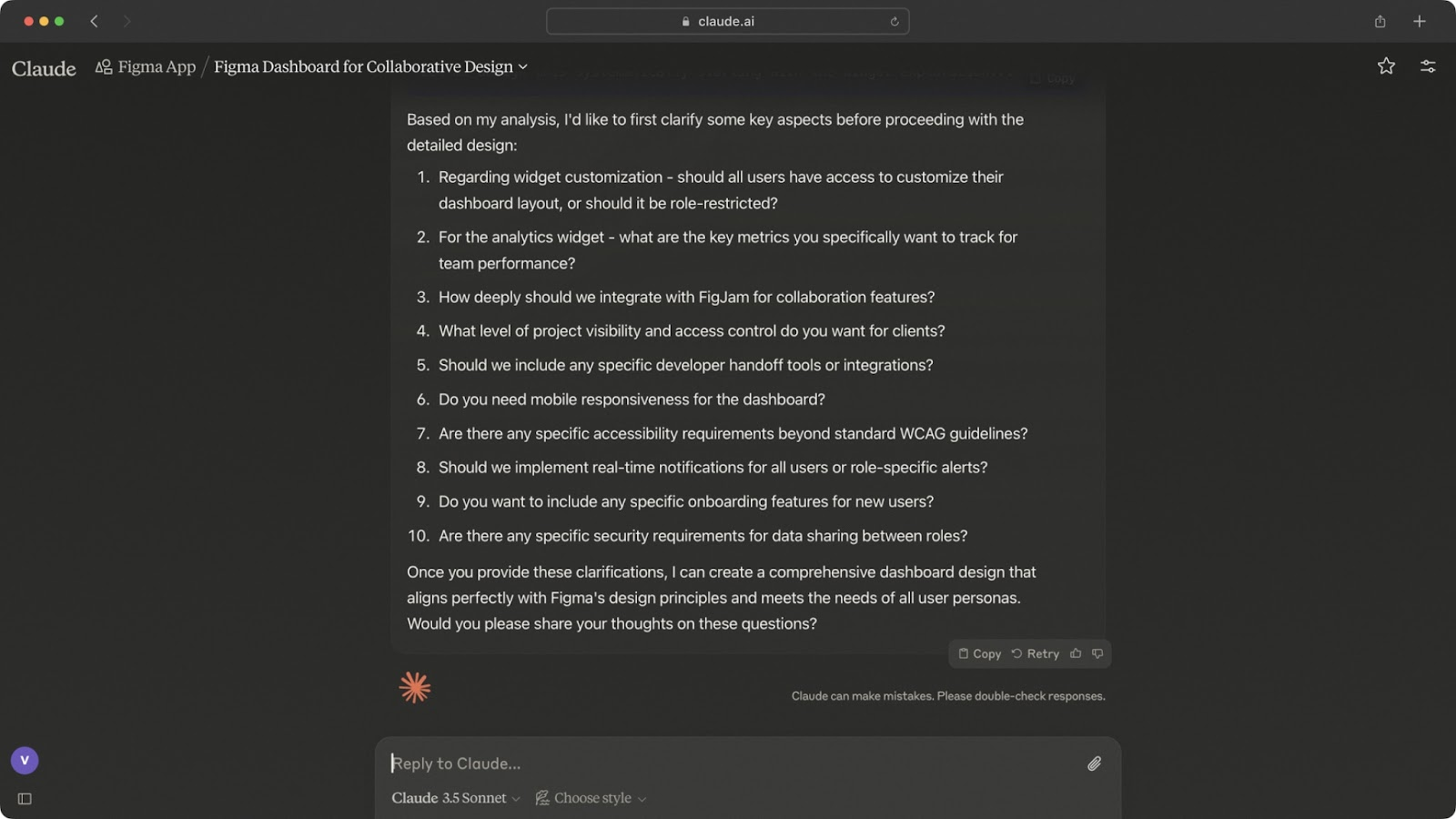

let the AI interview you: Send your prompt to Claude or Perplexity and ask it to come back with clarifying questions. It forces you to think about details you might have missed, while giving the model the exact context it needs.

-

answer thoroughly: Don’t rush this part. About 7-10 questions are usually enough to cover the edges without wasting time.

-

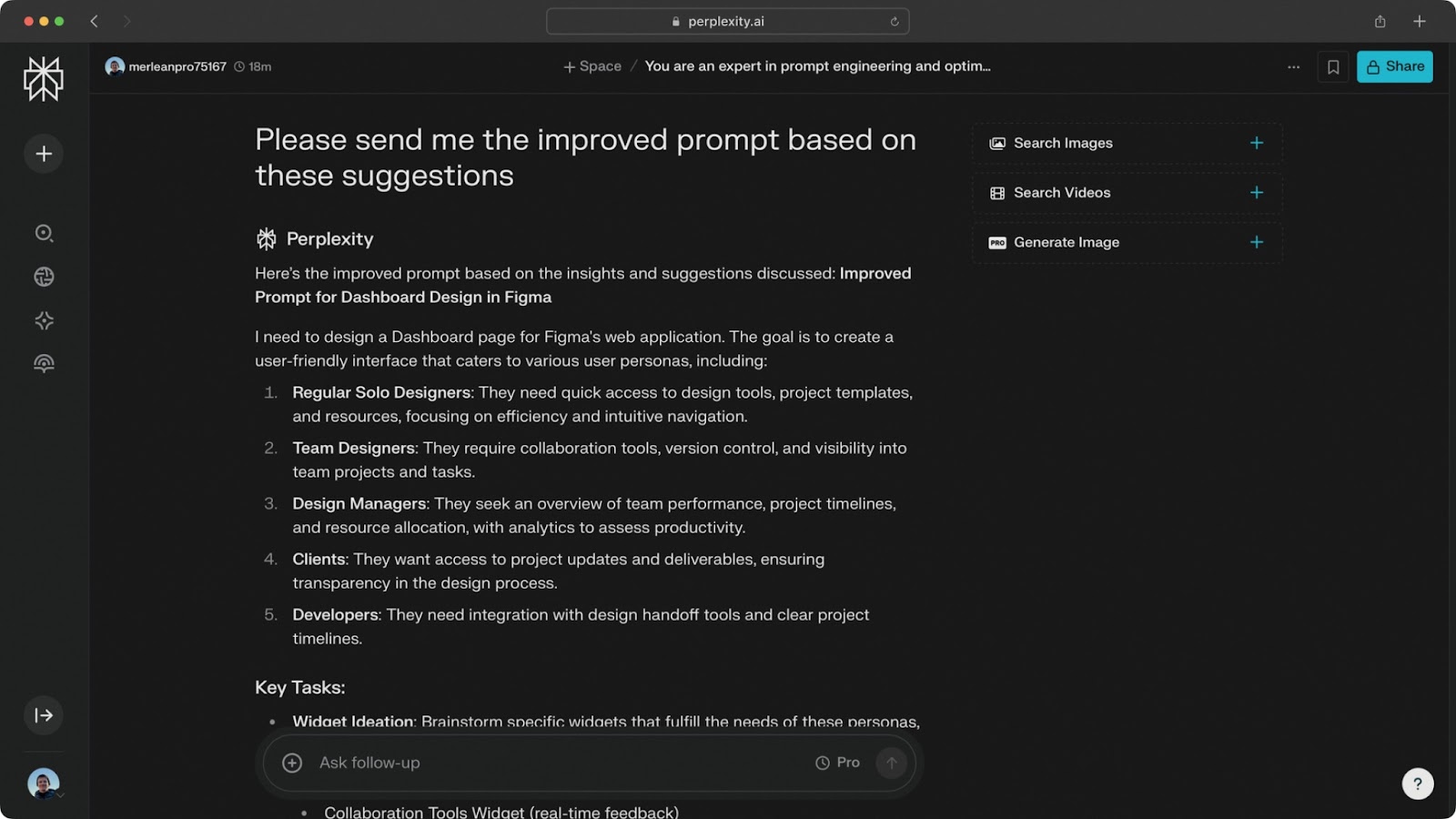

run the improved version: Feed the refined prompt back in. The output will be clearer and much more useful.

This loop turns your AI design assistant from a guessing machine into a collaborator. Instead of throwing half-baked prompts at it and hoping for the best, you’re building context together, and that’s where the real value shows up in design work.
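If it helps to see the loop as a template, here is a minimal sketch of the “interview, answer, rerun” steps as plain text building. Nothing here calls an AI service: you would paste the generated text into Claude or Perplexity yourself. The function names, the wording of the instructions, and the fitness-app example are my own assumptions for illustration, not a fixed recipe.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    """One clarifying question from the model and your reply to it."""
    question: str
    answer: str


def build_interview_prompt(draft: str, max_questions: int = 10) -> str:
    """Wrap a draft prompt so the model asks questions before answering."""
    return (
        f"Here is my draft prompt:\n\n{draft}\n\n"
        f"Before you answer, ask me up to {max_questions} clarifying questions "
        "about anything that is ambiguous or missing. "
        "Do not produce the result yet."
    )


def build_refined_prompt(draft: str, answers: list[Answer]) -> str:
    """Fold your answers back into the draft as explicit context."""
    context = "\n".join(f"- {a.question} {a.answer}" for a in answers)
    return f"{draft}\n\nAdditional context:\n{context}"


if __name__ == "__main__":
    # Hypothetical example: the draft and the answers are made up.
    draft = "Create a dashboard design for a fitness app."
    print(build_interview_prompt(draft, max_questions=7))

    answers = [
        Answer("Who is the primary user?", "Personal trainers with 20-30 clients."),
        Answer("Which platform?", "Desktop web first."),
    ]
    print(build_refined_prompt(draft, answers))
```

The design choice worth noting is that the refined prompt keeps your original draft intact and appends the answers as a list, so you can see exactly which context was added in each round.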

Practical example #1: Prompt engineering with Perplexity

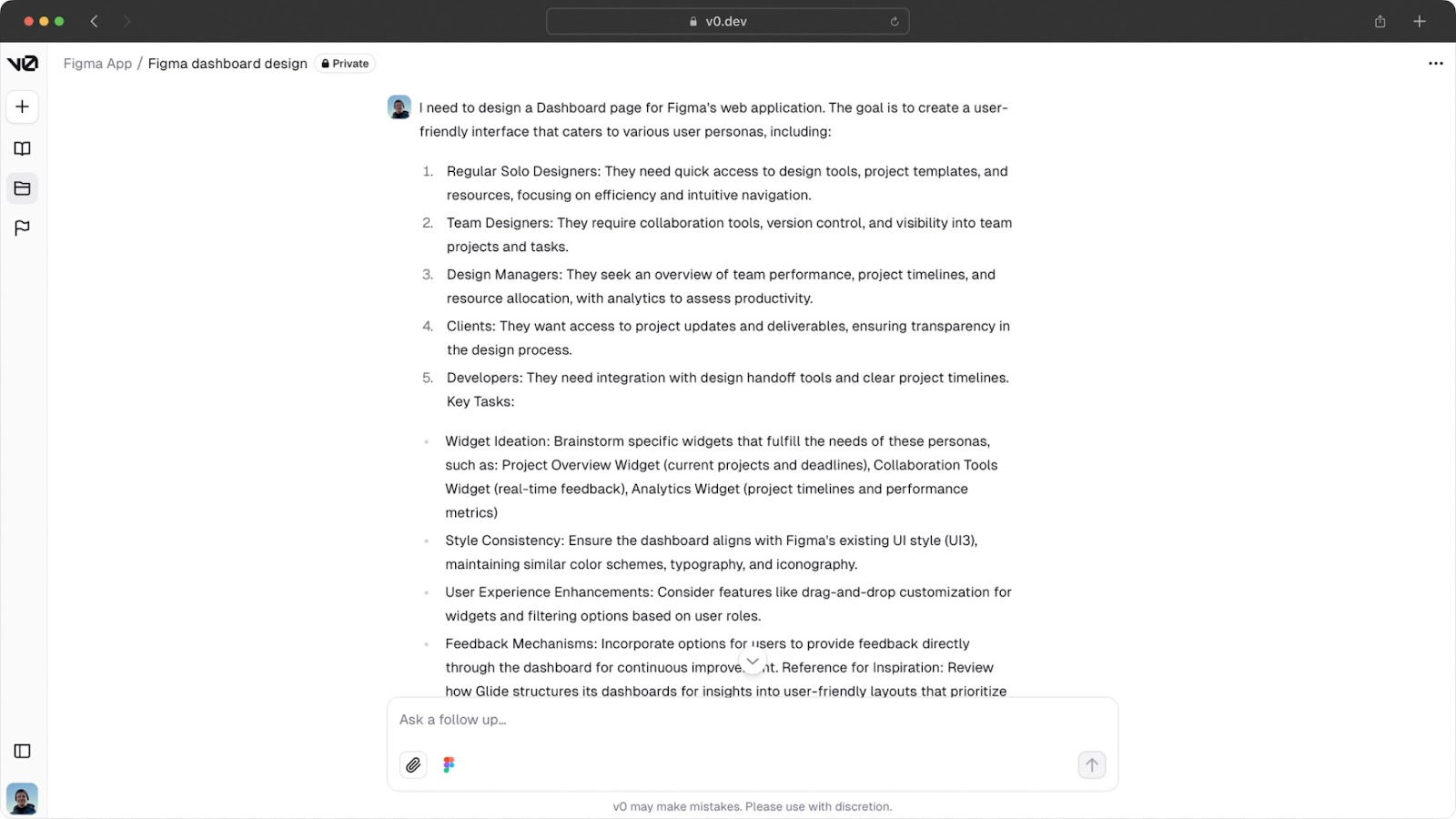

One of the easiest ways to sabotage AI is a weak prompt. If you just type something like “Create a dashboard design” into Claude, you’ll get a generic result that still needs tons of iterations. It wastes time and barely scratches the surface of what AI can do.

That’s why I started using a simple but powerful method I call prompt engineering. It works in any AI chat tool, but I’ve had the best results with Claude and Perplexity (especially when the prompt includes links or needs live web data).

Here’s the framework I use: