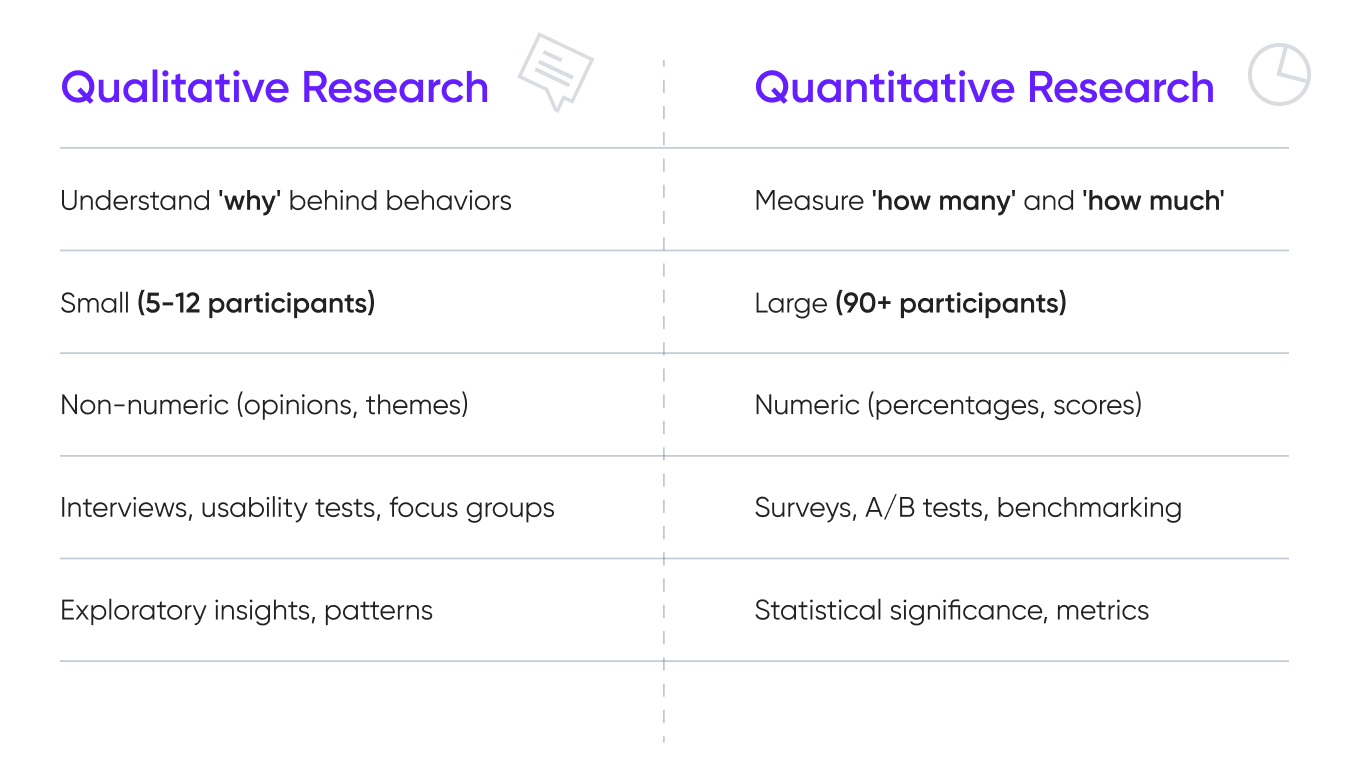

You don’t need a stadium full of people to figure out if your app needs work.

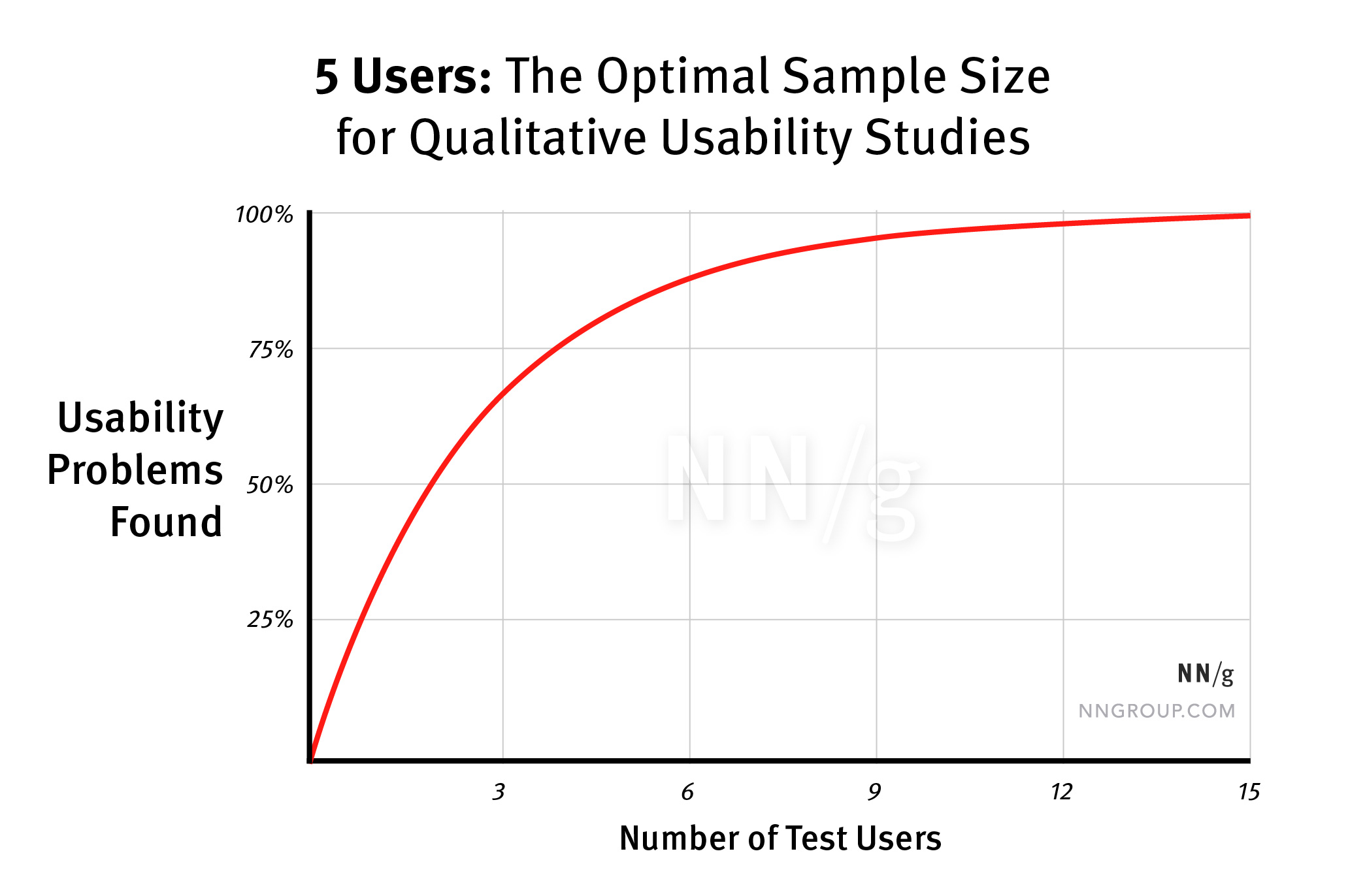

Five users can surface most of the usability problems that 50 would. Ten interviews can reveal what’s broken in your design. A well-run survey with 100 randomly chosen participants can give you a read on what your entire user base thinks, to within roughly ten percentage points, without breaking the bank.
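If you want to sanity-check those numbers yourself, here is a rough back-of-the-envelope sketch. It rests on assumptions that are not part of this guide: the survey is a simple random sample, the margin of error uses the standard normal approximation at 95% confidence, and the usability estimate uses the commonly cited problem-discovery model with a per-user detection rate of 31%. Your own rate may be quite different. The function names are made up for illustration.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error for a surveyed proportion.

    Assumes a simple random sample and the normal approximation.
    p=0.5 is the worst case; z=1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


def share_of_problems_found(n_users, p_detect=0.31):
    """Expected share of usability problems seen by at least one tester.

    p_detect is an assumed chance that a single tester hits a given
    problem. 0.31 is a commonly cited average, not a universal constant.
    """
    return 1 - (1 - p_detect) ** n_users


if __name__ == "__main__":
    for n in (100, 400, 1000):
        print(f"survey of {n}: about ±{margin_of_error(n) * 100:.1f} points")
    for n in (5, 10, 50):
        print(f"{n} testers: about {share_of_problems_found(n) * 100:.0f}% of problems")
```

Under these assumptions, 100 responses land at roughly ±10 points, and quadrupling the sample only halves that margin. That is why the extra precision gets expensive fast.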

The trick is knowing exactly when you need precision and when smaller, focused samples will do the job just as well. This guide is your cheat sheet to saving money and getting the information you need to build products that work and wow.